
==Authors’ Contribution Statement==

Dr. Mohandas and Dr. Rajesh G conceived of the presented idea. Arunadevi Thirumalraj developed the theory and performed the computations. Dr. Mohandas and Dr. Rajesh G verified the analytical methods. All authors discussed the results and contributed to the final manuscript.

Revision as of 19:06, 20 September 2023

Abstract

In smart city applications, electric vehicles (EVs) are rapidly gaining popularity because they help cut carbon emissions. Numerous environmental conditions, including terrain, traffic, driving style, and temperature, affect the amount of energy an EV needs to operate. However, one of the biggest obstacles is the burden that widespread EV deployment places on power grid infrastructure. Smart scheduling algorithms can handle the rising public charging demand, and they can be improved by using data-driven tools and procedures to study EV charging behaviour. Research on historical charging data has focused on predicting quantities such as temperature, departure time, and energy requirements. Weather, traffic, and surrounding events have mostly been ignored, although they could improve representations and predictions. We developed a Deep Residual Attention Network (DRA-Net) to recognize EV charging patterns. To minimize information loss, its Res-Attention component uses dense connections and small (3 × 3) convolutional kernels. In addition, an Artificial Butterfly Optimisation Algorithm (BOA) model is used to fine-tune the DRA-Net's hyper-parameters. We highlight the significance of traffic and weather information for charging behaviour prediction, and our experimental forecasts show a considerable improvement over prior work on the same dataset. This in-depth study helps map out the future of EV research, as EVs are set to have a significant impact on the automotive industry.

Keywords: Electric vehicle; Deep Residual Attention Network; Artificial Butterfly Optimization Algorithm; Charging behaviour information; Energy needs.

Introduction

Vehicle energy consumption has been extensively studied and evaluated. Until very recently, research focused primarily on how driving styles, ITS technologies, and other intelligent systems influence fuel usage [1]. Recently, the energy consumption of electric vehicles (EVs) has been included in these analyses. EV energy consumption studies fall into several categories according to their goals and methods. The literature documents energy models built for optimization [2], analyses of the factors affecting energy consumption [3], and estimates of worldwide energy consumption [4] resulting from the adoption of EVs or hybrids. The energy model can also be used to predict the (all-electric) range [5]. Energy consumption is determined in one of two ways: from measured data [6] or test cycles, or by building a vehicle model that simulates it. Real-world measurements are preferable for obtaining more accurate consumption figures [7], but this approach depends on the available data and statistical modelling and is typically decoupled from the vehicle's behaviour. Modelling the vehicle's dynamics and drivetrain behaviour instead provides a direct link to these elements, making it easier to pinpoint the powertrain characteristics that have the greatest impact on fuel economy [8].

At least for personal automobiles and other light transport vehicles, EVs are generally agreed to be the future of environmentally responsible transportation [9]. This is mainly because they produce zero local emissions [10]. However, issues remain with battery charging and with the energy mix that powers EVs: the electricity generation required for EV operation may result in a greater overall CO2 output [11]. Over the last few decades, a growing number of manufacturers have adopted electric propulsion technology as a result of the many studies completed in this area.

However, current technology for powering EVs is still a long way from delivering sophisticated and energy-efficient technical solutions, and certain pressing challenges in this area must be addressed immediately [12]:

- Developing a battery technology that offers short charging times, a long lifecycle, operational safety, and lower production costs;

- Calculating well-to-wheel emissions due to the energy mix used in the charging process.

The obstacles stated above are among the real barriers to EVs' widespread penetration of the automotive market, but customers' perceptions and attitudes also play a crucial role. Several studies have examined customer and user sentiment towards EV uptake and purchase [13].

Despite this promising potential, some aspects still need improvement, such as charging time and the need for public charging. Although EV recharging times have become substantially shorter in recent years, they are still much longer than the time needed to refuel internal combustion engine cars [14]. Emerging technologies such as extremely fast and wireless charging must overcome several obstacles before they can be widely used. Because private charging stations are scarce, most EV owners must rely on public ones, which burdens the electrical system because of EVs' high power demands [15]. Uncoordinated charging should be avoided to prevent power grid deterioration and breakdowns, and managing charging station schedules more effectively is the best option. There is a wealth of literature on efficient data-driven scheduling, including optimization and metaheuristic strategies. Charging behaviour has also been analyzed using transaction data and interviews with EV drivers to account for the influence of psychological variables.

We propose a new method that addresses these drawbacks in three significant ways:

- 1. A redesigned DRA-Net with an improved mechanism for detecting EVs' charging behaviour, which greatly enhances the model's accuracy, resilience, and localization capabilities.

- 2. Unlike traditional models, DRA-Net recognizes targets against complicated backgrounds.

- 3. Classification accuracy is enhanced by the BOA model's optimal weight selection in the proposed model.

The rest of the paper is organized as follows. Section 2 reviews related work, Section 3 explains the proposed model, Section 4 discusses the experimental investigation, and Section 5 draws the conclusions.

2. Related works

Ullah et al. [16] developed an interpretable machine learning (ML) system to forecast where EVs would plug in. The study used two years of data on regular and fast charging events from 500 EVs in Japan. For predicting customers' charging station preferences, the XGBoost model outperformed the other ML classifiers. The recently developed SHAP approach was then used to determine feature importance and the complex nonlinear interaction effects of various station selection behaviours. The results suggest that combining ML models with SHAP yields an interpretable model for predicting EV drivers' charging station preferences.

Using a variational Bayesian Gaussian mixture model and a dataset of more than 220,000 real charging records, Cui et al. [17] provide an in-depth analysis of fast-charging behaviour among EV users at public stations. The clustering model prioritizes charging-related features such as charging energy and charging time, with an emphasis on the time spent at home after charging, to improve charging recommendation approaches and power distribution. Motivated by the potential uses of these charging behaviour clusters, the authors propose a charging behaviour prediction method based on behavioural libraries and stacking regression. Their results show that the approach can accurately predict charging behaviour and assess its relevance.

To predict EV charging durations, Alshammari and Chabaan [18] used the Ant Colony Optimisation metaheuristic to fine-tune the parameters of an XGBoost-based ensemble machine learning approach. For the proposed Ensemble Machine Learning Ant Colony Optimisation (EML_ACO) method, the reported values are 12.4 per cent for R2, 13.3 per cent for MAE, 21.1 per cent for RMSE, and 12.4 per cent for MAPE.

Zhang et al. [19] analyzed three months of real-world travel and charging data from passenger EVs in Beijing to better predict future charging needs. Based on an analysis of more than 60 features spanning the time, space, and energy dimensions of EV behaviour, they propose a "multi-level & multi-dimension" framework for extracting travel and charging characteristics. A two-stage clustering model based on GMM and K-means then classifies EV owners into six subgroups according to their shared and differentiated driving behaviours, preferences, and other factors. In addition, a trip-chain demand forecasting model is built to simulate vehicles in each category, better reflecting real-world conditions. The results of individual charging prediction runs were combined at a spatial resolution of 0.46 km and a temporal resolution of 15 min to demonstrate the model's ability to forecast future charging needs in the real world. The findings may aid in studying the effect of charging behaviour on grid demand and in developing more effective charging infrastructure.

Liu et al. [20] introduce a method based on stochastic user equilibrium (SUE) for accurately predicting spatial-temporal charging load in sync with traffic conditions. EV charging loads are predicted using SUE and trip chains to describe more precisely how EVs behave under synchronized traffic conditions. An extended logit-based SUE and an equivalent mathematical model are then proposed to capture traffic conditions, such as junction delays, more accurately. The charging infrastructure is designed to be compatible with a wide range of EVs, and constraints ensure that each trip chain is feasible. The framework is solved iteratively, with steady convergence, using a hybrid approach based on Dijkstra-based K-shortest-path algorithms. Its application to a realistic traffic network demonstrates its practicality, and it accurately predicts overall charging loads and the costs of individual EV trips in scenarios with complex synchronized traffic. In particular, its forecasts remain effective even at high EV penetration levels.

Li et al. [21] provide a comprehensive overview of EV behaviour modelling and its applications in developing algorithms for EV-grid integration. Several models have been developed to capture the complexities of EV adoption, charging decisions, and user acceptance of smart charging infrastructure. Specifically, the article explains existing models covering the temporal, spatial, and energy dimensions and describes the numerous sub-models of charging preference and responsiveness to smart charging. The authors propose a novel EV behaviour modelling paradigm that guides model selection for different application contexts by building model portfolios for time, space, energy consumption, charging preference, and responsiveness. The article also discusses in detail how to model EV behaviour in order to predict EV charging demand and schedule charging. Future research directions are discussed, and in-depth behavioural insights are offered that might be used to develop effective EV behaviour models that advance EV-grid integration.

Koohfar et al. [22] apply machine learning algorithms, including RNNs, LSTMs, Bi-LSTMs, and transformers, to predict EV charging demand. Data collected over five years from 25 public charging stations in the USA confirm the accuracy of this approach. It predicts charging requirements better than other techniques, demonstrating its value for time-series forecasting problems.


3. Methodology

The methodology for estimating charging patterns is described below. We define the problem, describe the dataset, explain the data preprocessing, and present the training strategies for several learning models.

3.1. EV charging behaviour

Let $c$ denote the charging behaviour during a session, where $t_{join}$ is the time the car first plugs in, $t_{discon}$ is the time the car unplugs, and $e$ is the total amount of energy supplied to the car:

$$c = (t_{join},\, t_{discon},\, e) \qquad (1)$$

From the above, the length of the charging session, $\Delta t$, can be expressed as follows:

$$\Delta t = t_{discon} - t_{join} \qquad (2)$$
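A minimal Python sketch of Eqs. (1) and (2), assuming a session is stored as a simple record. The class name `ChargingSession` and the kWh unit for e are illustrative assumptions, not the ACN API's own types.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ChargingSession:
    """Charging behaviour c = (t_join, t_discon, e) from Eq. (1)."""

    t_join: datetime    # time the car plugs in
    t_discon: datetime  # time the car unplugs
    e: float            # total energy delivered (kWh assumed)

    def duration_hours(self) -> float:
        """Session duration from Eq. (2), delta_t = t_discon - t_join, in hours."""
        return (self.t_discon - self.t_join).total_seconds() / 3600.0


session = ChargingSession(datetime(2019, 3, 4, 8, 15), datetime(2019, 3, 4, 17, 45), 12.5)
print(session.duration_hours())  # 9.5
```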

3.2. Dataset description

To forecast charging behaviour, we use weather, traffic, and event data in addition to the charging data. Below is a high-level overview of the datasets we used, focusing on their salient features.

The unpredictability of charging behaviour makes scheduling EV charging especially important in public charging facilities, such as those at shopping malls. This study uses the ACN dataset [23], one of the few publicly accessible datasets for commercial EV charging. It includes charging data from two campus stations (JPL and Caltech). We do not evaluate the JPL station because, unlike the Caltech station, it is restricted to JPL employees. By scanning a QR code with a mobile app, registered users can enter information such as their projected departure time and desired energy. The dataset can be accessed through a web portal and a Python API [24].

Caltech has a small weather station [25], but we did not use it because of missing values and irregularly spaced records for the wind variable. Furthermore, it does not record weather conditions that might affect charging behaviour, such as precipitation and snowfall. Since the weather depends on the actual location of the charging station, we used NASA satellite weather data for that location [26]. Satellite weather data have been compared with ground-station data for accuracy in [27]. Although ground stations have been shown to capture some weather aspects at a given site more precisely, this work does not require pinpoint precision but rather a holistic understanding of how weather affects charging behaviour. For instance, we want to see how different conditions, such as heavy rain versus dry weather, affect charging behaviour.

Reliable historical traffic information for specific routes and regions can be difficult to obtain. Traditional traffic data collection uses both invasive techniques, such as road tubes and piezoelectric sensors, and non-invasive ones, such as microwave radar and video image recognition [28]. These methods do not scale well, and in most cases only certain highways are covered. For instance, Pasadena (the source of the charging data) has an open data site [29] that details the city's daily traffic volume. However, for most routes it reports only traffic counts aggregated over some period, which makes it unsuitable because we need data collected at regular intervals. Furthermore, not all streets and highways are covered. We therefore chose Google Maps traffic data, which has already been used in machine learning applications [30]. The data are obtained by tracking the locations of devices whose users run the app and opt in to sharing their location. To alleviate privacy concerns, the gathered data are de-identified and aggregated before being made public [31]. The information can be retrieved with the Google Maps Distance Matrix API, which returns the travel time between a specified set of origin and destination coordinates for a given departure time. We gathered travel time data for the nine roads and streets closest to the charging station.
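A hedged Python sketch of one Distance Matrix request and of extracting the travel time from its JSON reply. It only builds the URL and parses an already-decoded response, so no network call is made; the coordinates, key, and sample response are placeholders. As we understand the service, it only accepts current or future departure times, so a historical travel-time series has to be collected as time passes.

```python
from urllib.parse import urlencode

# JSON endpoint of the Google Maps Distance Matrix web service.
BASE_URL = "https://maps.googleapis.com/maps/api/distancematrix/json"


def build_request(origin, destination, api_key, departure_time="now"):
    """Return a request URL for one (lat, lng) origin/destination pair."""
    params = {
        "origins": f"{origin[0]},{origin[1]}",
        "destinations": f"{destination[0]},{destination[1]}",
        "departure_time": departure_time,  # "now" or Unix seconds
        "key": api_key,
    }
    return f"{BASE_URL}?{urlencode(params)}"


def travel_time_seconds(response):
    """Traffic-aware travel time from a decoded response, or None on failure."""
    element = response["rows"][0]["elements"][0]
    if element.get("status") != "OK":
        return None
    # duration_in_traffic is only returned for requests that set a departure time.
    leg = element.get("duration_in_traffic", element["duration"])
    return leg["value"]


# Hypothetical, hand-written response used to exercise the parser.
sample = {
    "status": "OK",
    "rows": [{"elements": [{
        "status": "OK",
        "duration": {"value": 540, "text": "9 mins"},
        "duration_in_traffic": {"value": 620, "text": "10 mins"},
    }]}],
}
print(travel_time_seconds(sample))  # 620
```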

Because the station is on the Caltech campus, we examined whether the frequency of campus activities influences charging patterns. Caltech's online calendar [32] was consulted to determine how many events occur per hour. For simplicity, we rounded event times to the nearest hour, so an event beginning at 10:20 a.m. was recorded as beginning at 10 a.m.
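The hourly event counts can be sketched in plain Python as follows. The list of start times is hypothetical, and ties at exactly half past the hour are rounded up, a choice the text does not specify.

```python
from collections import Counter
from datetime import datetime, timedelta


def round_to_hour(ts: datetime) -> datetime:
    """Round to the nearest hour (10:20 -> 10:00, 10:40 -> 11:00; :30 rounds up)."""
    floored = ts.replace(minute=0, second=0, microsecond=0)
    if ts - floored >= timedelta(minutes=30):
        floored += timedelta(hours=1)
    return floored


def events_per_hour(start_times):
    """Count campus events per rounded hour."""
    return Counter(round_to_hour(t) for t in start_times)


starts = [datetime(2019, 5, 1, 10, 20), datetime(2019, 5, 1, 9, 45), datetime(2019, 5, 1, 13, 5)]
counts = events_per_hour(starts)
print(counts[datetime(2019, 5, 1, 10)])  # 2
```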


3.3. Data preprocessing

To guarantee accurate predictions, it is essential to clean and preprocess the dataset, which includes removing anomalies and inaccurate data. Model performance may suffer if outliers are present, and boxplots are frequently used to detect them. Figure 1 shows outliers in the boxplots of both dependent variables. The outliers are not distributed in the same way for the two variables: there are many more extreme values for energy consumption than for session duration. Some cars may consume much more energy even when the session is not very long.

Because such combinations cannot be caught by checking each variable separately, we chose to perform multivariate outlier detection with the isolation forest approach, which builds an ensemble of trees. Observations with short average path lengths across the isolation trees (iTrees) are the ones that stand out [33]. Observations are isolated by randomly selecting a variable and then randomly choosing a split value between that variable's minimum and maximum. The observations are partitioned recursively until each one is isolated, and points that end up with shorter path lengths are likely to be anomalies. Figure 3 depicts the outlier identification for both response variables, with normalized axes. In total, 697 anomalies (4% of all observations) were found.
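To make the path-length idea concrete, here is a compact, dependency-free isolation forest written from scratch for illustration. In practice a library implementation such as scikit-learn's `IsolationForest` would normally be used; the tree count, subsample size, and toy data below are assumptions, not the settings used in this study.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c(n):
    """Average path length of an unsuccessful BST search over n points (normaliser)."""
    if n <= 1:
        return 0.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_itree(points, depth, max_depth, rng):
    """Recursively split on a random variable at a random value between its min and max."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_itree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_itree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node, depth=0):
    """Depth at which x is isolated, plus c(size) for leaves holding several points."""
    if "size" in node:
        return depth + c(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


def anomaly_scores(data, n_trees=100, sample_size=256, seed=0):
    """Scores close to 1 indicate anomalies (short average path length)."""
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_itree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]
    return [2.0 ** (-sum(path_length(x, t) for t in trees) / n_trees / c(psi)) for x in data]


# Toy data: a 7x7 grid of normal points plus one far-away point.
data = [(i * 0.1, j * 0.1) for i in range(7) for j in range(7)] + [(10.0, 10.0)]
scores = anomaly_scores(data)
print(max(range(len(data)), key=scores.__getitem__))  # index of the most anomalous point
```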

We used only registered charging records, i.e. records with user IDs, which make up 97% of the charging data. We used the pytz [34] module in Python to convert the weather data, originally recorded in Coordinated Universal Time (UTC), to the same time zone as the charging records, and we converted temperatures from kelvin to Celsius. For each hour, we then calculated the average weather over the preceding seven hours and over the following ten hours; both window lengths were determined experimentally. This captures how the weather both before and after charging affects the decision to charge; for instance, a shorter charging duration may be explained by heavy snowfall in the preceding hours. The traffic data likewise had to be converted from UTC. We then aggregated the traffic data for the nine roads and streets for each hour, including both the median and the maximum travel times predicted by Google Maps. Finally, we counted the campus events in each hour.
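The three weather steps in this paragraph can be sketched with the Python standard library. Here `zoneinfo` (Python 3.9+) stands in for pytz, and the `America/Los_Angeles` zone is our assumption for Pasadena. Whether the current hour belongs to either averaging window is not stated, so it is excluded from both below.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

LOCAL_TZ = ZoneInfo("America/Los_Angeles")  # assumed local zone of the charging records


def utc_to_local(ts: datetime) -> datetime:
    """Interpret a naive timestamp as UTC and convert it to local time (DST-aware)."""
    return ts.replace(tzinfo=timezone.utc).astimezone(LOCAL_TZ)


def kelvin_to_celsius(kelvin: float) -> float:
    return kelvin - 273.15


def _mean(values):
    return sum(values) / len(values) if values else float("nan")


def window_means(hourly, i, before=7, after=10):
    """Mean of the `before` hours preceding index i and of the `after` hours following it."""
    past = hourly[max(0, i - before):i]
    future = hourly[i + 1:i + 1 + after]
    return _mean(past), _mean(future)


print(utc_to_local(datetime(2019, 1, 15, 20, 0)).hour)  # 12 (PST, UTC-8)
print(window_means(list(range(24)), 10))  # (6.0, 15.5)
```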

The pandas [35] package was used to convert the time-series fields into datetime objects, which made merging the data easier. We then stored the nearest hour of each timestamp so that the weather, traffic, and event data for a given charging record could be retrieved; for instance, a connection time of 22:11 maps to 10 p.m. This makes it straightforward to join the remaining data. Rather than taking the traffic volume at a single hour, we aggregated the traffic from the car's arrival until its departure. For example, for a car that plugged in at 2:00 p.m., traffic from 2:00 p.m. until it unplugged was included. This lets the model learn how changes in traffic volume affect charging behaviour. Similarly, we counted all events from the car's arrival until the end of the day.
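Matching a charging record to hourly features and aggregating over the session can be sketched as follows. The dictionaries `hourly_traffic` and `hourly_events` are hypothetical, keyed by the rounded hour; timestamps are rounded to the nearest hour, as done for the campus events, and summing the hourly traffic values is an assumed aggregation.

```python
from datetime import datetime, timedelta


def round_to_hour(ts: datetime) -> datetime:
    """Nearest hour, e.g. 22:11 -> 22:00."""
    floored = ts.replace(minute=0, second=0, microsecond=0)
    return floored + timedelta(hours=1) if ts - floored >= timedelta(minutes=30) else floored


def traffic_during_session(hourly_traffic, t_join, t_discon):
    """Sum hourly traffic values from the arrival hour to the departure hour, inclusive."""
    hour, end = round_to_hour(t_join), round_to_hour(t_discon)
    total = 0.0
    while hour <= end:
        total += hourly_traffic.get(hour, 0.0)
        hour += timedelta(hours=1)
    return total


def events_until_end_of_day(hourly_events, t_join):
    """Count events from the arrival hour until 23:00 of the same day."""
    start = round_to_hour(t_join)
    last = start.replace(hour=23)
    return sum(n for h, n in hourly_events.items() if start <= h <= last)


day = datetime(2019, 5, 1)
hourly_traffic = {day.replace(hour=h): 10.0 for h in range(24)}
hourly_events = {day.replace(hour=h): 1 for h in range(24)}
print(traffic_during_session(hourly_traffic, day.replace(hour=14, minute=5),
                             day.replace(hour=16, minute=50)))  # 40.0
print(events_until_end_of_day(hourly_events, day.replace(hour=22, minute=11)))  # 2
```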


3.4. Classification using Deep Learning Networks

With the advent of deep residual networks, the representation and learning capacity of deep learning has been substantially enhanced, making this area increasingly popular among classification researchers. When a standard deep convolutional neural network extracts features, a residual structure can be used to minimize the information loss caused by the convolution operations, which significantly improves recognition reliability. DenseNet, a densely connected network model, was proposed at CVPR in 2017. To promote feature reuse, it uses dense connectivity, in which every layer has access to the feature maps of all preceding layers. This makes the model more compact and more robust against overfitting. It also provides implicit deep supervision, because each layer is directly supervised by the loss function through a shortcut path.

3.4.1. Dense Connection

DenseNet is an alternative to traditional connection designs that maximizes information flow during image recognition by employing dense connectivity: in DenseNet, each layer is connected to all layers before it. The goal is to maximize the transfer of information between layers by having each layer take the feature maps of all preceding layers as input, as in Eq. (3).

$$x_l = H_l\left([x_0, x_1, \ldots, x_{l-1}]\right) \qquad (3)$$

where $x_l$ is the output of layer $l$, $x_0, \ldots, x_{l-1}$ are the outputs of the layers that feed layer $l$, $[x_0, x_1, \ldots, x_{l-1}]$ denotes the concatenation of the feature maps produced by the layers before layer $l$, and $H_l$ is a composite function applied to the concatenated features, consisting of batch normalization, a ReLU activation, and a convolution.
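Eq. (3) can be illustrated with a toy NumPy dense block. To stay short, $H_l$ here is a per-channel normalization, ReLU, and a random 1×1 convolution rather than the trained batch normalization, ReLU, and 3×3 convolution of DenseNet; the growth rate of 32 follows DenseNet-121 but is otherwise an assumption. The point is the concatenation: each layer sees every earlier feature map, so the channel count grows by the growth rate per layer.

```python
import numpy as np


def make_layer(in_channels, growth_rate, rng):
    """Toy composite function H_l: normalise each channel, ReLU, then a 1x1 convolution."""
    weights = rng.standard_normal((growth_rate, in_channels)) * 0.1

    def h(x):  # x: (channels, height, width)
        mean = x.mean(axis=(1, 2), keepdims=True)
        std = x.std(axis=(1, 2), keepdims=True) + 1e-5
        activated = np.maximum((x - mean) / std, 0.0)
        return np.tensordot(weights, activated, axes=1)  # (growth_rate, height, width)

    return h


def dense_block(x0, num_layers, growth_rate=32, seed=0):
    """x_l = H_l([x_0, ..., x_{l-1}]); returns the concatenation of all feature maps."""
    rng = np.random.default_rng(seed)
    features = [x0]
    for _ in range(num_layers):
        h = make_layer(sum(f.shape[0] for f in features), growth_rate, rng)
        features.append(h(np.concatenate(features, axis=0)))
    return np.concatenate(features, axis=0)


x0 = np.random.default_rng(1).standard_normal((64, 8, 8))
out = dense_block(x0, num_layers=6)
print(out.shape)  # (256, 8, 8), i.e. 64 + 6 * 32 channels
```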

Figure 2 depicts the overall DenseNet structure. Table 1 displays the DenseNet-121 net topology.

| Network Layer | Kernel Size | Stride | Output |

| Input | - | - | (3D-tensors,3,224,224) |

| Conv2d | 7 | 2 | (3D-tensors,64,112,112) |

| Dense Block (1) | - | - | (3D-tensors,256,112,112) |

| Transition (1) | - | - | (3D-tensors,128,56,56) |

| Dense Block (2) | - | - | (3D-tensors,512,56,56) |

| Transition (2) | - | - | (3D-tensors,256,28,28) |

| Dense Block (3) | - | - | (3D-tensors,1024,28,28) |

| Transition (3) | - | - | (3D-tensors,512,14,14) |

| Dense Block (4) | - | - | (3D-tensors,1024,14,14) |

| AvgPool | 1 | 1 | (3D-tensors,1024,1,1) |

| Classification | - | - | (1024, class_num) |

3.4.2. Transition

In DenseNet, downsampling is handled by the transition module. To reduce the number of channels produced by the preceding dense block, the transition module performs two operations: a 1×1 convolution that compresses the channels and 2×2 average pooling that halves the spatial resolution.
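A NumPy sketch of the two transition operations, a 1×1 convolution that compresses the channels followed by non-overlapping 2×2 average pooling. The random weights and the omission of batch normalization are simplifications for illustration; the input and output shapes match Transition (1) in Table 1.

```python
import numpy as np


def conv1x1(x, weights):
    """1x1 convolution: a per-pixel linear map from C_in to C_out channels."""
    return np.tensordot(weights, x, axes=1)  # (C_out, C_in) x (C_in, H, W) -> (C_out, H, W)


def avg_pool_2x2(x):
    """Non-overlapping 2x2 average pooling with stride 2 (an odd last row/column is dropped)."""
    c, h, w = x.shape
    x = x[:, : h - h % 2, : w - w % 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def transition(x, out_channels, seed=0):
    rng = np.random.default_rng(seed)
    weights = rng.standard_normal((out_channels, x.shape[0])) * 0.1
    return avg_pool_2x2(conv1x1(x, weights))


out = transition(np.ones((256, 112, 112)), 128)
print(out.shape)  # (128, 56, 56)
```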

3.4.3. Attentional Mechanisms

When processing an image, an attention mechanism lets the network focus on the parts of the image that have the most impact on the final result, so a convolutional neural network can dynamically adjust its focus. Computer vision and natural language processing have both benefited from attention methods, and recurrent neural networks with attention have found widespread use in sequence models. There are two main categories of attention mechanisms: channel attention and spatial attention. This work employs the Convolutional Block Attention Module (CBAM), which combines both.

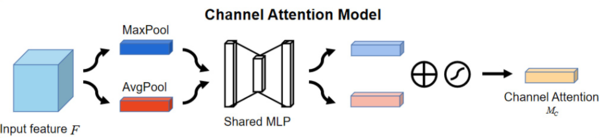

Given an intermediate feature map, CBAM infers attention weights sequentially along the channel and spatial dimensions, and the original feature map is then multiplied by these weights for adaptive refinement. Figure 4 depicts the CBAM module's two sub-modules, the channel attention module and the spatial attention module.

3.4.4. The Channel Attention Mechanism Unit

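The channel attention mechanism first takes the input feature map $F$ and passes it through global max pooling and global average pooling to obtain two feature maps, $F^c_{max}$ and $F^c_{avg}$. Both are fed to a shared network: a two-layer multilayer perceptron (MLP) with $C/r$ neurons in the first layer and $C$ neurons in the second, where $C$ is the number of input channels and $r$ is the reduction ratio. The two outputs of the shared network are summed element-wise and passed through a sigmoid function to produce the final channel attention map $M_C(F)$, as given in Eq. (4).

$$M_C(F) = \sigma\left(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\right) = \sigma\left(W_1\left(W_0\left(F^c_{avg}\right)\right) + W_1\left(W_0\left(F^c_{max}\right)\right)\right) \qquad (4)$$

where $\sigma$ denotes the sigmoid function; $W_0 \in \mathbb{R}^{C/r \times C}$ and $W_1 \in \mathbb{R}^{C \times C/r}$ are the MLP weights, shared by both inputs; and $W_0$ is followed by the ReLU function.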
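A NumPy sketch of Eq. (4) for a single feature map of shape (C, H, W). Random weights stand in for the learned $W_0$ and $W_1$, biases are omitted as in Eq. (4), and the reduction ratio $r = 4$ is an arbitrary choice for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(F, W0, W1):
    """Eq. (4): M_C(F) = sigmoid(W1 ReLU(W0 F_avg) + W1 ReLU(W0 F_max)).

    F: (C, H, W); W0: (C // r, C); W1: (C, C // r). Returns weights of shape (C, 1, 1).
    """
    f_avg = F.mean(axis=(1, 2))  # global average pooling -> (C,)
    f_max = F.max(axis=(1, 2))   # global max pooling -> (C,)

    def shared_mlp(v):
        return W1 @ np.maximum(W0 @ v, 0.0)  # ReLU after W0

    return sigmoid(shared_mlp(f_avg) + shared_mlp(f_max))[:, None, None]


rng = np.random.default_rng(0)
C, r = 16, 4
F = rng.standard_normal((C, 8, 8))
W0 = rng.standard_normal((C // r, C)) * 0.1
W1 = rng.standard_normal((C, C // r)) * 0.1
refined = channel_attention(F, W0, W1) * F  # broadcast over H and W
print(refined.shape)  # (16, 8, 8)
```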

3.4.5. Spatial Attention Mechanism Module

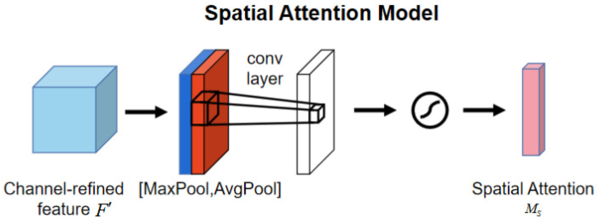

The feature map produced by the channel attention module is used as the input to the spatial attention mechanism. Max pooling and average pooling are first applied along the channel axis, producing two 2D feature maps, $F^s_{max}$ and $F^s_{avg}$. These are concatenated along the channel dimension, and a 7×7 convolution is then applied to reduce them to a single channel. A sigmoid function yields the spatial attention map $M_S(F)$, as given in Eq. (5).

$$M_S(F) = \sigma\left(f^{7\times7}\left([\mathrm{AvgPool}(F);\, \mathrm{MaxPool}(F)]\right)\right) = \sigma\left(f^{7\times7}\left([F^s_{avg};\, F^s_{max}]\right)\right) \qquad (5)$$

where $\sigma$ denotes the sigmoid function and $f^{7\times7}$ denotes a convolution with a 7×7 kernel.
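A NumPy sketch of Eq. (5). The convolution is written out as an explicit zero-padded loop (cross-correlation, as in deep learning frameworks) to keep it dependency-free, and the random 7×7 kernel stands in for the learned filter.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv2d_same(x, kernel):
    """Single-output 2-D convolution with zero 'same' padding.

    x: (C_in, H, W); kernel: (C_in, k, k) with odd k. Returns (H, W).
    """
    _, h, w = x.shape
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return out


def spatial_attention(F, kernel):
    """Eq. (5): M_S(F) = sigmoid(f7x7([AvgPool(F); MaxPool(F)])), pooling along channels."""
    f_avg = F.mean(axis=0, keepdims=True)  # (1, H, W)
    f_max = F.max(axis=0, keepdims=True)   # (1, H, W)
    stacked = np.concatenate([f_avg, f_max], axis=0)  # channel concatenation -> (2, H, W)
    return sigmoid(conv2d_same(stacked, kernel))[None, :, :]


rng = np.random.default_rng(0)
F = rng.standard_normal((16, 8, 8))
kernel = rng.standard_normal((2, 7, 7)) * 0.1
refined = spatial_attention(F, kernel) * F  # broadcast over channels
print(refined.shape)  # (16, 8, 8)
```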

3.4.6. Res-Attention

In this research, we built on the CBAM module to create a new attention mechanism, which we call the Res-Attention module. It adds a residual structure to improve reliability. When a feature map enters the Res-Attention module, a copy of it is preserved through a shortcut connection. After the feature map passes through the attention sub-modules, their output is combined with the preserved information, and the module outputs the combined feature map. Figure 5 depicts the internal structure of the Res-Attention module.
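Combining the channel and spatial sub-modules with a shortcut gives a minimal sketch of the Res-Attention idea. The text does not say how the preserved and attended features are combined; element-wise addition, as in residual networks, is assumed here, and random weights replace the learned parameters.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(F, W0, W1):
    """Channel weights of shape (C, 1, 1) from a shared two-layer MLP (Eq. 4)."""
    def mlp(v):
        return W1 @ np.maximum(W0 @ v, 0.0)
    return sigmoid(mlp(F.mean(axis=(1, 2))) + mlp(F.max(axis=(1, 2))))[:, None, None]


def spatial_attention(F, kernel):
    """Spatial weights of shape (1, H, W) from a same-padded k x k convolution (Eq. 5)."""
    stacked = np.stack([F.mean(axis=0), F.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(stacked, ((0, 0), (p, p), (p, p)))
    _, h, w = stacked.shape
    conv = np.array([[np.sum(padded[:, i:i + k, j:j + k] * kernel) for j in range(w)]
                     for i in range(h)])
    return sigmoid(conv)[None, :, :]


def res_attention(F, W0, W1, kernel):
    """Res-Attention sketch: CBAM (channel, then spatial) plus an identity shortcut."""
    shortcut = F                                            # information preserved on entry
    refined = channel_attention(F, W0, W1) * F              # channel refinement
    refined = spatial_attention(refined, kernel) * refined  # spatial refinement
    return shortcut + refined                               # assumed combination: element-wise sum


rng = np.random.default_rng(0)
F = rng.standard_normal((16, 8, 8))
out = res_attention(F, rng.standard_normal((4, 16)) * 0.1,
                    rng.standard_normal((16, 4)) * 0.1,
                    rng.standard_normal((2, 7, 7)) * 0.1)
print(out.shape)  # (16, 8, 8)
```

With all weights set to zero, both attention maps equal 0.5, so the module returns 1.25 times its input, which is a quick way to see the shortcut at work.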

3.4.7. DRA-Net

In this research, we designed and built the DRA-Net model by integrating the Res-Attention module into the network to better predict charging behaviour. The DRA-Net architecture consists of interconnected, layered modules: Dense Blocks, Res-Attention modules, and Transition layers. A Res-Attention module is placed after each Dense Block; the first three Res-Attention modules are each followed by a Transition module, whereas the fourth Res-Attention module is linked directly to the classification layer. Finally, we adjusted the output features of the classification layer.

In the DRA-Net model, the feature maps of each Dense Block are sent directly to a Res-Attention module, where they are processed by the channel attention and spatial attention sub-modules. Figure 6 depicts the overall layout of the DRA-Net system.
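The module ordering can be checked with a small channel-bookkeeping sketch in Python. It assumes the standard DenseNet-121 settings (block sizes 6, 12, 24, 16, growth rate 32, 64 initial channels, transitions halving the channels), which reproduce the channel counts in Table 1, and that Res-Attention leaves the channel count unchanged; `num_classes` is a hypothetical placeholder.

```python
def dra_net_plan(block_config=(6, 12, 24, 16), growth_rate=32, init_channels=64, num_classes=2):
    """Return (layer name, output channels) pairs in DRA-Net order."""
    plan = [("Conv2d 7x7, stride 2", init_channels)]
    channels = init_channels
    for idx, num_layers in enumerate(block_config, start=1):
        channels += num_layers * growth_rate               # dense connectivity, Eq. (3)
        plan.append((f"Dense Block ({idx})", channels))
        plan.append((f"Res-Attention ({idx})", channels))  # attention keeps the shape
        if idx < len(block_config):                        # no transition after the 4th module
            channels //= 2                                 # transition halves the channels
            plan.append((f"Transition ({idx})", channels))
    plan.append(("AvgPool", channels))
    plan.append(("Classification", num_classes))
    return plan


for name, ch in dra_net_plan():
    print(f"{name:<22}{ch}")
```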


3.4.8. Hyper-parameter tuning using Artificial Butterfly Algorithm

Mate-locating behaviour has been studied extensively in the context of life-history optimization [36], and it is a productive source of ideas for new optimization techniques; the speckled wood butterfly is a particularly useful example. We adopt the Artificial Butterfly Optimisation (ABO) technique, which is inspired by the mating behaviour of speckled wood butterflies. Table 2 gives the pseudo code of the ABO algorithm. ABO follows a set of rules that idealize the way butterflies locate a mate:

- 1. To increase his chances of mating with a female, a male butterfly flies to a better location, known as a sunspot.

- 2. Each sunspot butterfly continually looks for a better location in a neighbouring sunspot.

- 3. Each canopy butterfly continually flies towards a sunspot butterfly to contend for its sunspot.


As shown in Table 2, the initial butterfly population is split in half according to fitness, and each group is given its own flight strategy. This resembles niching strategies: to locate multiple optimal solutions efficiently, a niching approach is typically used to modify a classical algorithm so that it maintains several distinct groups in the population. There are three distinct flight modes: sunspot, canopy, and free flight, and various flight strategies are available for these modes. By assigning a different flight strategy to each mode, ABO can produce a new algorithm variant.

Here, we outline three ways a virtual butterfly may fly. In this study, the position of a virtual butterfly is represented by a D-dimensional vector. According to Eq. (6), each butterfly moves relative to a randomly chosen neighbour in one randomly selected dimension.

$$x_{i,j}^{t+1} = x_{i,j}^{t} + \left(x_{i,j}^{t} - x_{k,j}^{t}\right) \times \mathrm{rand}() \qquad (6)$$

where $x_i^t$ is the $i$th butterfly, $j$ is a randomly selected dimension index in $\{1, \ldots, D\}$, $t$ is the iteration number, rand() generates a random number, and $k$ is a randomly selected butterfly with $k \neq i$.

Using Eq. (7), each butterfly flies towards a randomly chosen neighbour. This strategy is applied in ABO's sunspot flight mode.

$$x_i^{t+1} = x_i^t + \frac{x_k^t - x_i^t}{\lVert x_k^t - x_i^t \rVert} \times (ub - lb) \times step \times \mathrm{rand}() \qquad (7)$$

where $x_i^t$ is the $i$th virtual butterfly, $t$ is the iteration number, $x_i^{t+1}$ is the new position of the $i$th virtual butterfly, $\lVert \cdot \rVert$ denotes the distance, rand() produces a random number in (0, 1), $k$ is a randomly selected butterfly with $k \neq i$, $step$ is the flying step, and $ub$ and $lb$ are the upper and lower bounds of the search space, which depend on the specific problem.

According to Eq. (8), each butterfly moves with respect to a randomly chosen neighbour. The same kind of update is used to search for new positions in the exploration phase, and ABO employs this strategy in its free-flight mode.

$$x_i^{t+1} = x_k^t - \left(2a \cdot \mathrm{rand}() - a\right) \cdot D \qquad (8)$$

where $x_i^t$ is the $i$th virtual butterfly, $a$ is decreased linearly over the course of the iterations, rand() produces a random number in (0, 1), $k$ is a randomly selected butterfly, and $D$ is a random value computed by Eq. (9).

$$D = \left| 2 \cdot \mathrm{rand}() \cdot x_k^t - x_i^t \right| \qquad (9)$$

where $i$ denotes the butterfly being updated, $k$ is a randomly selected butterfly, and rand() produces a random number between 0 and 1.

In Eq. (7), the parameter step is not fixed; it is reduced according to Eq. (10). Step decreases progressively over time: larger step values in the early phases maintain diversity, while smaller step values in the final phase avoid large jumps and give better convergence.

(10)

where the schedule depends on the current number of fitness evaluations and the maximum number of fitness evaluations.
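The sketch below puts the three flight strategies together into a small ABO minimiser in pure Python. Several details are our assumptions rather than statements from the text: rand() in Eq. (6) is drawn from [-1, 1]; $a$ in Eq. (8) decreases linearly from 2 to 0; step follows the linear schedule step = 1 - (1 - step_e) · FE / MaxFE, where FE and MaxFE are the current and maximum numbers of fitness evaluations and step_e is a hypothetical final value; sunspot butterflies use Eq. (7), canopy butterflies use Eq. (6) towards a sunspot butterfly and fall back to free flight (Eq. (8)) when that move does not improve them; and moves are accepted greedily.

```python
import random


def abo_minimize(f, lb, ub, dim, pop_size=20, max_fe=3000, step_e=0.05, seed=0):
    """Minimise f over [lb, ub]^dim with a simplified Artificial Butterfly Optimisation."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    fe = pop_size  # fitness evaluations used so far

    def other(i):
        return rng.choice([m for m in range(pop_size) if m != i])

    def eq6(x, xk):
        # Eq. (6): perturb one random dimension j relative to neighbour k.
        y = list(x)
        j = rng.randrange(dim)
        y[j] = x[j] + (x[j] - xk[j]) * rng.uniform(-1.0, 1.0)
        return y

    def eq7(x, xk, step):
        # Eq. (7): move towards neighbour k along the unit direction.
        diff = [b - a for a, b in zip(x, xk)]
        norm = sum(d * d for d in diff) ** 0.5 or 1.0
        scale = step * (ub - lb) * rng.random() / norm
        return [a + scale * d for a, d in zip(x, diff)]

    def eq8(x, xk, a):
        # Eqs. (8)-(9): D = |2 rand() x_k - x_i|, new x = x_k - (2a rand() - a) D.
        new = []
        for xi, xkj in zip(x, xk):
            d = abs(2.0 * rng.random() * xkj - xi)
            new.append(xkj - (2.0 * a * rng.random() - a) * d)
        return new

    def try_move(i, candidate):
        # Clip to the bounds, evaluate, and keep the move only if it improves f.
        nonlocal fe
        candidate = [min(max(v, lb), ub) for v in candidate]
        value = f(candidate)
        fe += 1
        if value < fit[i]:
            pop[i], fit[i] = candidate, value
            return True
        return False

    while fe < max_fe:
        progress = fe / max_fe
        step = 1.0 - (1.0 - step_e) * progress  # assumed form of Eq. (10)
        a = 2.0 * (1.0 - progress)              # assumed linear decrease from 2 to 0
        order = sorted(range(pop_size), key=fit.__getitem__)
        sunspot, canopy = order[: pop_size // 2], order[pop_size // 2:]
        for i in sunspot:                        # sunspot flight, Eq. (7)
            try_move(i, eq7(pop[i], pop[other(i)], step))
        for i in canopy:                         # canopy flight, then free flight
            if not try_move(i, eq6(pop[i], pop[rng.choice(sunspot)])):
                try_move(i, eq8(pop[i], pop[other(i)], a))

    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]


def sphere(x):
    return sum(v * v for v in x)


best_x, best_f = abo_minimize(sphere, lb=-5.0, ub=5.0, dim=3)
print(best_f)
```

For hyper-parameter tuning, f would train and validate the network for a candidate vector (for example, the base-10 logarithm of the learning rate) and return the validation error; that objective is not shown here.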

4. Results and Discussion

To account for seasonal effects during training, we used only ACN data from 2019. We used 80% of the data to train the models and 20% to assess their performance. During training, we used K-fold cross-validation, in which each model is trained K times, each time holding out a different 1/K of the training instances for evaluation. We used the standard value K = 10. The results for session duration are reported in Table 3.
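The evaluation protocol can be sketched with index arithmetic in plain Python. The text does not say whether the 80/20 split was random or chronological; a seeded random shuffle is assumed here, and the folds are formed by striding through the shuffled training indices.

```python
import random


def train_test_split_indices(n, test_fraction=0.2, seed=0):
    """Shuffle 0..n-1 and hold out test_fraction of them for testing."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = round(n * test_fraction)
    return indices[n_test:], indices[:n_test]


def k_fold(indices, k=10):
    """Yield (train, validation) pairs; each index is validated exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i, validation in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, validation


train_idx, test_idx = train_test_split_indices(1000)
for train, validation in k_fold(train_idx, k=10):
    pass  # fit on `train`, score on `validation`
print(len(train_idx), len(test_idx), len(train), len(validation))  # 800 200 720 80
```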

| Model | Precision (%) | Recall (%) | Accuracy (%) | F1 Score |

| ResNet-101 | 97.67 | 97.82 | 97.63 | 97.7 |

| DenseNet-121 | 97.87 | 97.87 | 97.86 | 97.8 |

| AlexNet | 91.65 | 92.62 | 90.37 | 91.16 |

| Vgg-19 | 93.67 | 93.30 | 93.36 | 93.04 |

| MobileNet | 95.07 | 94.57 | 94.65 | 94.8 |

| BOA-DRA-Net | 99.72 | 99.70 | 99.71 | 99.06 |

Table 3 reports the test scores for session duration. AlexNet achieved an accuracy of 90.37%, a precision of 91.65%, a recall of 92.62%, and an F1-score of 91.16. Vgg-19 achieved an accuracy of 93.36%, a precision of 93.67%, a recall of 93.30%, and an F1-score of 93.04. MobileNet achieved an accuracy of 94.65%, a precision of 95.07%, a recall of 94.57%, and an F1-score of 94.8. ResNet-101 achieved an accuracy of 97.63%, a precision of 97.67%, a recall of 97.82%, and an F1-score of 97.7. DenseNet-121 achieved an accuracy of 97.86%, a precision of 97.87%, a recall of 97.87%, and an F1-score of 97.8. Finally, BOA-DRA-Net achieved an accuracy of 99.71%, a precision of 99.72%, a recall of 99.70%, and an F1-score of 99.06.
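For reference, the four columns of Tables 3 and 4 can be computed from a confusion matrix as shown below. How the per-class scores were averaged is not stated; macro averaging is assumed in this sketch, and the 2×2 matrix is a made-up example. Under macro averaging, the F1 column is the mean of the per-class F1 scores, which in general differs slightly from the harmonic mean of the averaged precision and recall.

```python
def macro_metrics(cm):
    """Return (precision, recall, accuracy, F1), macro-averaged over classes.

    cm[i][j] counts samples of true class i predicted as class j.
    """
    n = len(cm)
    precisions, recalls, f1s = [], [], []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[row][c] for row in range(n))  # column sum
        actual = sum(cm[c])                              # row sum
        prec = tp / predicted if predicted else 0.0
        rec = tp / actual if actual else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    accuracy = sum(cm[c][c] for c in range(n)) / sum(map(sum, cm))
    return sum(precisions) / n, sum(recalls) / n, accuracy, sum(f1s) / n


p, r, acc, f1 = macro_metrics([[8, 2], [1, 9]])
print(round(p, 4), round(r, 4), round(acc, 4), round(f1, 4))  # 0.8535 0.85 0.85 0.8496
```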

Table 4. Test scores for energy consumption

| Model | Precision (%) | Recall (%) | Accuracy (%) | F1 Score |
|---|---|---|---|---|
| AlexNet | 87.87 | 85.95 | 83.88 | 86.18 |
| ResNet-101 | 90.82 | 90.25 | 90.10 | 90.15 |
| DenseNet-121 | 92.75 | 92.82 | 92.52 | 92.7 |
| VGG-19 | 85.57 | 83.57 | 84.20 | 84.55 |
| MobileNet | 87.90 | 85.67 | 85.49 | 86.67 |
| BOA-DRA-Net | 97.95 | 97.87 | 97.86 | 97.49 |

Table 4 reports the test scores for energy consumption. AlexNet achieved an accuracy of 83.88%, a precision of 87.87%, a recall of 85.95%, and an F1-score of 86.18. VGG-19 achieved an accuracy of 84.20%, a precision of 85.57%, a recall of 83.57%, and an F1-score of 84.55. MobileNet achieved an accuracy of 85.49%, a precision of 87.90%, a recall of 85.67%, and an F1-score of 86.67. ResNet-101 achieved an accuracy of 90.10%, a precision of 90.82%, a recall of 90.25%, and an F1-score of 90.15. DenseNet-121 achieved an accuracy of 92.52%, a precision of 92.75%, a recall of 92.82%, and an F1-score of 92.7. BOA-DRA-Net again performed best, with an accuracy of 97.86%, a precision of 97.95%, a recall of 97.87%, and an F1-score of 97.49.

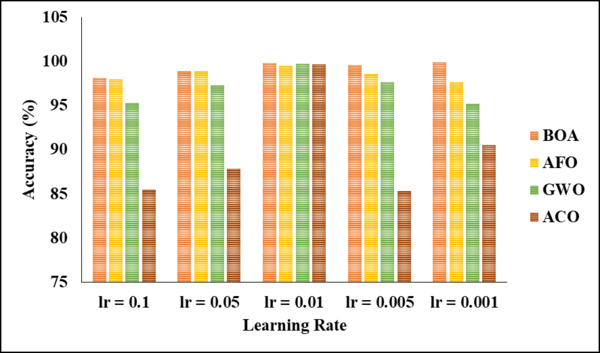

Table 5. Accuracy (%) of different optimization algorithms at different learning rates

| Optimization Algorithm | lr = 0.1 | lr = 0.05 | lr = 0.01 | lr = 0.005 | lr = 0.001 |
|---|---|---|---|---|---|
| GO | 95.25 | 97.26 | 99.71 | 97.68 | 95.21 |
| ACO | 85.44 | 87.82 | 99.65 | 85.32 | 90.56 |
| BOA | 98.11 | 98.90 | 99.80 | 99.56 | 99.88 |
| AFO | 97.97 | 98.85 | 99.53 | 98.55 | 97.66 |

Table 5 compares the accuracy (%) obtained with different optimization algorithms and learning rates (lr). With BOA, the accuracy was 98.11 at lr = 0.1, 98.90 at lr = 0.05, 99.80 at lr = 0.01, 99.56 at lr = 0.005, and 99.88 at lr = 0.001. With AFO, the accuracy was 97.97 at lr = 0.1, 98.85 at lr = 0.05, 99.53 at lr = 0.01, 98.55 at lr = 0.005, and 97.66 at lr = 0.001. With GO, the accuracy was 95.25 at lr = 0.1, 97.26 at lr = 0.05, 99.71 at lr = 0.01, 97.68 at lr = 0.005, and 95.21 at lr = 0.001. With ACO, the accuracy was 85.44 at lr = 0.1, 87.82 at lr = 0.05, 99.65 at lr = 0.01, 85.32 at lr = 0.005, and 90.56 at lr = 0.001. BOA gave the highest accuracy at every learning rate, peaking at 99.88 with lr = 0.001, while the other three algorithms all peaked at lr = 0.01.
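To make the comparison in Table 5 concrete, the short sketch below selects the best learning rate for each optimizer and measures its sensitivity to the learning rate as the gap between its best and worst accuracy. The accuracy values are copied from Table 5; the dictionary and helper names are hypothetical.

```python
# Accuracy (%) from Table 5, keyed by optimizer, then learning rate.
TABLE5 = {
    "GO":  {0.1: 95.25, 0.05: 97.26, 0.01: 99.71, 0.005: 97.68, 0.001: 95.21},
    "ACO": {0.1: 85.44, 0.05: 87.82, 0.01: 99.65, 0.005: 85.32, 0.001: 90.56},
    "BOA": {0.1: 98.11, 0.05: 98.90, 0.01: 99.80, 0.005: 99.56, 0.001: 99.88},
    "AFO": {0.1: 97.97, 0.05: 98.85, 0.01: 99.53, 0.005: 98.55, 0.001: 97.66},
}


def best_learning_rate(scores):
    """Return (learning rate, accuracy) with the highest accuracy."""
    lr = max(scores, key=scores.get)
    return lr, scores[lr]


def spread(scores):
    """Best minus worst accuracy: a simple measure of learning-rate sensitivity."""
    return max(scores.values()) - min(scores.values())


for name, scores in TABLE5.items():
    lr, acc = best_learning_rate(scores)
    print(f"{name}: best lr = {lr}, accuracy = {acc:.2f}, spread = {spread(scores):.2f}")
```

On these numbers BOA varies by about 1.77 points across the five learning rates, compared with about 14.33 points for ACO, so it is also the least sensitive to the choice of learning rate.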

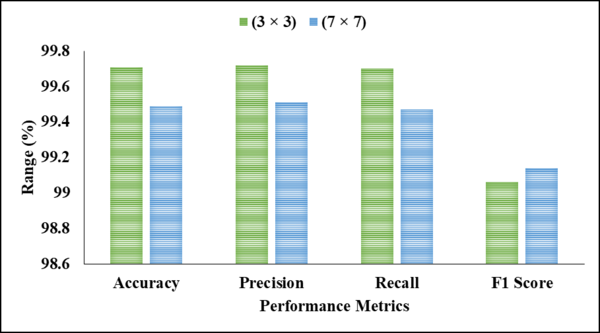

Table 6. Comparison of different convolution kernel sizes

| Kernel Size | Precision (%) | Recall (%) | Accuracy (%) | F1 Score |
|---|---|---|---|---|
| (3 × 3) | 99.72 | 99.70 | 99.71 | 99.06 |
| (7 × 7) | 99.51 | 99.47 | 99.49 | 99.14 |

Table 6 compares different convolution kernel sizes. With a (3 × 3) kernel, the model achieved an accuracy of 99.71%, a precision of 99.72%, a recall of 99.70%, and an F1-score of 99.06. With a (7 × 7) kernel, it achieved an accuracy of 99.49%, a precision of 99.51%, a recall of 99.47%, and an F1-score of 99.14. The (3 × 3) kernel therefore gives slightly higher accuracy, precision, and recall, while the (7 × 7) kernel gives a slightly higher F1-score.
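Since the two kernel sizes in Table 6 score almost identically, their cost is a useful tie-breaker. The sketch below counts the parameters and FLOPs of a single 2-D convolution; the 64-channel layer with a 56 × 56 output map is a hypothetical example, not an actual DRA-Net layer, and FLOPs are counted as two per multiply-accumulate (conventions differ, and many papers report multiply-accumulates directly).

```python
def conv2d_params(k, c_in, c_out, bias=True):
    """Weights of a k x k convolution: k*k*c_in*c_out, plus c_out biases."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def conv2d_flops(k, c_in, c_out, h_out, w_out):
    """FLOPs counted as 2 per multiply-accumulate; bias additions are ignored."""
    return 2 * k * k * c_in * c_out * h_out * w_out


# Hypothetical layer: 64 -> 64 channels, 56 x 56 output feature map.
for k in (3, 7):
    params = conv2d_params(k, 64, 64)
    gflops = conv2d_flops(k, 64, 64, 56, 56) / 1e9
    print(f"{k}x{k}: {params} parameters, {gflops:.3f} GFLOPs")
```

A 7 × 7 kernel costs 49/9 ≈ 5.4 times as many weights and FLOPs as a 3 × 3 kernel with the same channels. Three stacked 3 × 3 convolutions reach the same 7 × 7 receptive field with 27 rather than 49 weights per input/output channel pair, which is the usual argument for small kernels.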

Table 7. Comparison of computational complexity

| Model | Parameters (M) | FLOPs (G) |
|---|---|---|
| AlexNet | 61.10 | 0.71 |
| ResNet-101 | 44.55 | 7.87 |
| DenseNet-121 | 7.98 | 2.90 |
| VGG-19 | 143.67 | 19.63 |
| MobileNet | 3.50 | 0.33 |
| BOA-DRA-Net | 7.56 | 2.90 |

Table 7 compares the computational complexity of the models in terms of parameter count and floating-point operations (FLOPs). AlexNet has 61.10 M parameters and requires 0.71 GFLOPs; VGG-19 has 143.67 M parameters and 19.63 GFLOPs; MobileNet has 3.50 M parameters and 0.33 GFLOPs; ResNet-101 has 44.55 M parameters and 7.87 GFLOPs; DenseNet-121 has 7.98 M parameters and 2.90 GFLOPs; and BOA-DRA-Net has 7.56 M parameters and 2.90 GFLOPs. BOA-DRA-Net thus matches the FLOPs of DenseNet-121 with slightly fewer parameters, and among the compared models only MobileNet has fewer parameters.
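The parameter counts in Table 7 match the usual figures for these architectures in millions of parameters (for example, about 61 M for AlexNet and 144 M for VGG-19), not megabytes; the memory needed to store them depends on numeric precision. A minimal sketch, assuming dense float32 (4-byte) or float16 (2-byte) weights and ignoring buffers, optimizer state, and activations; the helper name is hypothetical.

```python
def weight_storage(n_params_millions, bytes_per_param=4):
    """Return (MB, MiB) needed to store the weights alone.

    MB uses 10**6 bytes and MiB uses 2**20 bytes.
    """
    n_bytes = n_params_millions * 1_000_000 * bytes_per_param
    return n_bytes / 1e6, n_bytes / 2**20


# Parameter counts (millions) from Table 7.
PARAMS_M = {"AlexNet": 61.10, "ResNet-101": 44.55, "DenseNet-121": 7.98,
            "VGG-19": 143.67, "MobileNet": 3.50, "BOA-DRA-Net": 7.56}

for name, n in PARAMS_M.items():
    mb32, _ = weight_storage(n)                      # float32 weights
    mb16, _ = weight_storage(n, bytes_per_param=2)   # float16 weights
    print(f"{name:13s} {mb32:8.2f} MB (fp32) {mb16:8.2f} MB (fp16)")
```

For example, the 7.56 M parameters of BOA-DRA-Net occupy about 30.24 MB (about 28.84 MiB) in float32, roughly four times the bare parameter count.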

5. Conclusion and Future Work

In this paper, we proposed a methodology for predicting EV session duration and energy consumption, two of the charging behaviours most relevant to scheduling. Unlike other efforts, we used traffic, event, and weather data in addition to historical charging session data. To strengthen the residual connections and make them better suited to the recognition task, we combined the Res-Attention unit with a deep residual network to form DRA-Net and tuned its hyper-parameters with BOA, yielding BOA-DRA-Net. The obtained results outperform those of prior research in prediction performance. The study also demonstrates the value of traffic and weather information for charging behaviour prediction and substantially improves charging behaviour forecasts on the ACN dataset. The main limitation of the study is the limited scope of the dataset, which prevented rigorous model comparisons and may affect the validity and generalizability of the results. Addressing this will require further data collection in future work. Data augmentation methods could also be explored to make the dataset larger and more representative, and less resource-intensive algorithms may reduce the need for large amounts of data.

Conflicts of interest: none.

Submission declaration and verification

The work described has not been published previously.

Authors’ Contribution Statement

Dr. Mohandas and Dr. Rajesh G conceived of the presented idea. Arunadevi Thirumalraj developed the theory and performed the computations. Dr. Mohandas and Dr. Rajesh G verified the analytical methods. All authors discussed the results and contributed to the final manuscript.

References

[1] Kavianipour, M., Fakhrmoosavi, F., Singh, H., Ghamami, M., Zockaie, A., Ouyang, Y., & Jackson, R. (2021). Electric vehicle fast charging infrastructure planning in urban networks considering daily travel and charging behavior. Transportation Research Part D: Transport and Environment, 93, 102769.

[2] Wang, Y., Yao, E., & Pan, L. (2021). Electric vehicle drivers’ charging behavior analysis considering heterogeneity and satisfaction. Journal of Cleaner Production, 286, 124982.

[3] Cao, W., Wan, Y., Wang, L., & Wu, Y. (2021). Location and capacity determination of charging station based on electric vehicle charging behavior analysis. IEEJ Transactions on Electrical and Electronic Engineering, 16(6), 827-834.

[4] Xiong, Y., An, B., & Kraus, S. (2021). Electric vehicle charging strategy study and the application on charging station placement. Autonomous Agents and Multi-Agent Systems, 35, 1-19.

[5] Baghali, S., Hasan, S., & Guo, Z. (2021, April). Analyzing the travel and charging behavior of electric vehicles-a data-driven approach. In 2021 IEEE Kansas Power and Energy Conference (KPEC) (pp. 1-5). IEEE.

[6] Calearo, L., Marinelli, M., & Ziras, C. (2021). A review of data sources for electric vehicle integration studies. Renewable and Sustainable Energy Reviews, 151, 111518.

[7] Lee, Z. J., Lee, G., Lee, T., Jin, C., Lee, R., Low, Z., ... & Low, S. H. (2021). Adaptive charging networks: A framework for smart electric vehicle charging. IEEE Transactions on Smart Grid, 12(5), 4339-4350.

[8] Shahriar, S., Al-Ali, A. R., Osman, A. H., Dhou, S., & Nijim, M. (2021). Prediction of EV charging behaviour using machine learning. IEEE Access, 9, 111576-111586.

[9] Adenaw, L., & Lienkamp, M. (2021). Multi-criteria, co-evolutionary charging behavior: An agent-based simulation of urban electromobility. World Electric Vehicle Journal, 12(1), 18.

[10] Muratori, M., Alexander, M., Arent, D., Bazilian, M., Cazzola, P., Dede, E. M., ... & Ward, J. (2021). The rise of electric vehicles—2020 status and future expectations. Progress in Energy, 3(2), 022002.

[11] Tan, Z., Yang, Y., Wang, P., & Li, Y. (2021). Charging behavior analysis of new energy vehicles. Sustainability, 13(9), 4837.

[12] Li, S., Hu, W., Cao, D., Dragičević, T., Huang, Q., Chen, Z., & Blaabjerg, F. (2021). Electric vehicle charging management based on deep reinforcement learning. Journal of Modern Power Systems and Clean Energy, 10(3), 719-730.

[13] Alkawsi, G., Baashar, Y., Abbas U, D., Alkahtani, A. A., & Tiong, S. K. (2021). Review of renewable energy-based charging infrastructure for electric vehicles. Applied Sciences, 11(9), 3847.

[14] Barthel, V., Schlund, J., Landes, P., Brandmeier, V., & Pruckner, M. (2021). Analyzing the charging flexibility potential of different electric vehicle fleets using real-world charging data. Energies, 14(16), 4961.

[15] Bertin, L., Lorenzo, K., & Boukir, K. (2021, June). Smart charging effects on Electric Vehicle charging behavior in terms of Power Quality. In 2021 IEEE Madrid PowerTech (pp. 1-5). IEEE.

[16] Ullah, I., Liu, K., Yamamoto, T., Zahid, M., & Jamal, A. (2023). Modeling of machine learning with SHAP approach for electric vehicle charging station choice behavior prediction. Travel Behaviour and Society, 31, 78-92.

[17] Cui, D., Wang, Z., Liu, P., Wang, S., Zhao, Y., & Zhan, W. (2023). Stacking regression technology with event profile for electric vehicle fast charging behavior prediction. Applied Energy, 336, 120798.

[18] Alshammari, A., & Chabaan, R. C. (2023). Metaheuristic Optimization Based Ensemble Machine Learning Model for Designing Detection Coil with Prediction of Electric Vehicle Charging Time. Sustainability, 15(8), 6684.

[19] Zhang, J., Wang, Z., Miller, E. J., Cui, D., Liu, P., & Zhang, Z. (2023). Charging demand prediction in Beijing based on real-world electric vehicle data. Journal of Energy Storage, 57, 106294.

[20] Liu, K., & Liu, Y. (2023). Stochastic user equilibrium based spatial-temporal distribution prediction of electric vehicle charging load. Applied Energy, 339, 120943.

[21] Li, X., Wang, Z., Zhang, L., Sun, F., Cui, D., Hecht, C., ... & Sauer, D. U. (2023). Electric vehicle behavior modeling and applications in vehicle-grid integration: An overview. Energy, 126647.

[22] Koohfar, S., Woldemariam, W., & Kumar, A. (2023). Performance Comparison of Deep Learning Approaches in Predicting EV Charging Demand. Sustainability, 15(5), 4258.

[23] Lee, Z. J., Li, T., & Low, S. H. (2019). ACN-data: Analysis and applications of an open EV charging dataset. In Proceedings of the 10th ACM International Conference on Future Energy Systems (pp. 139-149). New York, NY, USA.

[24] ACN-Data: A Public EV Charging Dataset. Retrieved July 2, 2020, from https://ev.caltech.edu/dataset

[25] TCCON Weather. Retrieved July 2, 2020, from http://tcconweather.caltech.edu/

[26] Gelaro, R., McCarty, W., Suárez, M. J., Todling, R., Molod, A., Takacs, L., Randles, C. A., Darmenov, A., Bosilovich, M. G., Reichle, R., & Wargan, K. (2017). The modern-era retrospective analysis for research and applications, version 2 (MERRA-2). Journal of Climate, 30, 5419-5454. doi: 10.1175/JCLI-D-16-0758.1

[27] Mendelsohn, R., Kurukulasuriya, P., Basist, A., Kogan, F., & Williams, C. (2007). Climate analysis with satellite versus weather station data. Climatic Change, 81(1), 71-83. doi: 10.1007/s10584-006-9139-x

[28] Leduc, G. (2008). Road traffic data: Collection methods and applications. Working Papers on Energy, Transport and Climate Change, 1, 1-55.

[29] Pasadena Traffic Count Website. Retrieved January 21, 2021, from https://data.cityofpasadena.net/datasets/eaaffc1269994f0e8966e2024647cc56

[30] Goudarzi, F. (2018, June). Travel time prediction: Comparison of machine learning algorithms in a case study. In Proceedings of the IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS) (pp. 1404-1407). doi: 10.1109/HPCC/SmartCity/DSS.2018.00232

[31] Barth, D. (2009). The Bright Side of Sitting in Traffic: Crowdsourcing Road Congestion Data. Mountain View, CA, USA: Google Official Blog.

[32] Calendar | www.caltech.edu. Retrieved January 23, 2021, from https://www.caltech.edu/campus-life-events/master-calendar

[33] Liu, F. T., Ting, K. M., & Zhou, Z.-H. (2008, December). Isolation forest. In Proceedings of the 8th IEEE International Conference on Data Mining (pp. 413-422). doi: 10.1109/ICDM.2008.17

[34] Bishop, S. (2016). Pytz: World Timezone Definitions for Python. Retrieved May 25, 2012, from http://pytz.sourceforge.net

[35] McKinney, W. (2011). Pandas: A foundational Python library for data analysis and statistics. Python for High Performance and Scientific Computing, 14(9), 1-9.

[36] Sadeghian, Z., Akbari, E., & Nematzadeh, H. (2021). A hybrid feature selection method based on information theory and binary butterfly optimization algorithm. Engineering Applications of Artificial Intelligence, 97, 104079.

Document information

Published on 03/05/24

Accepted on 24/04/24

Submitted on 28/02/24

Volume 40, Issue 2, 2024

DOI: 10.23967/j.rimni.2024.02.002

Licence: CC BY-NC-SA license
