Equipment

| Equipment | Cost (€) |

| Animal liver | 7 |

| 15' CT scan with contrast | 228* |

| 3D printing of the models | 110 |

| 1 MacBook Air computer | 899 |

| 1 Dell XPS 15 computer | 1500 |

| HoloLens™ Development Edition | 3299 |

| Microsoft | Free (under UB identification) |

| 3D Slicer | Free |

| MeshLab | Free |

| MeshMixer | Free |

| GiD | Free (for 1-month trial) |

| Unity | Free |

| TOTAL | 6043 |
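As a quick consistency check of the budget above, the total can be recomputed from the priced items. The following is a minimal Python sketch. It assumes that items listed as free contribute 0 €, and it ignores the footnote marker on the CT scan cost, whose meaning is not given in this section.

```python
# Priced items from the budget table, in euros. Items listed as free
# (software, trial licences) contribute 0 EUR and are therefore omitted.
costs_eur = {
    "Animal liver": 7,
    "15' CT scan with contrast": 228,  # marked with * in the table
    "3D printing of the models": 110,
    "1 MacBook Air computer": 899,
    "1 Dell XPS 15 computer": 1500,
    "HoloLens Development Edition": 3299,
}

total_eur = sum(costs_eur.values())
print(f"Total: {total_eur} EUR")  # prints "Total: 6043 EUR"
```

The recomputed sum matches the TOTAL row of the table.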

Revision as of 11:52, 30 May 2019

Acknowledgements

I would like to thank all those who have helped me to carry out this thesis project, without whom none of this would have been possible.

First, I would like to thank my director, Eduardo Soudah, for all the Skype calls, the doubts, the questions and the answers, for always keeping his door open and for his invaluable help throughout the whole project. Even though he is a Real Madrid fan, working with him was an incredible opportunity, and I wish all students had a thesis director as funny and helpful as Eduardo.

Second, I would like to thank Ignacio Valero, not only for introducing me to Dr. Torrent but also for helping me whenever I needed advice about my future. It is a privilege to have a counsellor of Ignacio's calibre. Third, I would like to thank Dr. Torrent for allowing an undergraduate student into one of his surgeries, for answering all of my curious questions and, above all, for wanting to innovate in the medical field.

Next, I would like to thank PhD student Oscar de Coss for explaining to me the complicated world of augmented reality and for doing so with such grace and patience (even on a Friday night). Also, a special thank you to Dr. Pavia, my tutor, for his advice from a clinician's point of view.

Moreover, thank you to the staff of the Nuclear Imaging Department at Hospital Clínic who helped me take the CT scan, and to the CIMNE staff for helping me with the post-processing. Also, thank you to J. S. Witowski for helping me with the 3D Slicer software for image segmentation. One seldom finds people so willing to help without even knowing whom they are helping.

Finally, I must express my very deepest gratitude to my parents, to my sister and to Miguel for providing me with continuous support throughout my years of study. I would not have been able to complete the final thesis of my degree without their constant love and encouragement.

Abstract

Half of all patients diagnosed with colorectal cancer develop liver metastasis during the course of their disease. Surgery provides the best opportunity for long-term survival and even the chance of a cure. To achieve an adequate tumor resection, the surgeon must have precise knowledge of the tumor location relative to the surrounding major vascular structures. However, to obtain this knowledge, surgeons currently have to look away from the patient repeatedly to consult the patient's CT scan or MRI on a nearby monitor. There is thus a clinical need for a better system to assist surgeons.

In this project, the feasibility of using augmented reality (AR) to assist open liver surgery was studied. Separate volumes of the liver surface, vascular and tumor structures were delineated from a CT scan of an animal's liver. These were then 3D printed and also rendered by means of a custom application within the HoloLens™ head-mounted display. The accuracy of image registration was evaluated subjectively by checking the alignment of the visible structures between the virtual model and the 3D printed model. Using a combination of tracked hand gestures and voice commands, the AR setup indicated the location of the tumor and vasculature of the liver model with high accuracy. These results suggest that superimposing these structures onto the patient's liver could facilitate orientation and navigation during surgery. This could improve tumor resection and thus increase patient safety.

1. Introduction

1.1 Motivation

Colorectal cancer (CRC) is one of the most common cancers in the world. In Spain, nearly a quarter of a million new invasive cancer cases were diagnosed in 2015: almost 149,000 in men (60.0%) and 99,000 in women [1]. CRC incidence and mortality rates have been declining for several decades because of historical changes in risk factors (e.g. decreased smoking and red meat consumption and increased use of aspirin), the introduction and dissemination of screening tests, and improvements in treatment [2].

Despite these advances, approximately half of all patients diagnosed with CRC will develop liver metastasis (LM) during the course of their disease. When left untreated, colorectal LM is rapidly and uniformly fatal, with a median survival measured in months. Surgical resection provides the best opportunity for long-term survival and even the chance of a cure, and so it is the current paradigm of treatment [3].

In the oncosurgical approach to LM, the surgeon faces two major challenges: complete removal of the tumor and avoidance of damage to secondary structures such as blood vessels. To achieve an adequate tumor resection, with good oncologic outcomes and without injury to or needless sacrifice of adjacent normal hepatic parenchyma, the surgeon must have precise knowledge of the tumor location relative to the surrounding major vascular and biliary structures. With these factors in mind, image-guided liver surgery aims to enhance the precision of resection while preserving uninvolved parenchyma and vascular structures [4]. Recent developments in medical imaging technology focus on real-time data acquisition and visualization. Better access to real-time data can make treatment faster and more reliable. This access is further enhanced by augmented reality (AR), the fusion of projected computer-generated images with the real environment. The main advantage of AR is that, unlike with conventional visualization techniques, the surgeon does not have to look away from the surgical site [5]. In this way, internal structures computed from preoperative scans, such as tumors and vessels, can be superimposed onto the organs to facilitate orientation and navigation during surgery.

In March 2017, the author of this project witnessed an oncological surgery in which the doctor expressed the need for a system to visualize the patient's previous medical images in real time. After several meetings between the doctor and the Polytechnic University of Catalonia (PUC), it was decided to begin a project to study the use of augmented reality in oncosurgery. Later on, the PUC contacted the author, who had an interest in the field of augmented reality in medicine.

1.2 Objectives

The main aim of this project is to study the feasibility of using augmented reality in open liver surgery. For this purpose, a 3D model of an animal's liver will be obtained from a medical image and printed, and the augmented reality system will be tested on this model.

Thus, several specific objectives need to be achieved. These are the following:

A. Performing a medical imaging technique on an animal's liver

A medical imaging technique such as CT or MRI will be performed on an animal's liver to obtain an image of the liver and the hepatic vasculature, from which the models to be printed will be developed.

B. Segmentation of the liver and hepatic vasculature

From the image obtained in A, a model of both the liver and the main hepatic vasculature will be obtained using segmentation software.

C. Post-processing of the segmentations

The segmentations will need to be prepared for 3D printing using different post-processing software packages. This procedure may include smoothing the surface, splitting a model into two, increasing the wall thickness and creating a virtual tumor.

D. 3D printing of the models

The models of the tumor, the liver and the vasculature will be printed using a 3D printer. These models will serve as the "real liver" onto which the "virtual liver" will be superimposed.

E. Augmented reality application

Augmented reality will be applied to superimpose the virtual model onto the view of the printed model by means of a marker.

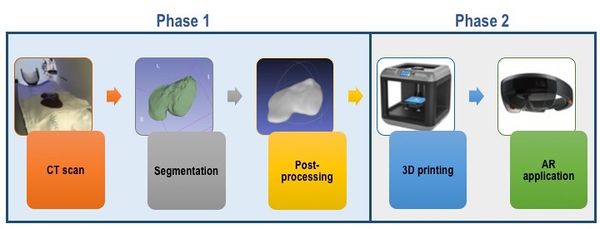

1.3 Methods

The project is divided into two phases, each involving different objectives. On the one hand, phase I includes obtaining the image and creating the models to be printed, and therefore involves objectives A, B and C. On the other hand, phase II includes objectives D and E: the models are printed and assembled, and the augmented reality test, which is the ultimate objective of the project, is carried out (Figure 1).


| Figure 1. Methodology followed in the present report, involving the two relevant phases. |

Regarding the structure of the present report, the introduction (in which the objectives of the project are described) is followed by a background section discussing the presence of augmented reality in the surgical field. The next two sections, market analysis and regulations, discuss the current market sector, its prospects and the legislation related to the product. In the conception engineering section, several options for each objective are presented and a final solution is chosen. In the next chapter, detail engineering, this solution is explained thoroughly, detailing the approach followed in the present project as well as the results obtained.

The following chapter describes the viability of the product by means of a SWOT analysis and discusses the clinical implementation of the approach. The next two chapters focus on the project budget and its timing. The final two chapters discuss whether the objectives have been fulfilled and how the project can be continued, and present the relevant conclusions.

1.4 Scope and span

The scope of this project comprises the following concepts: liver metastasis, hepatic surgery, liver and vasculature segmentation, 3D printing and augmented reality. All these concepts are related to the application of AR in hepatic oncosurgery.

The span of this project is the field of oncosurgery, that is, surgery on patients who suffer from cancer. In particular, the study is meant to cover hepatic oncosurgeries, which can be complicated by the surgeon's reduced view. With the use of augmented reality during surgery, tumor removal could be improved and damage to secondary vessels reduced, thus increasing the success of the surgery. The final aim of this study is to create a static model of the vasculature and tumor inside a liver model, which can be superimposed onto the model itself using augmented reality. In a real clinical setting, a patient who requires hepatic surgery would first undergo a medical imaging examination. Then, from the image obtained, a model containing the accurate location of the vasculature and the tumor would be generated. This model would then be imported into a head-mounted display (HMD), which would allow the surgeon to see the patient's model overlaid on his or her view of the patient. Ideally, the model should be dynamic, changing as the surgeon resects part of the liver. Visualizing the vasculature and the tumor in the model would allow the surgeon to know exactly where (and where not) to resect, thus increasing the efficiency of the surgery.

1.5 Location of the project

The project described in this thesis has been developed mainly in the offices of the CIMNE (International Centre for Numerical Methods in Engineering) group, in Barcelona. The author has collaborated with the group located on the first floor of building B0, which contains different offices and a meeting room. The post-processing of the segmentations, the creation of the tumor model, the assembly and the application of augmented reality onto the model have all been carried out within these offices.

Regarding the literature review, the writing of the present work and the segmentation of the liver and vasculature, most of these tasks have been carried out in the library of the Institut Polytechnique de Grenoble, France, during the author's temporary stay at that university to complete their studies.

Additionally, the medical imaging of the liver was performed at Hospital Clínic, in the Department of Nuclear Medicine, and the 3D printing was performed in the offices of the company El Tucán, in Barcelona. Moreover, the surgery witnessed in March 2017, where the project originated, took place at Clínica el Pilar, in Barcelona. Finally, another visit to witness a hepatic oncosurgery and talk to another surgeon interested in the project took place at the Institut du Cancer de Montpellier.

2. Background

2.1. General concepts

This background section reviews the area under study. As a starting point, some important concepts such as liver metastasis, augmented reality and navigation surgery are introduced to enable a better understanding of the whole project.

2.1.1. Liver metastasis

Liver metastases are cancerous tumors that have spread (metastasized) to the liver from another part of the body. These tumors can appear shortly after the original tumor develops, or even months or years later. Most liver metastases start as cancer in the colon or rectum. Up to 70% of people with colorectal cancer eventually develop liver metastases. This happens in part because the blood supply from the intestines is connected directly to the liver through the portal vein (Figure 2) [6]. Colorectal liver metastases are often found during screening examinations following previous surgery to remove the colon or rectum. Sometimes the cancer is found to have spread to the liver at the same time as the colon or rectal cancer is diagnosed.

| Figure 2. Metastatic colon cancer that has spread to the liver [7] |

In order to diagnose liver metastasis, a physician may order several tests such as [7]:

- Blood tests: complete blood count and liver function tests.

- CT scan and/or MRI: to identify the tumors and determine their size and location in the liver, as well as their relation to vascular/biliary structures.

- PET scan: whole-body scan to look for evidence of active cancer throughout the body.

- Liver biopsy: removal of a small amount of liver tissue by laparoscopy or fine-needle aspiration.

The treatment of liver metastases requires a multidisciplinary approach that may involve chemotherapy, surgery, interventional radiology and portal vein embolization. The removal of the affected part of the liver via surgery is known as liver resection. Only a relatively small number of patients with LM are suitable for surgery. Whether this operation is an option depends on how much of the liver is affected, the size of the tumor(s), how well the liver is functioning, the location of the tumor(s) in the liver and the general fitness of the patient. However, if surgery is an option, it offers the best chance of long-term survival [8]. In current practice, the surgeon consults the medical images on an additional monitor during the procedure, interpreting them and memorizing the location of the tumor. This means that the success of the intervention depends to some extent on the surgeon's interpretation skills as well as on his or her performance.

Augmented reality (AR) is the addition of artificial information to one or more of the senses that allows the user to perform tasks more efficiently. This can be achieved using superimposed images, video or computer-generated models [6]. AR has been implemented in many aspects of modern daily life, such as automotive navigation systems and smartphone applications (Figure 3).


| Figure 3. AR system used in a smartphone game, Pokémon GO |

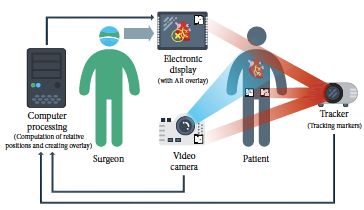

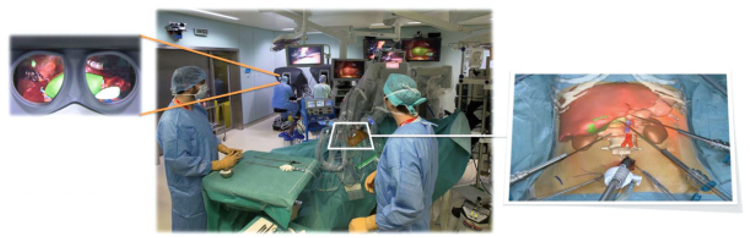

Thanks to significant improvements in imaging techniques and information technology, surgeons' abilities can be considerably enhanced by integrating pre-existing images on screen during video-assisted procedures. 3D ultrasound, computed tomography (CT) or magnetic resonance imaging (MRI) data can be recorded, segmented and then displayed, not only for an exact diagnosis but also to improve the surgeon's spatial orientation. Image-guided surgery (IGS), or navigation surgery, correlates pre- or intraoperative images with the operative field in real time. IGS aims to increase precision by providing a constant flow of information on a target area and its surrounding anatomical structures [7]. One way to provide this information is augmented reality (AR). Surgeons constantly face the task of mentally integrating two-dimensional radiographs with the three-dimensional surgical field, which is precisely why augmented reality is so attractive [8]. Figure 4 shows the basic principles of the use of augmented reality in surgery.

| Figure 4. Basic principles of the use of AR in surgery [5] |

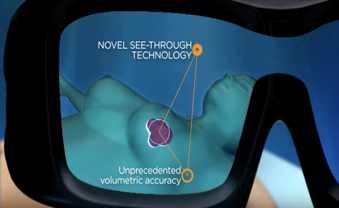

As shown, a computer-generated (CG) image is superimposed on real-world imagery captured by a camera, and the combination is displayed on a computer, tablet PC or video projector ("head's up" visualization, see Figure 5). Another possibility is to use a special head-mounted display (HMD, sometimes referred to as “smart glasses”) that resembles eyeglasses ("see-through" display). These devices use special projectors, head tracking and depth cameras to display CG images on the glass, creating the illusion of augmented reality. Using an HMD is beneficial because, compared with a traditional display, it barely obstructs the surgeon's view, it does not need to be moved, and proper line-of-sight alignment between the display and the surgeon is less critical [5].

| Figure 5. See through (top) and head's up (bottom) displays [6] |

AR is especially useful for visualizing critical structures such as major vessels, nerves or other vital tissues. By projecting these structures directly onto the patient, AR can increase safety and reduce the time required to complete the procedure. Another useful feature of AR is the ability to control the opacity of displayed objects. Most HMDs allow the wearer to turn off all displayed images, making the display fully transparent and thus removing any possible distraction in an emergency. Furthermore, voice recognition can be used to create voice commands, enabling hands-free control of the device. This is especially important in surgery, as it allows surgeons to control the device without needing assistance or breaking aseptic protocols. Another interesting option is gesture recognition, which allows the team to interact with the hardware through body movements, even on sterile surfaces or in the air [5].
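In a head's up (video-based) setup, superimposing a CG image on the camera image and controlling its opacity amount to alpha compositing. The following is a minimal sketch under several assumptions: it uses NumPy, the frames are 8-bit RGB images of equal size, and a boolean mask marks where the virtual structures were rendered. The function `overlay` and the toy images are hypothetical. Optical see-through HMDs such as HoloLens™ combine virtual and real images optically rather than in software, so the sketch applies to the head's up case only.

```python
import numpy as np


def overlay(camera_frame: np.ndarray, rendered: np.ndarray,
            mask: np.ndarray, opacity: float) -> np.ndarray:
    """Alpha-blend a rendered CG layer onto a camera frame (hypothetical helper).

    camera_frame, rendered: HxWx3 uint8 RGB images of the same size.
    mask: HxW boolean array, True where the virtual model was drawn.
    opacity: 0.0 hides the overlay entirely, 1.0 draws it fully opaque.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be in [0, 1]")
    frame = camera_frame.astype(np.float32)
    layer = rendered.astype(np.float32)
    # Per-pixel alpha: the chosen opacity inside the model, zero elsewhere.
    alpha = mask.astype(np.float32)[..., None] * opacity
    blended = alpha * layer + (1.0 - alpha) * frame
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)


# Toy example: a grey 4x4 "camera" frame and a red 2x2 "vessel" in one corner.
camera = np.full((4, 4, 3), 100, dtype=np.uint8)
cg = np.zeros((4, 4, 3), dtype=np.uint8)
cg[:2, :2] = (255, 0, 0)
vessel_mask = np.zeros((4, 4), dtype=bool)
vessel_mask[:2, :2] = True

half = overlay(camera, cg, vessel_mask, opacity=0.5)
print(half[0, 0], half[3, 3])  # blended pixel, untouched pixel
hidden = overlay(camera, cg, vessel_mask, opacity=0.0)  # overlay "turned off"
```

Setting `opacity=0.0` returns the camera frame unchanged, which corresponds to switching the overlay off in an emergency.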

2.2. State of the art technology

This section addresses the following question: has AR previously been used in hepatic surgery? To the author's knowledge, there are currently no studies focusing on the application of AR through an HMD in open hepatic surgery. However, the increasing demand for AR-based systems in minimally invasive surgery (MIS) has yielded several studies. Table 1 summarizes the most relevant papers and news from the past seven years.

| Surgery Type / Organ | AR system display | Year | Country | Main author |

| Syngo iPilot AR for MIS (Classical Laparoscopy) / Liver | Head's up | 2011 | Germany | H.G. Kenngott |

| MIS (Robotic) / Liver | Head's up | 2015 | France | D. Notourakis |

| MIS (Robotic) / Liver | Head's up | 2014 | France | P. Pessaux |

| MIS (Robotic and Classical Laparoscopy) / Several | Head's up | 2017 | France | L. Soler |

| Open Surgery / Liver | See through (using a projector) | 2011 | Switzer. | K. Gavaghan |

| Open Surgery / Liver | See through (using an iPad) | 2013 | Germany | Dr. Karl Oldhafer 's team* |

| No surgery - Proof of concept | Head's up | 2013 | France | N. Haouchine |

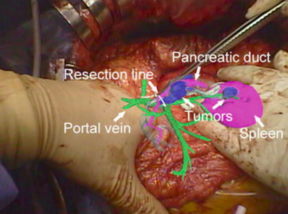

| Open Surgery / Pancreas | Head's up | 2013 | Japan | T. Okamoto |

| Open Surgery / Muscular tumor extraction** | See through (HoloLens™) | 2017 | Spain | Dr. Rubén Pérez 's team* |

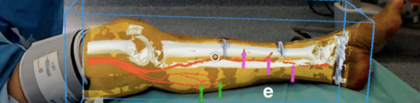

| Open Surgery / Extremity Reconstruction | See through (HoloLens™) | 2018 | UK | P. Pratt |

The first clinical experience of the use of AR in hepatic surgery took place in 2013 in Heidelberg, Germany [9]. In this experience, the authors evaluated the feasibility of a commercially available AR system, the Syngo iPilot AR (Siemens Healthcare Sector, Forchheim, Germany), employing intraoperative robotic C-arm cone-beam computed tomography (CBCT) for laparoscopic liver surgery. After the acquisition of intraoperative CBCT, this software enabled volume registration to generate a real-time overlay of CBCT data on the fluoroscopy. It also highlighted important structures and features, such as vessels or resection planes, to guide the surgeon. The patient underwent the operation successfully and showed no postoperative complications.

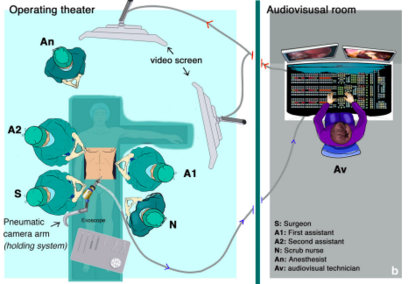

Shortly after that experience, researchers and doctors at the Research Institute against Digestive Cancer (IRCAD, Strasbourg, France) also began to test this new technology in minimally invasive surgery (MIS). Pessaux et al. performed three robotic AR hepatic surgeries [10, 11]. The 3D virtual anatomical model was obtained from a CT scan using custom software (VR-RENDER®, IRCAD). The virtual model was then superimposed onto the operative field using an exoscope (VITOM®, Karl Storz, Tuttlingen, Germany). A computer scientist manually registered the virtual and real images in real time with a video mixer, based on external anatomical landmarks.

Figure 6 shows the operating room setup: the exoscope with its holding system is installed on the right side of the operative table; the screen showing the AR image is behind the first assistant; the live video signal recorded by the exoscope is transmitted by optic fibers to the audiovisual room where the image processing is done; finally, the augmented reality image is transmitted back to the operating room screen.

| Figure 6. Operating room setup in [10] |

With reference to the results of this study, AR allowed for the precise and safe recognition of all major vascular structures during the procedure. At the end of the procedure, the remnant liver was correctly vascularized and resection margins were negative in all cases [10, 11].

Additionally, in further investigations [12], these researchers developed this system into a two-step interactive augmented reality method. The first step consisted of registering an external view of the real patient with a similar external view of the virtual patient. The second step consisted of positioning and correcting the virtual camera in real time so that it was oriented like the laparoscopic camera (Figure 7). Real video views were provided by two cameras inside the OR: the external view of the patient came from the camera of the shadowless lamp or from an external camera, and the laparoscopic camera provided the internal image. Both images were sent via a fiber-optic network and visualized on two different screens by the independent operator in the video room. A third screen displayed the view of the 3D patient rendering software, running on a laptop equipped with a capable 3D graphics card and controlled by the operator. The augmented reality view was then obtained using a Panasonic MX 70 video mixer, which offered a merged view of the two interactively selected screens. The technique used several anatomical landmarks chosen on the skin (such as the ribs, xiphoid process, iliac crests and umbilicus) and inside the abdomen (inferior vena cava and two laparoscopic tools) [12].


| Figure 7. Interactive augmented reality realized on the da Vinci robot providing an internal AR view (left) and an external AR view (right) [12]. |

It is noteworthy that this interactive augmented reality (IAR) method was successfully tested by the authors in more than 50 surgical oncological procedures for liver, adrenal gland, pancreas and parathyroid tumor resection, including both robotic and classical laparoscopic surgeries.

In contrast to minimally invasive surgery, the demand for AR systems in open surgery is more limited (mainly due to the greater movement of the organ and surrounding structures). As a result, clinical reports are scarce.

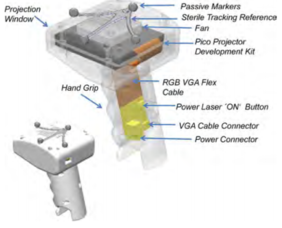

In 2011, Gavaghan et al. developed a portable image overlay projection device for computer-aided open liver surgery (Figure 8). The handheld device was based on miniature laser projection technology. It allowed images of 3D patient-specific models to be projected directly onto the organ surface intraoperatively, without the need for intrusive hardware around the surgical site. The projector was calibrated using modified camera calibration techniques, and images for projection were rendered using a virtual camera defined by the projector's extrinsic parameters. Verification of the device's projection accuracy showed a mean projection error of 1.3 mm. Visibility testing of the projection on pig liver tissue found the device suitable for displaying anatomical structures on the organ surface. Moreover, the feasibility of its use within the surgical workflow was assessed during open liver surgery, showing that the device could be deployed quickly and unobtrusively within the sterile environment. However, the device presented two main problems. First, because of its inadequate cooling system, it had to be turned off for cooling after approximately 15 min of use. Second, light intensity was clearly a limiting factor: the maximum intensity of the device was lower than that of the OR illumination, so the OR lights had to be dimmed while the device was in use [13].

| Figure 8. Design of the portable handheld image overlay device (left) and application of the device during surgery (right) [13] |
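Rendering images "using a virtual camera defined by the projector's extrinsic parameters" can be understood with the pinhole model. A projector behaves like an inverted camera, so a point X of the patient model is mapped to a projector pixel by x ~ K(RX + t), where R and t are the extrinsic parameters and K is the intrinsic matrix. The following NumPy sketch shows this mapping and computes a mean projection error against measured positions. All calibration values and landmark coordinates are invented for illustration. The error here is expressed in pixels, whereas [13] reports it in millimetres on the projection surface.

```python
import numpy as np


def project(points_3d: np.ndarray, K: np.ndarray,
            R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Pinhole projection of Nx3 world points to Nx2 pixel coordinates.

    R (3x3) and t (3,) are the extrinsic parameters (world -> device frame);
    K (3x3) is the intrinsic matrix (focal lengths and principal point).
    """
    cam = points_3d @ R.T + t          # world frame -> device frame
    if np.any(cam[:, 2] <= 0):
        raise ValueError("all points must lie in front of the device")
    uvw = cam @ K.T                    # apply the intrinsics
    return uvw[:, :2] / uvw[:, 2:3]    # perspective division


def mean_projection_error(predicted: np.ndarray, measured: np.ndarray) -> float:
    """Mean Euclidean distance between predicted and measured 2D positions."""
    return float(np.mean(np.linalg.norm(predicted - measured, axis=1)))


# Hypothetical calibration: 800 px focal length, principal point (320, 240),
# device 500 mm from the organ and looking straight at it.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 500.0])

# Three hypothetical landmarks on the model surface (mm, world frame).
landmarks = np.array([[0.0, 0.0, 0.0],
                      [50.0, 0.0, 0.0],
                      [0.0, -25.0, 0.0]])
pixels = project(landmarks, K, R, t)
print(pixels)

# Made-up "measured" positions, e.g. detected in a verification image.
measured = pixels + np.array([[3.0, 4.0], [0.0, 0.0], [0.0, 0.0]])
print(mean_projection_error(pixels, measured))
```

In a real calibration, K, R and t would be estimated from calibration images rather than chosen by hand.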

Also in open hepatic surgery, in 2013 Karl Oldhafer, a surgeon in Hamburg, used a tablet in the operating room to demonstrate a few applications developed by researchers from the Fraunhofer Institute [14]. They used the tablet's integrated camera to film the liver during the operation (Figure 9). The app was able to superimpose some planning data, including vessels in different colors. However, the user had to move and turn the device until the real liver video and the planning data were manually aligned with some degree of accuracy. Despite the media coverage, neither Oldhafer nor Fraunhofer has published any results so far.

| Figure 9. iPad using AR during open liver surgery [14] |

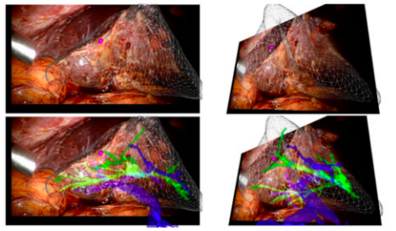

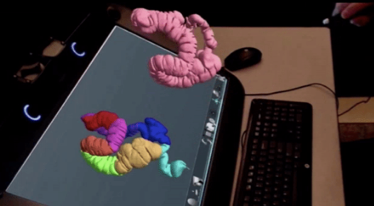

In addition, two studies must be mentioned even though they do not directly involve the use of AR during liver surgery. First, Haouchine et al. developed an image-guided biomechanical model able to capture the complex deformations undergone by the liver during surgery, mainly caused by interaction with surgical tools, respiratory motion and heartbeat [15]. A complete experimental bench capable of reproducing, as closely as possible, a typical scenario encountered during liver tumor resection was designed. Promising results were obtained using in vivo human liver data and a liver phantom, with localization errors of internal structures below the safety margins currently applied during these interventions [15]. Figure 10 shows a sequence of images representing the superimposition of the real-time biomechanical model onto a human liver undergoing deformation due to surgical instrument interaction during minimally invasive surgery.

| Figure 10. Liver represented in a wire-frame, the tumor in purple, the hepatic vein in blue and portal vein in green [15] |

The second study is that of Onda et al., who focused on the pancreas as the target organ because its intraoperative shift is minimal [16]. The authors used a video see-through display, in which a short rigid stereoscope (developed by them) captured the operative field and preoperative CT images were then integrated on a 2D or 3D monitor (Figure 11). Their solution to organ deformation was the ability to quickly register defined points on the organ, using an infrared marker to co-register fiducial points. They used both soft tissue landmarks and blood vessels and found no significant difference in the accuracy of the two methods.

| Figure 11. The images of the scope are displayed on a stereo display monitor (left). AR navigation image during pancreatic surgery of the patient (right) [16] |
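Co-registering fiducial points, as described for [16], is commonly formulated as paired-point rigid registration. The goal is to find the rotation R and translation t that best map fiducials located in the preoperative CT onto the same fiducials measured intraoperatively, for example with a tracked infrared pointer. The sketch below uses the SVD-based least-squares solution (Kabsch/Arun method) with NumPy and reports the root-mean-square fiducial registration error (FRE). It illustrates the general technique and is not necessarily the algorithm used by the authors. The coordinates are invented.

```python
import numpy as np


def rigid_register(moving: np.ndarray, fixed: np.ndarray):
    """Least-squares rigid transform (R, t) such that fixed ~= moving @ R.T + t.

    moving, fixed: Nx3 arrays of corresponding points (N >= 3, not collinear).
    """
    mu_m = moving.mean(axis=0)
    mu_f = fixed.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets.
    H = (moving - mu_m).T @ (fixed - mu_f)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_f - R @ mu_m
    return R, t


def fiducial_registration_error(moving, fixed, R, t) -> float:
    """Root-mean-square distance between mapped and measured fiducials."""
    residuals = moving @ R.T + t - fixed
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


# Hypothetical fiducials in CT coordinates (mm).
ct_points = np.array([[0.0, 0.0, 0.0],
                      [40.0, 0.0, 0.0],
                      [0.0, 30.0, 0.0],
                      [0.0, 0.0, 20.0]])

# Simulated intraoperative measurements: 30 degree rotation about z plus a shift.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([10.0, -5.0, 2.0])
measured = ct_points @ R_true.T + t_true

R, t = rigid_register(ct_points, measured)
fre = fiducial_registration_error(ct_points, measured, R, t)
print(f"FRE = {fre:.6f} mm")  # close to zero for noise-free points
```

With real, noisy measurements the FRE is non-zero. A low FRE does not by itself guarantee a low error at targets away from the fiducials, such as a tumor (the target registration error).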

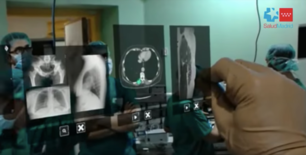

Finally, two experiences that have included the use of HoloLens™ in the operating theatre are worth mentioning. In the first, in 2017, a team of surgeons from Gregorio Marañón Hospital teamed up with the Spanish medical technology company Exovite to use HoloLens™ to display useful information during surgery. This information included a human atlas, previous CT scan data and ultrasound data (Figure 12). HoloLens™ was used during a muscular tumor resection, and the procedure took almost half the time it would have taken without the device [17]. According to those involved, use of the headset reduced the surgery time from around eight hours to about four and a half. This time saving reduces the surgeon's stress levels and ultimately leads to better performance. This experience suggests that providing useful information right in front of surgeons' eyes as they operate can increase accuracy and reduce procedure time.

| Figure 12. Surgeon using HoloLens™ to display patient information [17] |

In the second experience, a team of surgeons led by P. Pratt used AR with HoloLens™ to assist the accurate identification, dissection and execution of vascular pedunculated flaps during reconstructive surgery (Figure 13) [18]. In particular, separate volumes of osseous, vascular, skin and soft-tissue structures and the relevant vascular perforators were delineated from preoperative CTA scans to generate three-dimensional images, using two complementary segmentation software packages. These were converted to polygonal models and rendered by a custom application within the HoloLens™ stereo head-mounted display. Intraoperatively, the models were registered manually to their respective subjects by the operating surgeon, using a combination of tracked hand gestures and voice commands.

| Figure 13. Surgeon using HoloLens™ (right) and overlay view (left) [18] |

3. Market analysis

3.1. Market sector

It is important to note that the product addressed in this report, HoloLens™, cannot be considered a medical device, since it does not meet the European definition of a medical device: "any instrument, apparatus, appliance, software, material or other article, whether used alone or in combination, […], intended by the manufacturer to be used for human beings for the purpose of: diagnosis, prevention, monitoring, treatment, […] and which does not achieve its principal intended action in or on the human body by pharmacological, immunological or metabolic means, but which may be assisted by such means" [19]. Thus, even though HoloLens™ can be used to assist during surgery, it is not a medical device, because it is not intended by its manufacturer to be used on human beings for any of the purposes mentioned above. However, since in this project the device is intended to be used in a hospital, the principal market sector at which it is targeted is the healthcare sector.

3.2. Examples found in the sector

In the healthcare sector, several AR devices have been developed (Table 2).

| Company | Product name | Application |
|---|---|---|
| Royal Philips | - | Spinal surgery navigation |
| MedSights Tech | - | Tumor reconstruction for surgical planning |
| EchoPixel | True 3D | Surgical planning and training |
| 3D4Medical | Project Esper | Surgical training |
| AccuVein | AccuVein | Vasculature visualization |
| Aira | Aira | Assistant for the visually impaired |
| Orca Health | EyeDecide | Patient education in ophthalmology |

First, Royal Philips announced last year that it is developing an AR-based navigation system for spinal surgery. This technology (Figure 14), which is still under development, will aid surgeons in both minimally invasive and open surgery [20]. Moreover, MedSights Tech has developed software to test the feasibility of using AR to create accurate three-dimensional reconstructions of tumors. This image reconstruction technology essentially gives surgeons x-ray-like views in real time, without any radiation exposure. It has been designed to be intuitive for practitioners in various fields, including technicians, surgeons and other medical specialists, and it has also been tested on other pathologies, such as skin and subcutaneous, head and neck, gastrointestinal tract, endocrine and other retroperitoneal pathologies [21]. Along the same lines, EchoPixel's True 3D system uses a wide variety of current medical image datasets to enable radiologists, cardiologists, pediatric cardiologists and interventional neuroradiologists to see patient-specific anatomy in an open 3D space. This technology helps physicians visualize key clinical features and supports complex surgical planning, medical education and diagnostics [22]. In addition, 3D4Medical has created the yet-to-be-released "Project Esper", a tool intended to take the learning of human anatomy to a new level. In what the company calls "immersive anatomical learning", users can interact intuitively with different models, bringing textbook content to life. The concept is well suited to training future surgeons, who can visualize and interact with specific parts of the body before operating on a live person [23].

| Figure 14. AR applications in the healthcare market (I): (A) Royal Philips [20]. (B) MedSights Tech [21]. (C) EchoPixel [22]. (D) 3D4Medical [23] |

Other relevant applications include:

- AccuVein

AccuVein uses AR through a handheld scanner that projects onto the skin and shows nurses and doctors where the veins are in the patient's body [24, 25].

- Aira

Aira offers solutions that help visually impaired people live more independent lives. The device uses deep learning algorithms to describe the environment to the user, read out text, recognize faces or notify the user about obstacles. Using a pair of smart glasses or a phone camera, the system allows an Aira "agent" to see what the blind person sees in real time and then talk them through whatever situation they are in [26].

- EyeDecide

EyeDecide, a patient engagement app from Orca Health, uses AR to help patients with vision problems describe their symptoms and better understand their condition. It also allows ophthalmologists to show patients the potential long-term effects of negative lifestyle choices on their eyesight, encouraging them to be more proactive about their healthcare in the present [27].

3.3. Future perspectives of the market

The feasibility of using augmented reality has been demonstrated by an ever-increasing number of research projects. Augmented reality is expected to influence health care in many ways: from how medical students learn about the human body, to consultations with doctors in remote places via video conference, to less invasive surgeries that enable physicians to "look" into a patient without making an incision. On the one hand, current augmented reality projects focus on helping surgeons perform more effective and efficient procedures. On the other hand, virtual reality applications are being developed to treat patients who suffer from serious mental and behavioural health problems: by creating virtual environments, conditions such as post-traumatic stress disorder (PTSD), schizophrenia, anxiety and eating disorders can be treated [28].

Looking ahead, the market is seeing increasing private investment from various investors, which is expected to boost growth further. According to a report by Grand View Research, Inc., the global augmented and virtual reality in healthcare market is expected to reach USD 5.1 billion by 2025 [29].

4. Regulation and legislation

Since this project is a proof of concept, no regulations were applied during its development. As mentioned before, the device used in this project, HoloLens™, cannot be considered a medical device per se. Moreover, as it is commercially available, it already holds the required European and Spanish certifications. Looking ahead, using the device in the operating theatre should not introduce any additional risks, since it does not interact with the patient.

As for the software used for segmentation and post-processing of the models, no regulations were taken into account either, since only animal data were used. However, if the project continues with human data, confidentiality will have to be taken into account: under the Spanish law "Ley Orgánica 15/1999, de 13 de diciembre, de Protección de Datos de Carácter Personal" (Organic Law 15/1999 of 13 December on the Protection of Personal Data), the identity of patients cannot be revealed. Thus, the CT scans will have to be stored anonymously. This law is referenced by UNE (Asociación Española de Normalización; the acronym stands for Una Norma Española), a Spanish organization dedicated to the development and dissemination of technical standards in Spain to ensure quality and safety in processes, products and services [30].

As will be explained in Section 10, the immediate future of this project involves continuing the development of the AR application. Further in the future, however, automatic segmentation software could be developed. Were this software to be commercialized, it would have to meet certain requirements and directives, starting with those of the European Union. The severity of the evaluation required to obtain approval for sale would depend on the classification of the medical device; in other words, the device classification would determine the applicable routes for establishing conformity with the European Directives. The MEDDEV guidance documents on the EU medical device Directives could be used to determine the classification of the device before placing it on the market. The classification criteria are set out in Annex IX of Directive 93/42/EEC on medical devices [31].

5. Conception Engineering

In this section, possible solutions for fulfilling the objectives are presented. After studying the feasibility of these options, a final solution is proposed.

5.1. Solutions studied

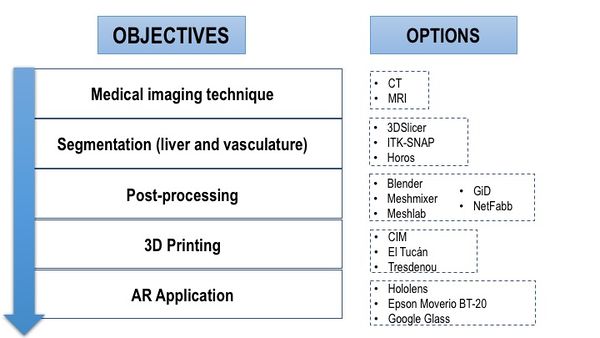

The project must fulfil the objectives set out in the Objectives section (2.1). Several options were considered for each objective (Figure 15).

| Figure 15. Objectives and options considered |

5.1.1. Medical imaging technique

For the medical imaging technique, two relevant options are discussed: Computed Tomography (CT) and Magnetic Resonance Imaging (MRI).

On the one hand, a CT scan (Figure 16, left) produces cross-sectional images of the body that can be combined into a 3D image. The scan gives detailed pictures of the tumour(s) and the surrounding tissues and organs, giving the treating physicians an accurate picture of the tumour and its location. On the other hand, MRI (Figure 16, right) uses a strong magnetic field and radio waves (not X-rays) to show the tumour(s) in great detail and to examine the blood supply to the liver. For both CT and MRI, the patient may also be given an injection of a contrast medium during the scan to produce a better image [32].

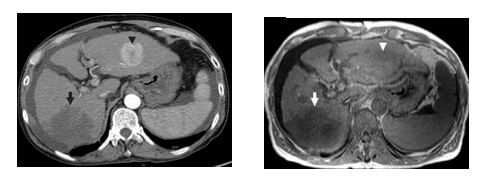

| Figure 16. Medical imaging techniques applied to the abdominal field (axial view): CT (left) and MRI (right) [29] |

5.1.2. Segmentation

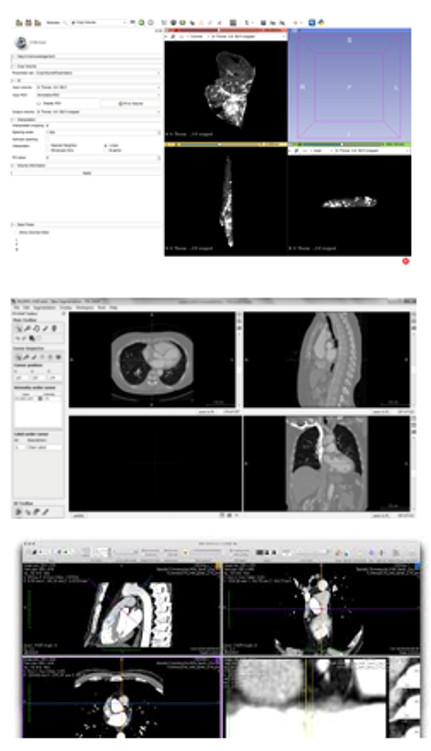

To carry out the segmentation, three relevant options were studied: 3D Slicer, ITK-Snap and Horos. Typical user interfaces of the three applications are shown in Figure 17.

| Figure 17. User interfaces (from top to bottom): 3D Slicer, ITK-Snap and Horos |

3D Slicer is a software package for visualization and image analysis [34]. It is not intended for clinical use, only for research purposes. It is a general-purpose package built on core modules plus additional extension modules, and it is provided free and open source. 3D Slicer reads a wide variety of medical data formats, including DICOM. The user interface is highly customisable, but it can be arranged in the typical axial (transverse), sagittal, coronal and 3D views. Moreover, it includes an embedded Python interpreter for direct interaction through the Python language. Distributions are available for all major platforms (Windows, Linux, OS X).

ITK-Snap [35] is a software application used to segment structures in 3D medical images. It provides semi-automatic segmentation, as well as manual delineation and image navigation. Its semi-automatic method is based on active contours implemented with the level set method: the image is first pre-processed, the object of interest is then covered by a ball-shaped active contour in the initialization phase, and this contour evolves to the shape of the object being segmented. The application can import several medical data formats, including DICOM. The user interface is straightforward and consists of the typical axial (transverse), sagittal, coronal and 3D views. The software is distributed for all major platforms: Windows, Linux and OS X.

CIMNE also has its own in-house code and technologies for image processing [59], including an efficient MATLAB-based methodology for the pre- and post-processing of medical images to generate computational meshes for numerical simulations [60].

Finally, Horos [36] is a free, open-source medical image viewer and image processing package, specifically designed for DICOM images on OS X. It is based on OsiriX (an image processing application) and other open-source medical imaging libraries.

5.1.3. Post-processing

Many software packages are available for preparing the models for 3D printing (Table 3).

| Software | Developer | Comment |
|---|---|---|
| Blender [37] | Blender Foundation | Blender is a free and open-source 3D animation suite. It supports the entire 3D pipeline (modeling, rigging, animation, simulation, rendering, compositing and motion tracking), as well as video editing and game creation. |
| Meshmixer [38] | Autodesk Inc. | Described as a "Swiss Army knife" for 3D meshes, Meshmixer is state-of-the-art software for editing triangle meshes. Besides mesh repair, it offers sculpting, hollowing, scaling, mirroring, cutting and building support structures, which makes it very useful for 3D printing. Meshmixer is free and available for Windows, OS X and Linux. |
| MeshLab [39] | ISTI-CNR research center | MeshLab is an open-source system for processing and editing 3D triangular meshes. It provides tools for editing, cleaning, healing, inspecting, rendering, texturing and converting meshes, as well as features for processing raw data produced by 3D digitization tools/devices and for preparing models for 3D printing. |
| GiD [40] | CIMNE | GiD is a CAD system that uses the widely adopted NURBS surfaces (trimmed or untrimmed) for geometry definition. It provides a complete set of tools for quick geometry definition and editing, including typical geometrical operations such as transformations, intersections and Boolean operations. |
| Netfabb [41] | Autodesk Inc. | Netfabb is a 3D print preparation tool offering a wide range of pre-print features, such as repairing meshes, adjusting wall thickness, reducing file size, and merging or subtracting parts. It also has powerful functions to create, analyse and adjust supports for 3D prints. |

5.1.4. 3D printing

Since the project's ultimate goal is to apply AR to the model, there was no need to print the models at high quality or in a specific material, as long as they had the correct shape and were made of a rigid material. Thus, the only criterion considered when choosing how to print the models was price. Several companies and centres in Barcelona were contacted to obtain printing quotes, and the final list was reduced to three options (Table 4).

| Centre | Address |
|---|---|
| CIM centre (UPC) | C/ Llorens i Artigas 12, 08028 Barcelona |
| El Tucán | C/ Berguedà 7, 08029 Barcelona |
| Tresdenou | C/ Pujades 184, 08005 Barcelona |

5.1.5. AR application

As mentioned before, augmented reality is applied here using wearable see-through technology rather than a video (head-up) screen. Accordingly, three head-mounted display (HMD) devices were considered (Figure 18). The specifications of each device are listed in Table 5 [6].

| Device | Specifications |
|---|---|
| Microsoft HoloLens™ [42] | Windows 10; proprietary Microsoft Holographic Processing Unit; Intel 32-bit processor; 2 MP camera; four microphones; 2 GB RAM; 64 GB flash; Bluetooth; Wi-Fi |
| Epson Moverio BT-20 [43] | 0.3 MP camera; Bluetooth 3.0; 802.11 b/g/n Wi-Fi; around 6 hours of battery life; 8 GB internal memory; built-in GPS, compass, gyroscope and accelerometer |
| Google Glass [44] | 5 MP camera with 720p video; Bluetooth; 802.11 b/g Wi-Fi; around 1 day of "typical use" on battery; 12 GB usable memory |

| Figure 18. HMD devices. From left to right: Microsoft HoloLens™ [42], Epson Moverio BT-20 [43] and Google Glass [44] |

5.2. Solution proposed

Table 6 summarizes the proposed solution. All decisions were based on three main aspects: the literature review (previous works), advice from expert advisors and costs.

| Stage | Solution |
|---|---|
| Imaging technique | Computed tomography |
| Segmentation software | 3D Slicer |
| Post-processing software | MeshLab, Meshmixer and GiD |
| 3D printing | El Tucán |
| AR application | HoloLens™ |

Computed tomography was chosen as the medical imaging technique for two main reasons. First, CT scans are widely used for detecting liver metastases and have been preferred over MRI in several previous studies [16, 45]. Second, a CT scan is generally cheaper than an MRI. It must also be added that the difference in resolution between MRI and CT was not taken into account, since this project is a proof of concept and high-quality images are not needed as long as the vasculature is visible for segmentation.

With regard to the segmentation software, the three packages considered are open source and include functions for semi-automatic segmentation. After downloading and trying all three, 3D Slicer was found to be more user-friendly than the other two. Moreover, far more tutorials are available online for 3D Slicer than for Horos and ITK-Snap, which would shorten the learning period. In the literature, 3D Slicer has also been shown to produce better semi-automatic segmentations than other packages, including ITK-Snap and OsiriX (on which Horos is based) [46]. Finally, the author of the present project contacted the author of [45], who also recommended 3D Slicer for the wide range of segmentation functions it offers.

As for post-processing, three different packages were chosen: GiD, MeshLab and Meshmixer. Blender and Netfabb were discarded for being less user-friendly. The three selected packages include functions for all the post-processing steps (see Section 6.3). However, some steps were easier to carry out in one package than in the others, so all three were kept and, for each post-processing step, the most suitable one was used.

The 3D printing decision was based solely on cost: El Tucán offered the lowest total price for printing the models (tumor, vasculature and liver parts) and was therefore chosen. Finally, the HMD chosen was HoloLens™, as it is currently considered the most suitable AR device for surgical practice [17].

6. Detail engineering

In this section, the proposed solution is presented in depth.

6.1. CT

The liver used for this project was bought at a local butcher shop and came from a lamb. The CT scan was performed at the Nuclear Medicine Department of Hospital Clínic, Barcelona (Figure 19), using a SOMATOM Definition AS scanner (Siemens). Because of the state of the animal's liver, a first scan attempt failed: the vasculature was indiscernible. The vasculature therefore had to be filled with a mixture of vaseline and Ultravist 300 mg/ml, an injectable iodine-containing contrast medium (a dye). The mixture was warmed to give it a semi-liquid texture, so that it would fill the whole vasculature. High-resolution CT image data of the liver (image size 512 × 512 px, slice thickness 0.6 mm) were saved in Digital Imaging and Communications in Medicine (DICOM) format.

| Figure 19. Liver CT scan. (A) Introduction of the mixture of vaseline and dye. (B, C) Liver position during the scan. (D) CT scan computer window |
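Threshold-based segmentation such as the one described in Section 6.2 is normally expressed in Hounsfield units (HU), whereas DICOM CT files store raw pixel values together with the Rescale Slope and Rescale Intercept attributes. The sketch below shows that conversion with NumPy. The defaults (slope 1, intercept -1024) are common values assumed for illustration, not values read from the lamb-liver scan, and `to_hounsfield` is a hypothetical helper name.

```python
import numpy as np

def to_hounsfield(stored, slope=1.0, intercept=-1024.0):
    """Map stored CT pixel values to Hounsfield units (HU).

    DICOM CT images carry Rescale Slope (0028,1053) and Rescale Intercept
    (0028,1052); HU = stored * slope + intercept. The defaults are typical
    values assumed here, NOT read from the actual liver scan.
    """
    return np.asarray(stored, dtype=np.float64) * slope + intercept

# Hypothetical stored values: air, water, soft tissue and a brighter voxel.
stored = np.array([0, 1024, 1064, 1324], dtype=np.uint16)
hu = to_hounsfield(stored)
print(hu.tolist())  # [-1024.0, 0.0, 40.0, 300.0]
```

In a real pipeline the slope and intercept would be read from each DICOM file's header rather than assumed.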

6.2. Segmentation

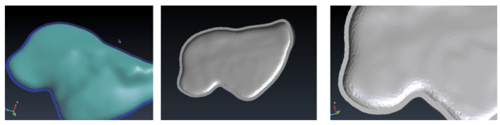

Before the actual segmentation of the images and post-processing of the segmented models, there was a period of self-learning. During this period, human DICOM images were downloaded from the internet and several liver segmentations were carried out to become familiar with the functions of the open-source software 3D Slicer. The resulting segmented models were also post-processed in several ways to become familiar with the post-processing software, and several tutorials were consulted [47-56].

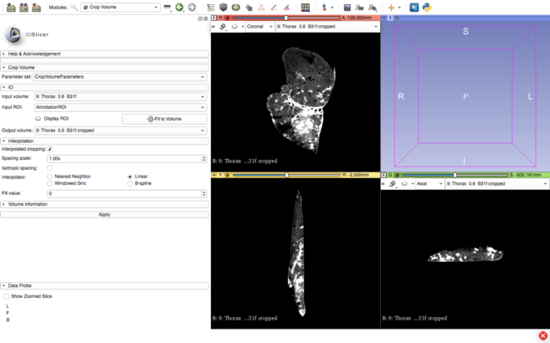

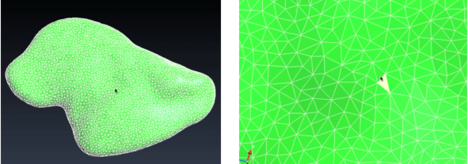

After the self-learning period, the actual segmentation of the animal's liver was carried out. The images were imported into 3D Slicer (Figure 20), and the anatomical structures (liver and vasculature) were segmented semi-automatically using the built-in functions of the software.

| Figure 20. 3D Slicer window with imported DICOM data |

A region-growing, threshold-based algorithm was applied first, after which the regions of interest (ROIs) were manually evaluated and corrected (Figure 21). The parameters of the segmentation algorithms were chosen manually. After segmentation, a 3D surface rendering was generated to verify the segmented structures, and the desired models were exported as stereolithography (STL) mesh files.

| Figure 21. 3D Slicer segmentation: vasculature (top) and liver (bottom) |
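The region-growing, threshold-based step can be sketched as follows. This is a generic textbook version (breadth-first growth from a seed voxel over 6-connected neighbours whose value lies inside a threshold window), not 3D Slicer's own implementation; the function name, the toy volume and the HU window are assumptions chosen for illustration.

```python
from collections import deque

import numpy as np

OFFSETS_6 = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def region_grow(volume, seed, low, high):
    """Boolean mask of voxels 6-connected to `seed` with low <= value <= high."""
    volume = np.asarray(volume)
    mask = np.zeros(volume.shape, dtype=bool)
    if not (low <= volume[seed] <= high):
        return mask  # seed outside the threshold window: nothing to grow
    mask[seed] = True
    queue = deque([seed])
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in OFFSETS_6:
            n = (z + dz, y + dy, x + dx)
            inside = all(0 <= n[i] < volume.shape[i] for i in range(3))
            if inside and not mask[n] and low <= volume[n] <= high:
                mask[n] = True
                queue.append(n)
    return mask

# Toy volume (hypothetical HU values): tissue at 40 HU, one bright "vessel" row,
# and a bright voxel that is NOT connected to the vessel.
vol = np.full((3, 5, 5), 40.0)
vol[1, 2, :] = 300.0
vol[0, 0, 0] = 300.0
vessel = region_grow(vol, seed=(1, 2, 0), low=200, high=600)
print(int(vessel.sum()), bool(vessel[0, 0, 0]))  # 5 False
```

The seed and the threshold window correspond to the parameters that, in the text, were chosen manually; connectivity is what keeps the isolated bright voxel out of the mask, which a plain threshold would not do.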

Note that a base was added to the vasculature model so that the marker required for the AR application could be placed on it.

6.3. Post-processing

The post-processing stage involved modifying the structures so that they could be 3D printed properly. The tumor was also created in this step.

6.3.1. Vasculature and tumor models

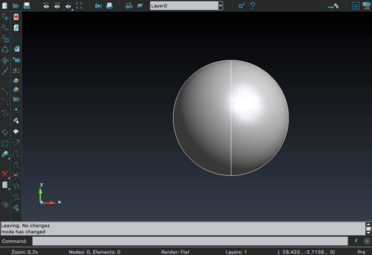

GiD 13.0.4 was used to create the tumor. For simplicity, the tumor was modeled as a sphere (Figure 22). Its radius (10 GiD units) was chosen as the largest that still fit inside the liver model.

| Figure 22. Creation of the spherical tumor with GiD |

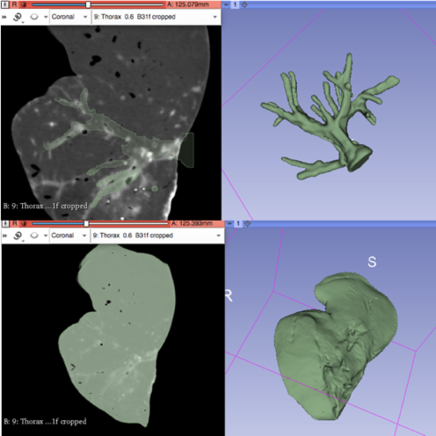

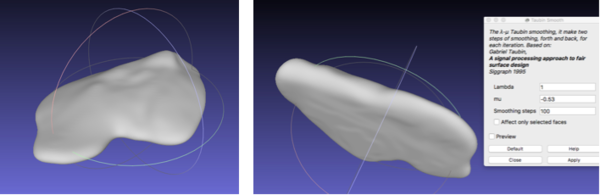

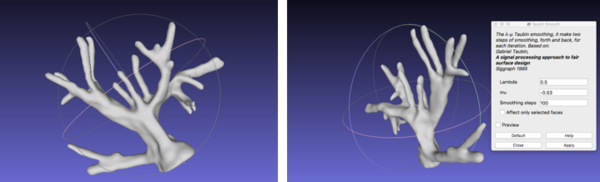

For the post-processing of the vasculature, the STL file was imported into MeshLab and filtered with the Taubin smoothing algorithm to remove segmentation artefacts [57] (Figure 23).

| Figure 23. Smoothed vasculature with Meshlab |
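Taubin smoothing alternates a shrinking Laplacian step (factor λ > 0) with an inflating one (factor μ < -λ), which removes high-frequency noise without the overall shrinkage of plain Laplacian smoothing [57]. Below is a minimal sketch with uniform ("umbrella") weights. The values λ = 0.5, μ = -0.53 and 10 iterations are illustrative assumptions, not the MeshLab settings used in this project, and the demo uses a noisy closed ring as a simple stand-in for a mesh.

```python
import numpy as np

def neighbours_from_faces(n_vertices, faces):
    """Vertex adjacency lists built from triangle faces."""
    nbrs = [set() for _ in range(n_vertices)]
    for a, b, c in faces:
        nbrs[a].update((b, c))
        nbrs[b].update((a, c))
        nbrs[c].update((a, b))
    return [sorted(s) for s in nbrs]

def laplacian_step(verts, nbrs, factor):
    """Move each vertex by `factor` times (mean of its neighbours - itself)."""
    delta = np.zeros_like(verts)
    for i, n in enumerate(nbrs):
        if n:  # isolated vertices stay put
            delta[i] = verts[n].mean(axis=0) - verts[i]
    return verts + factor * delta

def taubin_smooth(verts, nbrs, lam=0.5, mu=-0.53, iterations=10):
    """Alternate a shrinking step (lam > 0) and an inflating step (mu < -lam)."""
    v = np.asarray(verts, dtype=float)
    for _ in range(iterations):
        v = laplacian_step(v, nbrs, lam)
        v = laplacian_step(v, nbrs, mu)
    return v

# Demo: a closed ring (the 1D analogue of a mesh) with alternating radial noise.
n = 64
theta = 2 * np.pi * np.arange(n) / n
radius = 1 + 0.05 * (-1) ** np.arange(n)
ring = np.column_stack([radius * np.cos(theta), radius * np.sin(theta)])
ring_nbrs = [[(i - 1) % n, (i + 1) % n] for i in range(n)]

taubin = np.linalg.norm(taubin_smooth(ring, ring_nbrs), axis=1)
laplace = np.linalg.norm(taubin_smooth(ring, ring_nbrs, mu=0.5), axis=1)  # plain Laplacian
print(taubin.std(), taubin.mean(), laplace.mean())
```

For a triangle mesh, `neighbours_from_faces` supplies the adjacency. In the ring demo both runs remove the noise, but the Taubin result keeps a mean radius close to 1, while the plain Laplacian run (mu equal to lam) shrinks the ring noticeably.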

6.3.2. Liver model

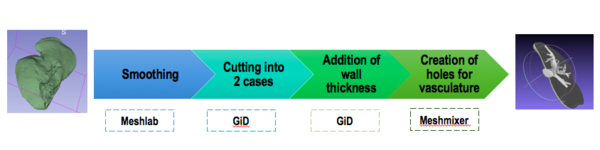

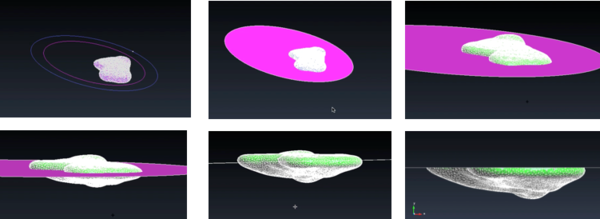

For the liver model, several post-processing steps had to be taken (Figure 24).

| Figure 24. Steps for post-processing of the liver model |

First, the same smoothing procedure as for the vasculature was applied: the Taubin filter was used to remove artefacts (Figure 25).

| Figure 25. Smoothed liver with Meshlab |

Next, the model had to be cut into two parts, so that the top part of the printed model could be removed to reveal the vasculature and the tumor location inside. GiD was used for this step, as it allows the model to be cut simply by converting it from a mesh into geometry (Figure 26). The geometrical domain was created as a series of layers, each one a separate part of the geometry, so that some layers could be viewed and manipulated independently of the others.

| Figure 26. Visualization of the model as geometry in GiD |

Next, a circular geometrical plane was created and placed at the centre of the liver model (Figure 27), and the "Intersection geometries" function was used to cut the liver model into two parts.

| Figure 27. Generation of the two parts of the liver model |
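Cutting the liver with a plane starts by deciding which side of the plane each part of the mesh lies on. The sketch below classifies triangles by the signed distance of their vertices to the plane. It is a simplified illustration, not GiD's "Intersection geometries" algorithm, and it omits the re-triangulation of the straddling faces along the cut line. The function name and the toy mesh are hypothetical.

```python
import numpy as np

def split_by_plane(verts, faces, point, normal):
    """Label each triangle as above, below or straddling a cutting plane.

    Signed distance of a vertex v to the plane: (v - point) . n / |n|.
    """
    v = np.asarray(verts, dtype=float)
    n = np.asarray(normal, dtype=float)
    dist = (v - np.asarray(point, dtype=float)) @ (n / np.linalg.norm(n))
    corner = dist[np.asarray(faces)]           # (F, 3) distances of each face's corners
    above = np.all(corner >= 0, axis=1)
    below = np.all(corner <= 0, axis=1) & ~above
    straddle = ~(above | below)                # these faces would be re-triangulated
    return above, below, straddle

# Toy mesh: one face under the plane z = 0, one above it and one crossing it.
verts = [[0, 0, -2], [1, 0, -2], [0, 1, -1], [0, 0, 1], [1, 0, 2], [0, 1, 2]]
faces = [(0, 1, 2), (3, 4, 5), (2, 3, 4)]
top, bottom, cut = split_by_plane(verts, faces, point=(0, 0, 0), normal=(0, 0, 1))
print(top.tolist(), bottom.tolist(), cut.tolist())  # [False, True, False] [True, False, False] [False, False, True]
```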

Once the two parts had been generated, their walls had to be given a thickness, since 3D printers usually require a minimum wall thickness of 1 mm. The thickness was created in GiD using the "Offset" function and set to 3 mm to ensure correct printing (Figure 28).

| Figure 28. Visualization of the 3mm wall thickness |
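The wall-thickness step can be illustrated by offsetting each vertex inward along its area-weighted vertex normal. This is a simplified sketch, not GiD's "Offset" function. It assumes outward-oriented faces, does not handle self-intersections in concave regions, and uses a toy octahedron instead of the liver mesh. The 1 mm check reflects the printer rule of thumb quoted above.

```python
import numpy as np

MIN_WALL_MM = 1.0  # rule of thumb quoted in the text for typical 3D printers

def vertex_normals(verts, faces):
    """Area-weighted vertex normals; faces must be consistently oriented outward."""
    v = np.asarray(verts, dtype=float)
    f = np.asarray(faces)
    face_n = np.cross(v[f[:, 1]] - v[f[:, 0]], v[f[:, 2]] - v[f[:, 0]])  # |face_n| = 2 * area
    normals = np.zeros_like(v)
    for k in range(3):
        np.add.at(normals, f[:, k], face_n)  # accumulate each face normal on its corners
    return normals / np.linalg.norm(normals, axis=1, keepdims=True)

def inner_offset(verts, faces, thickness_mm):
    """Move every vertex inward along its normal to build the inner wall."""
    if thickness_mm < MIN_WALL_MM:
        raise ValueError(f"wall thickness {thickness_mm} mm is below {MIN_WALL_MM} mm")
    return np.asarray(verts, dtype=float) - thickness_mm * vertex_normals(verts, faces)

# Octahedron with 10 mm "radius" and outward-facing triangles (toy stand-in for a liver half).
octa = 10.0 * np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0], [0, -1, 0], [0, 0, 1], [0, 0, -1]])
octa_faces = [(0, 2, 4), (0, 4, 3), (1, 4, 2), (1, 3, 4),
              (0, 5, 2), (0, 3, 5), (1, 2, 5), (1, 5, 3)]
inner = inner_offset(octa, octa_faces, thickness_mm=3.0)
print(np.linalg.norm(inner, axis=1))
```

A vertex-normal offset moves each vertex by exactly the requested distance, but on sharp corners the wall measured perpendicular to the faces ends up thinner (on this octahedron, 3/√3 ≈ 1.73 mm). On a smooth, finely tessellated surface such as a segmented liver, the difference is much smaller.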

Finally, Meshmixer was used to create the hole through which the main vessel exits the liver, using its "Boolean difference" function (Figure 29). This function subtracts one mesh from another, removing the region where the two overlap.

| Figure 29. Creation of the hole in one of the parts using Meshmixer |
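Meshmixer's Boolean difference operates directly on triangle meshes. The same set operation is easiest to see with signed distance functions (negative inside a shape, positive outside), where A minus B is max(d_A, -d_B). The sketch below uses this implicit formulation purely as an illustration. The sphere standing in for a liver part and the cylinder standing in for the vessel channel are hypothetical shapes.

```python
import numpy as np

def sphere_sdf(p, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return np.linalg.norm(np.asarray(p, float) - np.asarray(center, float), axis=-1) - radius

def cylinder_sdf(p, axis_point, axis_dir, radius):
    """Signed distance to an infinite cylinder of the given radius around an axis."""
    d = np.asarray(axis_dir, float)
    d = d / np.linalg.norm(d)
    rel = np.asarray(p, float) - np.asarray(axis_point, float)
    radial = rel - np.expand_dims(rel @ d, -1) * d  # remove the component along the axis
    return np.linalg.norm(radial, axis=-1) - radius

def difference(d_a, d_b):
    """Boolean difference A - B on signed distances: inside A and outside B."""
    return np.maximum(d_a, -d_b)

# Hypothetical shapes: a 10 mm sphere and a 2 mm-radius channel along z.
pts = np.array([[5.0, 0.0, 0.0], [0.0, 0.0, 0.0], [20.0, 0.0, 0.0]])
d = difference(sphere_sdf(pts, (0, 0, 0), 10.0), cylinder_sdf(pts, (0, 0, 0), (0, 0, 1), 2.0))
print((d < 0).tolist())  # [True, False, False]: solid, inside the hole, outside the part
```

The sign of the result is exact, but its magnitude is only a bound on the true distance, which is sufficient for inside/outside tests.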

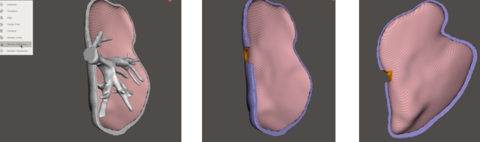

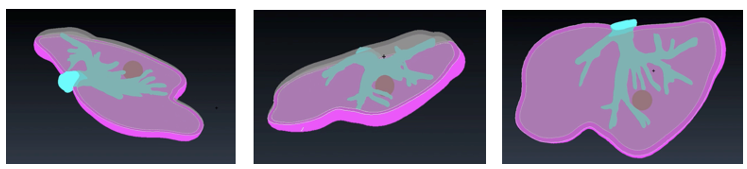

Figure 30 shows different views of the final assembly of the four models ready to be printed: tumor, vasculature, top liver part and bottom liver part.

| Figure 30. Final models |

6.4. 3D printing

All models were printed at El Tucán (Carrer del Berguedà, 7, 08029 Barcelona) on a CraftBot Plus 3D printer (CraftUnique Ltd.). This printer (Figure 31) has a resolution of 100 µm per layer and prints in PLA (polylactic acid). The estimated total printing time for the four models was 23 hours.

| Figure 31. CraftBot Plus 3D printer |

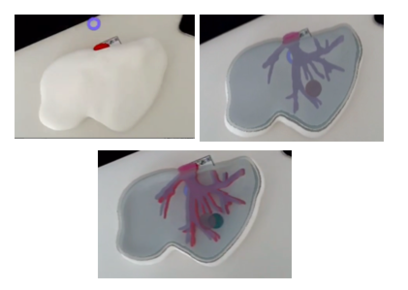

Figure 32 shows the result of the printed models.

| Figure 32. Printed models |

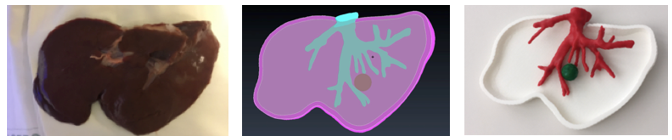

As observed in Figure 33, the shape of the printed liver corresponds well to that of the animal's liver, suggesting that the post-processing steps produced optimised anatomical representations with minimal loss of model precision, which benefits the HoloLens™ application.

| Figure 33. Comparison: animal's liver, segmented and printed model |

6.5. AR with HoloLens™

The HoloLens™ is, essentially, a head-mounted Windows 10 device. Development was therefore carried out on a Dell XPS 15 computer running Windows 10 Pro, with 32 GB of RAM and an NVIDIA GeForce GTX 1050 (Pascal) graphics card. Creating an augmented reality application for HoloLens™ requires two main tools: a game engine, which provides the software development environment, and a software development kit (SDK), which provides the set of tools for building the AR application.

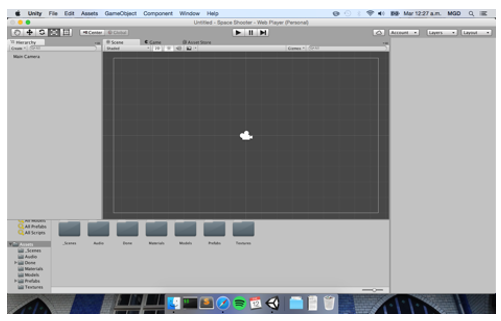

In this project, the game engine Unity (version 2017.1, Unity Technologies, San Francisco, CA, USA) was used (Figure 34). This game engine supports all the major AR/VR platforms and simplifies the deployment of applications to different platforms. Using Unity also simplifies HoloLens™ development because Microsoft provides the HoloToolkit repository, a collection of useful scripts and components for HoloLens™ development in Unity.

| Figure 34. Game engine Unity interface |

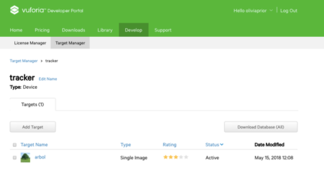

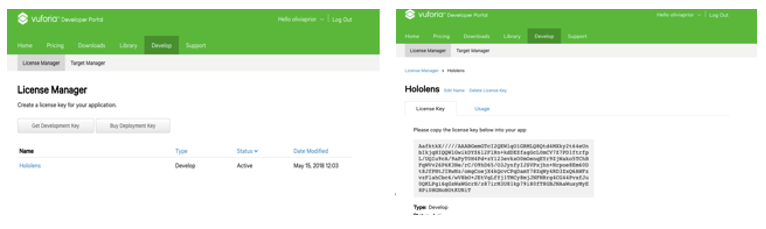

For the software development kit, the Vuforia SDK was used. Vuforia acts as a library for AR: once it is imported into Unity, it works as an asset, and all its AR resources, such as marker recognition and camera tracking, can be used. To use this SDK, a Vuforia account must be created and a license key obtained. Vuforia offers three types of license key: development, consumer and enterprise. The development key is free and intended for applications still under development, the consumer key is for applications ready to be published, and the enterprise key is for applications used by the employees of a company. A development license key was therefore obtained for this project. The generated license key, named "HoloLens™", was added to the Vuforia configuration of the Unity project (Figure 35).

| Figure 35. Acquisition of the License Key from Vuforia |

Once the license key had been obtained, a new target database could be created, in which the marker to be used later was stored (Figure 36). The marker acts as an image target: when the HoloLens™ recognizes the marker, the generated model is displayed. Markers are usually square 2D images printed on paper and placed on a flat surface. For this project, the marker shown in Figure 36 was created by combining two images: a QR code image taken from the internet and the UB logo.

| Figure 36. Marker used |
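Vuforia's detection and tracking algorithms are proprietary, but the geometric idea behind a planar image target is that the known corners of the marker map to the camera image through a 3×3 homography, from which the camera pose can then be recovered using the camera intrinsics. The sketch below estimates such a homography with the normalized direct linear transform (DLT) from four corner correspondences. The marker size and the "observed" corners are hypothetical, and a real tracker would detect many features and use robust estimation such as RANSAC.

```python
import numpy as np

def apply_h(H, pts):
    """Apply a 3x3 homography to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def _normalizing_transform(pts):
    """Similarity transform: centroid to the origin, mean distance sqrt(2)."""
    pts = np.asarray(pts, dtype=float)
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.mean(np.linalg.norm(pts - c, axis=1))
    return np.array([[s, 0, -s * c[0]], [0, s, -s * c[1]], [0, 0, 1]])

def homography_dlt(src, dst):
    """Estimate H such that dst ~ H @ src from >= 4 point pairs (normalized DLT)."""
    T_src, T_dst = _normalizing_transform(src), _normalizing_transform(dst)
    rows = []
    for (x, y), (u, v) in zip(apply_h(T_src, src), apply_h(T_dst, dst)):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H_norm = vt[-1].reshape(3, 3)              # null vector of the DLT system
    H = np.linalg.inv(T_dst) @ H_norm @ T_src  # undo the normalization
    return H / H[2, 2]

# Hypothetical 100 mm square marker and the image corners produced by a known H.
marker_mm = [(0, 0), (100, 0), (100, 100), (0, 100)]
H_true = np.array([[1.2, 0.1, 5.0], [0.05, 0.9, -3.0], [0.001, 0.002, 1.0]])
corners_px = apply_h(H_true, marker_mm)
H_est = homography_dlt(marker_mm, corners_px)
print(np.round(H_est, 6))
```

Normalizing the coordinates before the SVD is the usual way to keep the DLT well conditioned when pixel and millimetre values differ by orders of magnitude.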

The image target was uploaded to Vuforia, which uses a star rating to indicate the quality of an uploaded target, that is, how suitable it is for detection and stable tracking (Figure 37).

| Figure 37. Upload of sample marker onto Vuforia |

Next, the Vuforia extension was downloaded into the Unity project from the Asset Store. The Asset Store, created by Unity Technologies and community members, is a library of assets ranging from textures and models to example projects and plugins [58]. The most important component of the extension is the ARCamera, which acts as the camera view of the device for which the application is built. The ARCamera was the first component to be set up, by selecting the Digital Eyewear configuration.

Next, two further components were added. First, the MainCamera was added to the scene, since HoloLens™ needs it to track the user's head and for stereoscopic rendering: instead of rendering a single image, it renders two, one for each eye, so that the user sees the image in stereo. The MainCamera was positioned at the origin (head position). Second, the InputManager was added to handle gesture input, which is required for using HoloLens™. Finally, minor changes were made to the Build and Player settings to improve image quality and rendering performance.
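Stereoscopic rendering amounts to drawing the scene from two viewpoints separated by the interpupillary distance (IPD). HoloLens™ and Unity handle this internally (the device is calibrated to the user's IPD), so the following is only a geometric illustration. The 63 mm IPD and the function name are assumptions.

```python
import numpy as np

def eye_positions(head_pos, right_dir, ipd_m=0.063):
    """Return (left, right) eye centres: the head position shifted by half the
    interpupillary distance along the head's right vector. ipd_m is an assumed value."""
    r = np.asarray(right_dir, dtype=float)
    r = r / np.linalg.norm(r)
    head = np.asarray(head_pos, dtype=float)
    half = 0.5 * ipd_m * r
    return head - half, head + half

# MainCamera at the origin with +x pointing to the user's right (Unity convention).
left_eye, right_eye = eye_positions(head_pos=(0.0, 0.0, 0.0), right_dir=(1.0, 0.0, 0.0))
print(left_eye, right_eye)
```

Each eye then gets its own view of the same hologram, and the small horizontal disparity between the two images is what produces the perception of depth.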

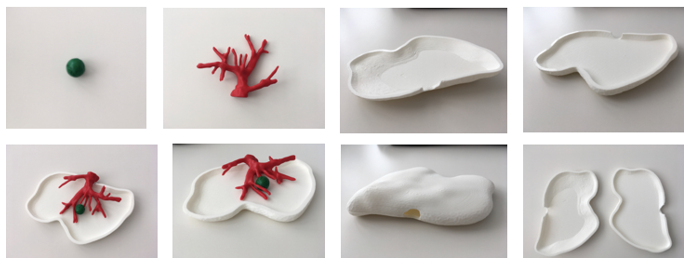

Once the setup was complete, the model and the marker were imported into Unity, and their scale and size were adjusted to ensure correct alignment with the real model (Figure 38).

| Figure 38. Different views from the marker and models in Unity |
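Correct scaling is what makes the virtual model line up with the printed one. As a sketch under stated assumptions: 3D Slicer works in millimetres, so exported STL coordinates are in mm, whereas Unity treats one unit as one metre. The vertices therefore need a factor of 1/1000, and any residual mismatch can be corrected with a uniform factor comparing the printed marker with its rendered size. Function names and numbers below are hypothetical.

```python
import numpy as np

MM_PER_UNIT = 1000.0  # assumption: STL in millimetres, 1 Unity unit = 1 metre

def stl_mm_to_unity(verts_mm):
    """Convert STL vertex coordinates from millimetres to Unity units (metres)."""
    return np.asarray(verts_mm, dtype=float) / MM_PER_UNIT

def scale_correction(printed_width_m, rendered_width_m):
    """Uniform factor that makes the virtual marker as wide as the printed one."""
    return printed_width_m / rendered_width_m

# Hypothetical numbers: a vertex 120 mm from the origin, and a marker printed
# 0.15 m wide that appears 0.10 m wide in the scene.
print(stl_mm_to_unity([[120.0, 0.0, 35.0]]))
print(scale_correction(0.15, 0.10))
```

Applying the same factor to both the marker and the model keeps their relative placement intact, which is what matters for registration.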

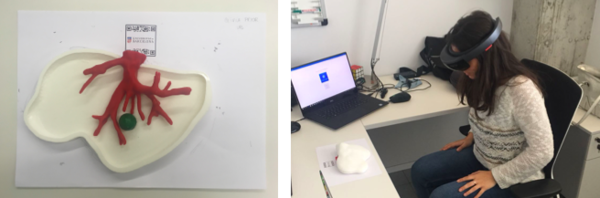

The configured project was then run on the HoloLens™ through the Holographic Remoting Player, which streams the content rendered by the computer running the Unity project to the device (both were connected to the same Wi-Fi network). The marker was printed and pasted onto a blank page, the printed model was placed in the same position as in the Unity project, and the HoloLens™ was tested (Figure 39).

| Figure 39. Testing the HoloLens™: model and marker (left), user (right) |

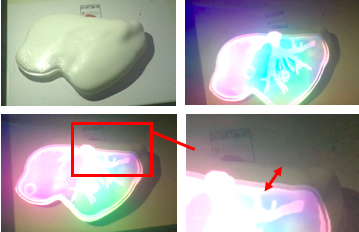

6.6. Results

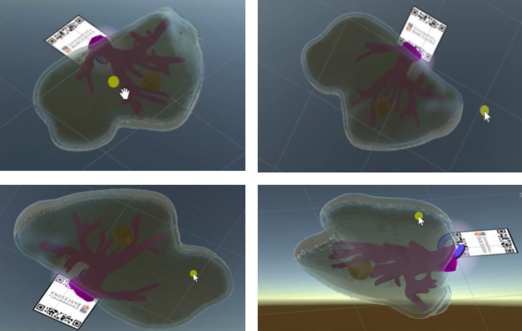

The device recognizes the marker, determines the position, scale and orientation of the model with respect to the camera, and displays it. The accuracy of the image registration was evaluated subjectively by checking the alignment of visible structures while increasing and decreasing the transparency of the superimposed virtual model. Figure 40 shows the result of the first trial.

| Figure 40. Results of the first trial |

Note that the low quality of the images is due to the fact that what the user sees through the HoloLens™ cannot be recorded in real time, since the device uses the same camera to observe the model and to record the user's view. Thus, there are only two ways to capture what the user sees:

1. Observe in real time (poor image resolution): place a mobile phone behind the HoloLens™ (Figures 40 and 41).

2. Observe with a slight delay (better image resolution): use the same camera that the device uses to observe the model (Figure 42).

Figure 40 shows that in the first trial there was a slight alignment error, caused by incorrect scaling of the marker and the model in Unity. After several trials this was corrected; the final results can be seen in Figure 41. The image shows that the AR system delivers good accuracy: the purple model completely covers the surface of the liver model, both with and without its top part. Since the virtual model and the printed model both derive from the CT scan of the same specimen, the virtual and real objects can be fully registered to each other. The display is also see-through (translucent), allowing the user to view both the real and virtual worlds.

| Figure 41. Final results |

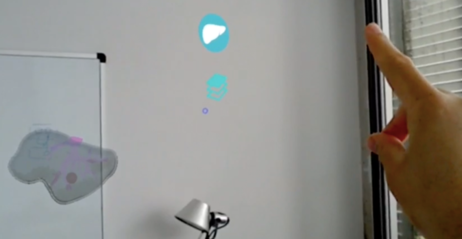

From Figure 41 it can be concluded that the AR setup is successful, as the location of the tumor and vasculature can be correctly determined. After the first trials, three further improvements were made to the Unity project:

1. Addition of two buttons: the user can "press" them with a gesture, enabling the visualization of the vasculature and/or liver.

2. Voice recognition: by saying "model", the user can choose whether or not to see the model superimposed onto the printed liver.

3. Extended tracking: extended tracking uses features of the environment to improve tracking performance and to sustain tracking even when the target is no longer in view. In this way, once the marker has been recognized, the user can keep seeing the model even without the marker in view. This is possible with the Vuforia SDK used in the project, which infers the target position by visually tracking the surrounding environment. For this purpose, Vuforia builds a map around the target and assumes that both the environment and the target are largely static.
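The interaction logic described above can be summarized as a small state model. The sketch below is hypothetical and written in Python for readability; the actual project was built in Unity with the Vuforia SDK, whose API is not reproduced here. The two buttons toggle the liver and the vasculature, the keyword "model" toggles the whole overlay, and extended tracking is approximated by keeping the last world-space pose once the marker has been detected, which relies on the same static-scene assumption that Vuforia makes.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical, simplified pose: model position in world coordinates (metres).
Pose = Tuple[float, float, float]


@dataclass
class OverlayState:
    show_model: bool = True            # toggled by the voice keyword "model"
    show_liver: bool = True            # toggled by the "liver" button
    show_vasculature: bool = True      # toggled by the "vasculature" button
    world_pose: Optional[Pose] = None  # last pose obtained from the marker

    def on_voice(self, keyword: str) -> None:
        """Toggle the whole overlay when the keyword "model" is recognized."""
        if keyword.strip().lower() == "model":
            self.show_model = not self.show_model

    def on_button(self, name: str) -> None:
        """Toggle one part of the model when its button is pressed."""
        if name == "liver":
            self.show_liver = not self.show_liver
        elif name == "vasculature":
            self.show_vasculature = not self.show_vasculature

    def on_frame(self, marker_pose: Optional[Pose]) -> Optional[Pose]:
        """Update the model pose for one frame.

        If the marker is detected, re-anchor the model to it. Otherwise keep the
        last world-space pose, which is only valid if the scene is static (the
        assumption extended tracking relies on).
        """
        if marker_pose is not None:
            self.world_pose = marker_pose
        return self.world_pose

    def visible_parts(self) -> List[str]:
        """Parts to render; nothing is shown until the marker has been seen once."""
        if not self.show_model or self.world_pose is None:
            return []
        parts = []
        if self.show_liver:
            parts.append("liver")
        if self.show_vasculature:
            parts.append("vasculature")
        return parts


# Usage: marker seen once, then lost; the overlay stays in place.
state = OverlayState()
state.on_frame((0.0, 0.0, 0.5))
state.on_frame(None)
state.on_button("vasculature")
print(state.visible_parts())
```

Keeping the pose in world coordinates, rather than relative to the marker, is what lets the overlay survive the loss of the marker; it is also why a moving or deforming target breaks the illusion.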

The buttons and the extended tracking can be seen in Figure 42, where the liver model is still displayed even though the marker is not present.

| Figure 42. Extended tracking and buttons |

Figure 43 shows the HoloLens™ display with and without the top part of the printed liver. Although the resolution is better than in Figures 40 and 41, the model and the printed liver appear slightly misaligned, because the same camera was used for observation and recording.

| Figure 43. HoloLens™ display recorded with the same camera as the one used in model observation |

To sum up, the results show that the location of the tumor and vasculature can be determined using the AR setup developed. These results are promising, as they suggest that AR could assist in the accurate identification and resection of tumors during open hepatic surgery.

7. Technical viability

This section analyses how viable and useful the proof of concept is. The advantages and problems of incorporating this idea in a hospital are explained in the form of a SWOT analysis (strengths, weaknesses, opportunities and threats).

7.1. SWOT analysis

Table 7 summarizes the SWOT analysis of the project.

Table 7. SWOT analysis of the project

| | Helpful | Harmful |
|---|---|---|
| Internal origin | Strengths:<br>1. No complex phases required in the creation of the system<br>2. Gesture and voice command recognition<br>3. Easy to use<br>4. Potential uses for the 3D model: surgical training and presurgical planning | Weaknesses:<br>1. Registration accuracy between real and virtual models<br>2. Static virtual model |
| External origin | Opportunities:<br>1. Surgeon not forced to look away from the patient<br>2. Increased surgical precision and patient safety | Threats:<br>1. Cost<br>2. Surgeon acceptance (HoloLens™ comfort) |

First, it should be borne in mind that this project is a proof of concept: it was carried out to test whether applying augmented reality during oncological surgery is feasible. In this respect, it was shown that the idea is a promising solution to current challenges faced by surgeons.

With reference to this last point, the external factor that gives this approach the opportunity to be implemented in a hospital is the current problem surgeons face when operating on metastatic livers. Currently, a surgeon has to look away from the patient repeatedly to check the patient's CT or MRI scan on a nearby monitor. Surgeons are also hampered by occlusions from instruments, smoke and blood. AR has the potential to solve these problems, which were the main motivation of the project. The approach developed would give surgeons more information during surgery, guiding them and allowing them to make better decisions during the procedure, and consequently increasing patient safety.

As for the strengths, the setup of the project does not require complex phases: once the user knows how to use the segmentation and post-processing software, the tasks are not difficult to carry out. Even building the AR application with Unity is fairly easy. Moreover, the HoloLens™ offer an extremely useful feature: they are hands-free. Since surgeons will likely have their hands full during a procedure, they simply need to issue voice commands to bring up different pieces of information. The approach also lets users move the visual information in front of them simply by moving their heads. Thus, the application can be considered easy to use. Furthermore, the virtual 3D model of the patient has several advantages of its own: it can be used to plan the surgical strategy before the procedure, helping to reduce morbidity by providing a better picture of the internal liver anatomy, and it can also be used for surgical training.

Yet, a few limitations remain to be solved. One of the main weaknesses of the project is accuracy, which was only assessed subjectively. In the present AR system, if the augmented content were superimposed in the wrong position, the clinician could be misled, causing a serious medical accident. For instance, an inaccurate tumor location could lead to the excision of an excessive amount of normal parenchyma in an effort to obtain a complete resection or, even worse, could result in positive margins. Thus, the registration accuracy between the real and virtual models should be measured more thoroughly in the future. A second important weakness is the use of a fixed virtual model. If the current approach were implemented, the virtual model would represent a static snapshot of the patient's anatomy. This would limit AR accuracy, since the liver is a highly deformable organ.
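A quantitative alternative to the subjective check is to compare the positions of corresponding landmarks (for example, fiducials identifiable on both the printed liver and the virtual model) and report each landmark's distance error together with their root-mean-square (RMS). The sketch below assumes such landmark pairs are already available in a common coordinate frame and in millimetres; how they would be measured is not addressed in this project, and the coordinates in the example are made up.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def landmark_errors(virtual: Sequence[Point], real: Sequence[Point]) -> List[float]:
    """Euclidean distance between each virtual landmark and its real counterpart."""
    if len(virtual) != len(real) or not virtual:
        raise ValueError("need the same, non-zero number of landmarks in both sets")
    return [math.dist(v, r) for v, r in zip(virtual, real)]


def rms(errors: Sequence[float]) -> float:
    """Root-mean-square of the per-landmark errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical landmark coordinates in millimetres (same frame for both sets).
virtual_pts = [(0.0, 0.0, 0.0), (40.0, 0.0, 0.0), (0.0, 30.0, 10.0)]
real_pts = [(3.0, 4.0, 0.0), (40.0, 0.0, 0.0), (0.0, 30.0, 12.0)]

errors = landmark_errors(virtual_pts, real_pts)
print("per-landmark error (mm):", [round(e, 2) for e in errors])
print(f"RMS error: {rms(errors):.2f} mm, max: {max(errors):.2f} mm")
```

Reporting the maximum alongside the RMS matters clinically, since a single badly registered landmark near the tumor margin is more relevant than a good average.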

Finally, external factors that could hinder the implementation of the approach must also be discussed. The first threat is cost: even though the approach is feasible and could improve many surgical procedures, the HoloLens™ are expensive, which could lead many hospitals to reject the idea. Second, some surgeons could oppose the idea because of the comfort of the headset. The HoloLens™ might be fine for an hour, but wearing them during five hours of intense surgery reportedly puts some strain on the wearer's head.

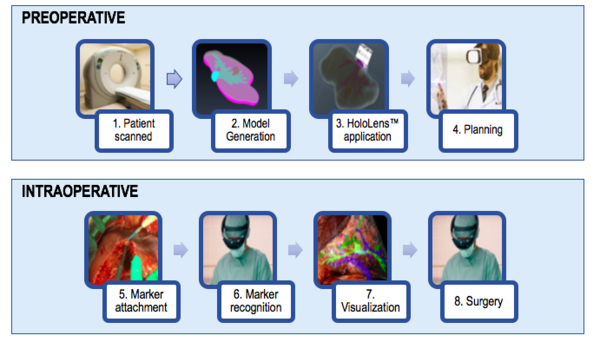

7.2. Clinical implementation

As previously mentioned, the current approach could be useful for surgical training as well as preoperative planning. However, once the system is improved and the virtual model is dynamic (providing accuracy even when the liver is deformed during surgery), it could be clinically tested. Figure 44 shows the workflow that would be followed.

In the preoperative phase, the patient would first be scanned one to three days before surgery. Second, the model would be generated from the images; this step includes the segmentation and the post-processing of the segmented model. Third, the model would be deployed onto the HoloLens™, and the display could be used to plan the surgery.

In the intraoperative phase, the surgeon would first attach the marker to the patient. Then, once the marker is recognized, the surgeon would be able to visualize the liver's vasculature and with that new information would proceed to perform a safer and more precise surgery.

| Figure 44. Workflow diagram showing the processes involved in AR assisted surgery |

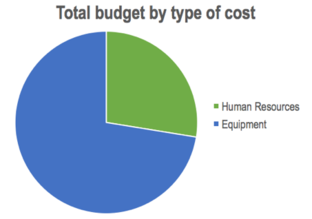

8. Project Budget

In this section, all the theoretical costs of the project described in this report are listed and classified in two different ways: by type of cost and by type of activity. The project initiation period (March 2017 to January 2018), during which several meetings, a trip and preliminary research took place, has not been taken into account in the total budget.

8.1. By type of cost

The following tables contain the costs for both human resources and equipment (Tables 8 and 9, respectively), as well as the total cost of the project (Table 10).

Table 8. Human resources

| Agent | Cost (€/hour) | Hours | Cost (€) |
|---|---|---|---|
| Biomedical engineering student | 4.5 | 300 | 1350 |
| Expert advisor in AR | 20 | 30 | 600 |
| Expert advisor in GiD | 35 | 10 | 350 |
| TOTAL | | | 2300 |

Table 9. Equipment

| Equipment | Cost (€) |
|---|---|
| Animal liver | 7 |
| 15-min CT scan with contrast | 228* |
| 3D printing of the models | 110 |
| 1 MacBook Air computer | 899 |
| 1 Dell XPS 15 computer | 1500 |
| HoloLens™ Development Edition | 3299 |
| Microsoft | Free (under UB identification) |
| 3D Slicer | Free |
| MeshLab | Free |
| MeshMixer | Free |
| GiD | Free (1-month trial) |
| Unity | Free |
| TOTAL | 6043 |

\* The total cost of the medical imaging technique includes 222€ for the use of the CT scan with contrast and 6€ for the vaseline needed for this project.

Table 10. Total budget by type of cost

| Section | Cost (€) |
|---|---|
| Human resources | 2300 |
| Equipment | 6043 |
| TOTAL | 8343 |

| Figure 45. Overall costs of the project by type of cost |

As can be observed, about 72% of the total cost was spent on equipment, leaving about 28% for human resources. This is mainly due to the high cost of the equipment (in particular, the HoloLens™ device) and the small number of people involved in the project.
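The totals and the split between the two types of cost can be checked with a few lines of Python. The figures are copied from the human resources and equipment tables; free software items are omitted because they add nothing to the sum.

```python
# Figures copied from the human resources and equipment tables.
human_resources = {  # agent: (rate in €/hour, hours)
    "Biomedical engineering student": (4.5, 300),
    "Expert advisor in AR": (20.0, 30),
    "Expert advisor in GiD": (35.0, 10),
}
equipment = {  # item: cost in € (free software omitted)
    "Animal liver": 7.0,
    "15-min CT scan with contrast": 228.0,
    "3D printing of the models": 110.0,
    "MacBook Air": 899.0,
    "Dell XPS 15": 1500.0,
    "HoloLens Development Edition": 3299.0,
}

hr_total = sum(rate * hours for rate, hours in human_resources.values())
eq_total = sum(equipment.values())
grand_total = hr_total + eq_total

print(f"human resources: {hr_total:.0f} € ({100 * hr_total / grand_total:.1f} %)")
print(f"equipment:       {eq_total:.0f} € ({100 * eq_total / grand_total:.1f} %)")
print(f"total:           {grand_total:.0f} €")
```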

8.2. By activity

It is also interesting to analyse the total budget with reference to the activities carried out. Tables 11-16 summarize the costs of the five activities related to the objectives (see section 2.1) as well as the writing of the thesis.

Table 11. A. Medical imaging technique

| Human resources and equipment used | Cost (€) |
|---|---|
| Biomedical engineering student (4 hours) | 18 |
| Animal liver | 7 |
| 15-min CT scan with contrast | 228 |
| TOTAL | 253 |

Table 12. B. Segmentation

| Human resources and equipment used | Cost (€) |
|---|---|
| Biomedical engineering student (100 hours) | 450 |
| 1 MacBook Air computer | 899 |
| 3D Slicer | Free |
| TOTAL | 1349 |

Table 13. C. Post-processing

| Human resources and equipment used | Cost (€) |
|---|---|
| Biomedical engineering student (80 hours) | 360 |
| Expert advisor in GiD (10 hours) | 350 |
| 1 MacBook Air computer | - |
| MeshLab | Free |
| MeshMixer | Free |
| GiD | Free |
| TOTAL | 710 |

Table 14. D. 3D Printing

| Human resources and equipment used | Cost (€) |
|---|---|
| Biomedical engineering student (3 hours) | 13.5 |
| 3D printing of the models | 110 |
| TOTAL | 123.5 |

Table 15. E. AR application

| Human resources and equipment used | Cost (€) |
|---|---|
| Biomedical engineering student (40 hours) | 180 |
| Expert advisor in AR (30 hours) | 600 |
| 1 Dell XPS 15 computer | 1500 |
| HoloLens™ Development Edition | 3299 |
| Unity | Free |
| TOTAL | 5579 |

Table 16. F. Writing of the thesis

| Human resources and equipment used | Cost (€) |
|---|---|
| Biomedical engineering student (73 hours) | 328.5 |
| 1 MacBook Air computer | - |
| TOTAL | 328.5 |

Note that the cost of the MacBook Air computer has not been included in activities C (post-processing) and F (writing of the thesis), as its price was already accounted for in activity B (segmentation) and the same computer was used for all three activities.
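As an illustration of the alternative, the shared MacBook Air could be apportioned to activities B, C and F in proportion to the student hours spent on each. This is a hypothetical allocation rule, not the one used in this budget (which charges the full price to B); it leaves the combined cost of the three activities unchanged and only moves money between them. Hours and costs are taken from the activity tables.

```python
MACBOOK_PRICE = 899.0  # € (equipment table)

# Student hours in the activities that used the MacBook Air.
hours = {"B. Segmentation": 100, "C. Post-processing": 80, "F. Writing of the thesis": 73}
# Cost of each of those activities excluding the laptop (€).
other_costs = {"B. Segmentation": 450.0, "C. Post-processing": 710.0, "F. Writing of the thesis": 328.5}

total_hours = sum(hours.values())

# Hypothetical rule: each activity pays for the laptop in proportion to its hours.
prorated = {
    name: other_costs[name] + MACBOOK_PRICE * h / total_hours
    for name, h in hours.items()
}

for name, cost in prorated.items():
    print(f"{name}: {cost:.2f} €")
print(f"sum: {sum(prorated.values()):.2f} €")
```

With this rule, activity B falls to roughly 805€ while C and F rise to roughly 994€ and 588€, respectively.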

Total budget by activity

| Activity | Cost (€) |
|---|---|
| A. Medical imaging technique | 253 |
| B. Segmentation | 1349 |
| C. Post-processing | 710 |
| D. 3D Printing | 123.5 |
| E. AR application | 5579 |
| F. Writing of the thesis | 328.5 |
| TOTAL | 8343 |

The 3D printing and thesis-writing activities are not visible in the pie chart, as their costs are very small compared to the others. Again, the AR application accounts for the vast majority of the budget, due to the HoloLens™ device and the Dell computer. However, the chart would change if part of the MacBook Air cost were also allocated to activities C and F: their slices would grow noticeably.
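The activity total and each activity's share of the budget can be recomputed from the table above. The short Python sketch below uses only those figures and shows how small some slices are: D, A and F each account for less than 4% of the total, while E accounts for about two thirds.

```python
# Figures copied from the total budget by activity table (€).
activity_costs = {
    "A. Medical imaging technique": 253.0,
    "B. Segmentation": 1349.0,
    "C. Post-processing": 710.0,
    "D. 3D Printing": 123.5,
    "E. AR application": 5579.0,
    "F. Writing of the thesis": 328.5,
}

total = sum(activity_costs.values())
shares = {name: 100 * cost / total for name, cost in activity_costs.items()}

print(f"total: {total:.1f} €")
# List activities from the largest to the smallest share.
for name, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:32s} {pct:5.1f} %")
```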

All in all, the total budget of the project does not exceed 10,000€ and therefore it cannot be considered an expensive project. This budget analysis is useful for identifying where costs should be reduced if the project is continued.

9. Discussion and future research

This section describes the degree of fulfilment of all the objectives, followed by the future continuation of the project.

9.1. Degree of fulfilment of the objectives

All five objectives (see section 1.2) have been achieved, although some posed more challenges than others. While trying to fulfil the first objective, "conduction of a medical imaging technique on an animal's liver", the first challenge arose: the liver's vasculature was not visible in the medical images, as there was no blood in the vessels. A solution was found (using a mixture of vaseline and contrast), and the objective was then fulfilled with no further problems.