
Weatherly et al. 2015a


Latest revision as of 15:06, 28 March 2017

Abstract

The purpose of this study was to evaluate two versions of a programmed instruction training program designed to teach undergraduate college students a goal-directed systems approach to analyzing organizational systems (Malott & Garcia, 1987). The first version was a paper-based programmed instruction module that had previously been shown to be effective at training basic knowledge of the concepts but ineffective at training their application. A computer-based programmed instruction (CBPI) version was created to improve application of these concepts and was tested through a series of three open-ended posttests, each providing more explicit prompts than the one before. The CBPI versions produced higher scores than the paper-based version on all three dependent variables. A nonparametric global test showed a standardized effect size of .86 (p < .001).

Abstract (Spanish version)

The purpose of this study was to evaluate two versions of a programmed instruction program designed to teach undergraduate students a goal-directed systems design approach to analyzing organizational systems (Malott & Garcia, 1987). The first version consisted of a paper-based programmed instruction module that had previously proven effective for teaching basic knowledge of the concepts but was inefficient for training the application of those concepts. A computerized programmed instruction version (CBPI) was created to improve application of the concepts; it was tested through a series of three open-response posttests that included increasingly explicit prompts on each successive test. The results showed better performance on all three dependent variables when the CBPI versions were used. A nonparametric global test showed a standardized effect size of .86 and a p-value < .001.

Keywords

Computer-based instruction; E-learning; Instructional design; Performance management; Programmed instruction; Student training

Keywords (Spanish version)

Computerized instruction; E-learning; Instructional design; Performance management; Programmed instruction; Student training

Since 2000, there have been numerous attempts to improve educational systems through policy initiatives such as the No Child Left Behind Act of 2001, school finance reform, and increased accountability for teacher performance (Superfine, 2014). Unfortunately, the quality and efficacy of these programs are often judged from lagging indicators of success, such as graduation rates, or from overgeneralized standardized test scores. Omitted are the key behaviors and leading indicators of success that are critical for tracking progress and systematically correcting performance deficits. Efforts to improve educational practices and outcomes often assume that money, curriculum materials, facilities, and regulation cause learning, without an understanding of the variables responsible for change (Cohen, Raudenbush, & Ball, 2003). However, resources alone have proven ineffective at maximizing learning and performance without an understanding of the contingencies surrounding learning and the application of sound behavior-analytic techniques.

In 1968, Skinner’s seminal text The Technology of Teaching described the need for a behavioral analysis of educational practices and the importance of applying the principles of behavior to improve educational systems. Decades of research and practice since then have built an empirically supported behavior-analytic technology that can be used at all levels of these systems, from students to teachers and administrators. One of the greatest contributions of behavior analysis in this area has been programmed instruction, a technology that serves as the cornerstone for blending the principles of behavior into steadily advancing computer-based educational technologies.

Programmed instruction (PI) is a teaching method based on behavior-analytic research and on principles of behavior such as feedback, prompting, shaping, and discrimination training (Jaehnig & Miller, 2007). Skinner (1963) described programming as “the construction of carefully arranged sequences of contingencies leading to the terminal performances which are the object of education” (p. 183). Computer-based instruction (CBI) is a continuously evolving technology that offers an efficient way to meet the behavior-analytic standards of programmed instruction. Computer-based or web-based training offers a wide array of stimulus-presentation modes and programming options that can improve instruction by making careful, specific programming possible (Clark, 1983, 1985, 1994; Kozma, 1994; Tudor & Bostow, 1991). Two keys to success are interactivity, a central component of CBI in which the user must make a response in order to advance through the program (Chou, 2003; Kritch & Bostow, 1998), and feedback delivered contingent on an overt response made during training (Bates, Holton, & Seyler, 1996; Fredrick & Hummel, 2004; Jaehnig & Miller, 2007). With rapid advances in computer technology and the widespread adoption of electronic resources in educational systems, computers are being used more than ever as an educational tool that can be effective at all levels of training.

Computer-based instruction has been shown to be effective across a number of training areas directly relevant to educational systems, including training classroom teachers to greet parents by name (Ingvarsson & Hanley, 2006) and training college students to use computer software (Karlsson & Chase, 1996). This technology has also been used effectively to teach the concepts and principles of behavior analysis (Miller & Malott, 1997; Munson & Crosbie, 1998; Tudor, 1995). Given the importance of integrating behavior-analytic tools into educational methods, it is valuable for teaching staff to understand the principles of behavior so they can apply them properly in their teaching.

Compared with traditional lecture-based instruction, programmed instruction has been reported to be more effective (Chatterjee & Basu, 1987; Daniel & Murdoch, 1968; Fernald & Jordan, 1991; Kulik, Cohen, & Ebeling, 1980) and to produce more rapid acquisition (Fernald & Jordan, 1991; Hughes & McNamara, 1961; Jamison, Suppes, & Wells, 1974; Kulik, Kulik, & Cohen, 1980). However, other comparisons of programmed instruction and traditional instruction have produced mixed results (Bhushan & Sharma, 1975; Kulik, Schwalb, & Kulik, 1982), possibly because of differences in the design of the instruction (Jaehnig & Miller, 2007). Several meta-analyses have indicated that programmed instruction continues to improve as programming technology advances (Hartley, 1978; Kulik, Cohen, & Ebeling, 1980; Kulik, Schwalb, & Kulik, 1982), but additional data are still needed in this area. The purpose of this study was to assess the efficacy of programmed instruction by experimentally comparing two types of modules: paper-based programmed instruction and computer-based programmed instruction (CBPI).

Method

Participants and setting

This study involved three groups of undergraduate college students enrolled in a behavior analysis course over three semesters. The first group contained 19 students, the second 32, and the third 45. The primary researcher provided all posttests and any instructional materials not included in the department’s course materials, which students purchased at the beginning of the course. Participants completed the instructional materials on their own time, in accordance with the course syllabus for the semester.

Goal-Directed Systems Design

The programmed instruction module used in the course before this study was a paper-based module that had previously been created, evaluated, and revised to teach the concept of goal-directed systems design (Malott & Garcia, 1987). Goal-directed systems design is a way of analyzing the structure of an organization in terms of the inputs, processes, and desired outputs found at every level of the system. The university had identified, as a curriculum goal, training college students to analyze and improve the systems they will eventually work in. Historically, students took a quiz after finishing the paper-based programmed instruction, with questions almost identical to those in the instructional module; the quiz consisted of one fill-in-the-blank question and 11 multiple-choice questions. Although quiz scores were reportedly high (approximately 92% accuracy across a six-year period), instructors also reported that students consistently had difficulty applying the concepts once they had completed the training module. For example, class discussions and the final course project centered on giving students a sample organizational scenario and expecting them to complete an Input-Process-Output model as taught in the paper-based programmed instruction. Data on generalization and response induction in programmed instruction and computer-based instruction are limited, including data on learners’ ability to vocalize and apply concepts taught textually (Ingvarsson & Hanley, 2006; Tudor & Bostow, 1991). For these reasons, this study prioritized evaluating the efficacy of paper-based versus computer-based programmed instruction on the generalized application of the principles taught in the modules.

Data Collection – Dependent Variables

For the purpose of this study, three posttests were given to assess application of the concepts taught in the training modules. All three tests presented the same problem and required students to apply goal-directed systems design concepts to it, with increasingly explicit prompts on each successive test. Because the paper-based programmed instruction had already proven successful at teaching basic knowledge of goal-directed systems design concepts, the primary dependent variables in the current study were the percentage of questions answered correctly on these three application posttests. Each student took the three posttests in succession after completing their assigned programmed instruction module.

Posttest scoring criteria

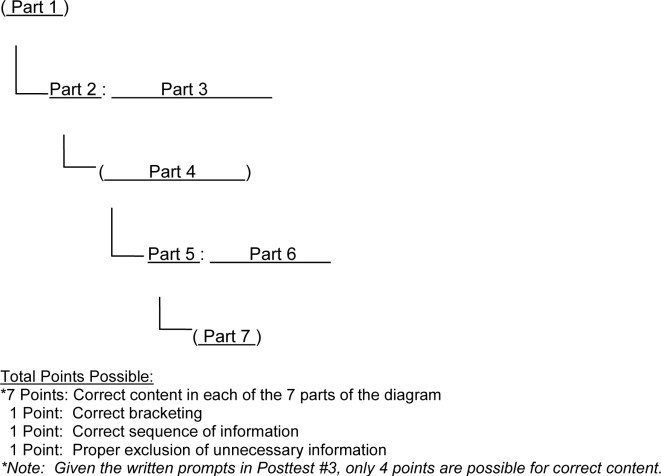

To score the first, second, and third posttests consistently, the researchers created grading criteria specific to those tests. The researchers and instructors selected four main areas to evaluate in order to assess the applied knowledge the students acquired from the programmed instruction:

1. Correct bracketing of the Input-Process-Output diagram;
2. Correct sequence of information provided in the diagram;
3. Proper exclusion of unnecessary information from the diagram (e.g., not placing “customers” as a main resource);
4. Correct information provided within the diagram.

Posttest #1 offered an opportunity to respond in all of the target areas by providing a written example of a process within an organization (a recycling plant) along with a blank space for students to draw their own Input-Process-Output diagram. On each test, one point was awarded for each component of the diagram the participant provided correctly, for a total of seven points when all components were provided (see Figure 1). One point was awarded for correct bracketing of the entire diagram, one point for the correct sequence of information throughout the diagram, and one point for proper exclusion of unnecessary information from the diagram.

Figure 1. Posttest Scoring Criteria
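The scoring rule above can be sketched as a small Python record. This is a minimal sketch, not the authors' scoring sheet: the class name `PosttestScore` and its fields are hypothetical, and the split between diagram-component points and the three structural points (bracketing, sequence, exclusion) is an assumption, because the text does not state whether the seven-point total already includes them.

```python
from dataclasses import dataclass


@dataclass
class PosttestScore:
    """Hypothetical record for one scored Input-Process-Output diagram."""

    components_correct: int   # diagram components matching the answer key
    components_total: int     # ASSUMED size of the answer key (not stated in the text)
    correct_bracketing: bool  # 1 point for bracketing the whole diagram correctly
    correct_sequence: bool    # 1 point for the correct order of information
    proper_exclusion: bool    # 1 point for leaving out unnecessary items (e.g., "customers")

    def __post_init__(self):
        if not 0 <= self.components_correct <= self.components_total:
            raise ValueError("components_correct must be between 0 and components_total")

    def points(self) -> int:
        # Booleans count as 0 or 1 when summed.
        structural = sum([self.correct_bracketing, self.correct_sequence, self.proper_exclusion])
        return self.components_correct + structural

    def max_points(self) -> int:
        return self.components_total + 3

    def percent(self) -> float:
        return 100 * self.points() / self.max_points()


# Example: 3 of 4 components correct, correct bracketing, wrong sequence, proper exclusion.
score = PosttestScore(3, 4, True, False, True)
print(f"{score.points()}/{score.max_points()} points = {score.percent():.1f}%")
```

With `components_total=4`, the maximum is seven points, which matches one reading of the text; the percentage is what would feed the dependent variable.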

Independent Variables

Paper-based programmed instruction

In the paper-based training condition, the undergraduate students were provided with only the paper-based programmed instruction. Before the current study, this instruction was the primary tool used to teach the goal-directed systems design unit. The 54-page module included written descriptions of concepts and applied examples, 20 practice activities distributed throughout the instruction that contained questions about these concepts, and one set of review questions at the end. Each question gave the user an opportunity to write a response (selecting a multiple-choice answer, drawing a diagram, or filling in a blank), which could then be checked by turning to the answer page immediately following each activity. In addition, 12 of the 20 activities provided detailed feedback along with the correct answer. In this condition, students were assigned the paper-based programmed instruction and given the three posttests during the next seminar. The primary researcher administered all tests.

Computer-based programmed instruction

The improvement conditions provided students with the computer-based programmed instruction (CBPI), which was created as a possible replacement for the paper-based instruction. The initial CBPI version contained the same examples and covered all content areas of the paper-based version. As in the paper-based condition, students were assigned the CBPI during the goal-directed systems design unit and given the three posttests during the next seminar. The primary researcher administered all tests.

The program included the training components considered essential to effective CBPI, such as interactivity and contingent feedback. It was created in Microsoft® PowerPoint® using text, graphics, and animations, and it required interactive responses from the user, providing opportunities for overt responses followed by feedback. Feedback was presented contingent on the response, an arrangement shown to be superior to programs in which feedback can be viewed either noncontingently or after an arbitrary response (Anderson, Kulhavy, & Andre, 1971, 1972). The interactive components consisted of multiple-choice questions; the CBPI also required constructed responses written by the students, which were turned in to the course instructor during the seminar following completion of the program. Participants received feedback on these constructed responses during the seminar. The CBPI also contained navigational aids that let participants access definitions, tables, diagrams, and any section they wished to review throughout the instruction.

The first version of CBPI contained 348 total frames (slides) that included training and activity components taken directly from the paper-based version. It was designed with a table of contents that allowed for navigation to various practice activities, training sections, and review aids such as definitions, charts, and diagrams. Each user was required to make an overt response on their computer in order to progress through the instruction. During each practice activity, the user was not offered the opportunity to advance to a subsequent question/section until the correct response was made.
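The practice-activity contingency described above (an overt response is required, feedback follows each response, and the learner cannot advance until the correct answer is given) can be sketched in Python. This is an illustration only: the original CBPI was built in PowerPoint, and `PracticeFrame`, `run_frame`, and the sample question are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PracticeFrame:
    """Hypothetical multiple-choice practice frame."""

    prompt: str
    options: list[str]
    correct: str
    feedback: str  # explanation shown after each response


def run_frame(frame, responses):
    """Give feedback after every overt response; advance only on a correct one.

    `responses` is an iterable of the learner's choices, in order.
    Returns (attempts_needed, feedback_log).
    """
    log = []
    for attempt, choice in enumerate(responses, start=1):
        if choice == frame.correct:
            log.append(f"Correct. {frame.feedback}")
            return attempt, log  # the learner may now advance
        log.append(f"Not quite: '{choice}' is incorrect. {frame.feedback} Try again.")
    # Responses ran out before a correct answer: the frame stays locked.
    raise RuntimeError("no correct response yet; the learner cannot advance")


frame = PracticeFrame(
    prompt="Which part of the model names what the system produces?",
    options=["Input", "Process", "Output"],
    correct="Output",
    feedback="Outputs are the products or services the system delivers.",
)
attempts, log = run_frame(frame, ["Input", "Output"])
print(attempts)  # 2
```

Raising an error when no correct response arrives models the locked frame; a real authoring tool would simply keep the frame on screen.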

After the first CBPI implementation, error analyses were conducted to assess questions in need of improvement, just as they had been when developing the paper-based version of the programmed instruction prior to the current study. Based on the data from the error analyses and feedback received from the users, a number of improvements were made prior to the next CBPI implementation. Detailed feedback was added to all of the practice questions that had limited feedback (an approximately 36% increase in the amount of feedback) and a review section was added at the end of the instruction. A revised applied activity was also added at the end of the program, along with a number of cosmetic improvements including the addition of more color and animation. The final CBPI version contained a total of 438 frames, which included 138 instructional frames, 260 practice activity frames, and 34 review frames along with the table of contents.
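A quick tally of the reported frame counts shows how the final version breaks down. The 6-frame remainder is an inference (the table of contents plus any other navigation frames), since the text does not give its size.

$$
\begin{aligned}
138 + 260 + 34 &= 432 \quad \text{(instructional, practice, and review frames)} \\
438 - 432 &= 6 \quad \text{(remaining frames, including the table of contents)} \\
438 - 348 &= 90 \quad \text{(frames added between versions, } 90/348 \approx 26\%\text{)}
\end{aligned}
$$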

Procedures and Design

The three programmed instruction modules were administered separately across three semesters. In the first semester, the group of students (N = 19) received the first version of the CBPI. In the second semester, the group (N = 32) received the previously developed paper-based programmed instruction, and in the third semester the group (N = 45) received the final version of the CBPI. A posttest-only design was used because of the open-ended nature of the three posttests, which were designed to assess proper application of goal-directed systems design concepts. The posttests were administered during the first class following completion of the respective training program. Once an instructional module was assigned to a semester, all students enrolled in the course that semester completed only that module. The same three posttests were used in all three semesters.

Results

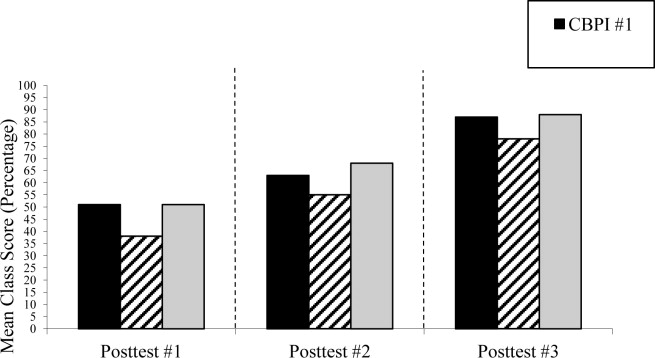

Compared with the paper-based programmed instruction, CBPI produced higher scores on all three posttests, and scores increased as more explicit prompts were added on each successive posttest (see Figure 2). The first version of the CBPI (N = 19) produced mean scores of 51% on posttest #1, 63% on posttest #2, and 87% on posttest #3. The following semester, students given the paper-based programmed instruction (N = 32) had mean scores of 38%, 55%, and 78% on posttests #1, #2, and #3, respectively. The final version of the CBPI, administered to students (N = 45) the next semester, produced mean scores of 51%, 68%, and 88%.

Figure 2. Mean differences in posttest scores across the three programmed-instruction conditions. Both CBPI versions produced higher scores on all three posttests than the paper-based programmed instruction, with performance increasing as more explicit prompts were added on each successive posttest.
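As a descriptive check on the means reported above, the two CBPI groups can be pooled by weighting each group's mean by its N. This is a sketch only: it is not the O'Brien global test the study used, the reported means are rounded to whole percentages (so the pooled values are approximate), and the dictionary layout and function name are hypothetical.

```python
# Reported mean posttest scores (%) and group sizes from the Results section.
groups = {
    "CBPI v1": {"n": 19, "means": (51, 63, 87)},
    "Paper-based": {"n": 32, "means": (38, 55, 78)},
    "CBPI final": {"n": 45, "means": (51, 68, 88)},
}


def pooled_means(names):
    """N-weighted mean of each of the three posttests across the named groups."""
    total_n = sum(groups[g]["n"] for g in names)
    return tuple(
        sum(groups[g]["n"] * groups[g]["means"][k] for g in names) / total_n
        for k in range(3)
    )


cbpi = pooled_means(["CBPI v1", "CBPI final"])
paper = groups["Paper-based"]["means"]
for k, (c, p) in enumerate(zip(cbpi, paper), start=1):
    print(f"Posttest #{k}: CBPI {c:.1f}% vs paper {p}% (difference {c - p:.1f} points)")
```

With the reported values, the pooled CBPI means come to about 51.0%, 66.5%, and 87.7%, roughly 13.0, 11.5, and 9.7 percentage points above the paper-based group on posttests #1, #2, and #3.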

Our primary objectives in this study were to continue improving training in goal-directed systems design concepts and to place greater emphasis on application of these concepts, as tested by posttests #1, #2, and #3. Given this goal, a nonparametric global test (O’Brien, 1984) was used to compare the three dependent variables (posttests #1, #2, and #3) between the two semesters that used computer-based versions of the programmed instruction and the semester that used the paper-based version. The global test showed a statistically significant effect favoring the CBPI groups over the paper-based group, with a standardized effect size of .86 and p < .001.

Interobserver agreement (IOA) was assessed for all dependent variables using the point-by-point agreement formula: # of agreements / (# of agreements + # of disagreements) × 100%. IOA was evaluated for 53% of all posttest evaluations across the three implementations and yielded 92% agreement. In addition, scores on the quizzes used to assess basic knowledge of the material as part of the course requirements remained at approximately 92% accuracy throughout the study, with the final version of the CBPI producing 93% accuracy.
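The two analyses in this paragraph can be sketched in Python using only the standard library. `point_by_point_ioa` applies the agreement formula to two observers' item-by-item scores. `obrien_rank_sum_t` sketches the rank-sum procedure described by O'Brien (1984), under the assumption that it proceeds as follows: rank each endpoint (here, each posttest) across the pooled sample, sum each student's ranks, and compare the rank sums between groups with a pooled-variance two-sample t statistic. The function names and toy data are hypothetical; this is not the authors' analysis code, and because the text does not say how the standardized effect size of .86 was computed, the sketch does not attempt to reproduce it.

```python
import math
from statistics import mean, variance


def point_by_point_ioa(observer_a, observer_b):
    """Agreements / (agreements + disagreements) x 100 over paired item scores."""
    if len(observer_a) != len(observer_b) or not observer_a:
        raise ValueError("need two equal-length, non-empty score lists")
    agreements = sum(a == b for a, b in zip(observer_a, observer_b))
    disagreements = len(observer_a) - agreements
    return agreements / (agreements + disagreements) * 100


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def obrien_rank_sum_t(group_a, group_b):
    """Rank each endpoint over the pooled sample, sum ranks per subject,
    then compare the rank sums with a pooled-variance t statistic.
    Each subject is a tuple with one score per endpoint. Returns (t, df)."""
    pooled = list(group_a) + list(group_b)
    sums = [0.0] * len(pooled)
    for e in range(len(pooled[0])):
        for s, r in enumerate(average_ranks([subj[e] for subj in pooled])):
            sums[s] += r
    x, y = sums[:len(group_a)], sums[len(group_a):]
    nx, ny = len(x), len(y)
    sp2 = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / math.sqrt(sp2 * (1 / nx + 1 / ny))
    return t, nx + ny - 2


# Toy data: (posttest #1, #2, #3) scores for two students per group.
toy_cbpi = [(3, 4, 5), (2, 5, 6)]
toy_paper = [(1, 1, 2), (0, 2, 1)]
t, df = obrien_rank_sum_t(toy_cbpi, toy_paper)
print(round(t, 2), df)
print(point_by_point_ioa([1, 0, 1, 1], [1, 1, 1, 1]))  # 75.0
```

A two-sided p-value would come from the t distribution with the returned degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available. Ranking each posttest separately keeps the three endpoints on a common scale before they are combined.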

Discussion

The purpose of the current study was to evaluate the effectiveness of a computer-based programmed instruction (CBPI) version of a previously developed paper-based programmed instruction module that taught undergraduate students goal-directed systems design. The 54-page paper-based module was used to create a CBPI version with 438 frames that provided approximately 90 minutes of training. The results showed that converting a well-researched, well-designed paper-based programmed instruction module into CBPI produced a more effective training program, delivered through an increasingly popular and easily accessed computer-based medium. The study also showed that CBPI can be designed to improve the generalized application of the concepts it teaches, an important element of any training program.

Student comments and survey data showed a preference for computer-based instruction; students particularly commented on the feedback, entertainment, and educational value of the CBPI, and on the stepwise delivery of content in both the paper-based and computer-based versions of programmed instruction. Based on this feedback, future work should evaluate shorter versions of the instruction or effective ways to divide the training components so that learners can take breaks. Future research could also assess the degree of transfer to applied settings produced by CBPI; given the limited data in this area and the high social validity of this type of behavior-analytic technology, this could be a valuable contribution to the field. One constraint of PowerPoint® was its limited data-collection capability. Future CBPI programs should consider a platform that supports tools that automatically record user responses, such as SCORM (Sharable Content Object Reference Model) or the Tin Can API (now called the Experience API, or xAPI). An authoring tool that includes this feature, along with the other important features of programmed instruction, could provide an efficient platform for computer-based instruction.
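As a sketch of what automatic response recording could look like, the snippet below builds an xAPI (Tin Can) statement for one learner answer. The statement fields (`actor`, `verb`, `object`, `result`, `timestamp`) and the ADL "answered" verb IRI follow the xAPI specification; the learner address, the activity IRI, and the helper name `answered_statement` are hypothetical, and sending the statement to a Learning Record Store is left out.

```python
import json
from datetime import datetime, timezone


def answered_statement(learner_email, activity_iri, response, success):
    """Build an xAPI statement recording one learner response (hypothetical helper)."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        # Hypothetical IRI identifying one CBPI practice frame.
        "object": {"objectType": "Activity", "id": activity_iri},
        "result": {"response": response, "success": success},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


stmt = answered_statement(
    "student@example.edu", "https://example.edu/cbpi/gdsd/frame/42", "Output", True
)
print(json.dumps(stmt, indent=2))
```

Recording each response this way would give the error-analysis data that the PowerPoint version could not collect automatically.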

With continued advances in computer technologies and authoring tools, computer-based instruction can greatly benefit settings with limited resources and large receiving systems. Computer-based instruction provides an ideal platform for meeting behavior-analytic standards, allowing for careful manipulation of instructional antecedents, high rates of responding, contingent consequences, and a range of data-collection and performance-tracking options. The improved student performance produced by the CBPI in the current study suggests that CBPI is an efficient and effective alternative to traditional training methods. Given the trends in web-based learning and the ever-present need for effective training solutions, CBPI can be a valuable tool across many systems and areas of training and should continue to be examined and improved for future applications.

References

- Anderson et al., 1971 R.C. Anderson, R.W. Kulhavy, T. Andre; Feedback procedures in programmed instruction; Journal of Educational Psychology, 62 (1971), pp. 148–156

- Anderson et al., 1972 R.C. Anderson, R.W. Kulhavy, T. Andre; Conditions under which feedback facilitates learning from programmed lessons; Journal of Educational Psychology, 63 (1972), pp. 186–188

- Bates et al., 1996 R.A. Bates, E.F. Holton, D.L. Seyler; Principles of CBI design and the adult learner: The need for further research; Performance Improvement Quarterly, 9 (1996), pp. 3–24

- Bhushan and Sharma, 1975 A. Bhushan, R.D. Sharma; Effect of three instructional strategies on the performance of B.Ed. student-teachers of different intelligence levels; Indian Educational Review, 10 (1975), pp. 24–29

- Chatterjee and Basu, 1987 S. Chatterjee, M.K. Basu; Effectiveness of a paradigm of programmed instruction; Indian Psychology Review, 32 (1987), pp. 10–14

- Chou, 2003 C. Chou; Interactivity and interactive functions in web-based learning systems: A technical framework for designers; British Journal of Educational Technology, 34 (2003), pp. 265–279

- Clark, 1983 R.E. Clark; Reconsidering research on learning from media; Review of Educational Research, 53 (1983), pp. 445–459

- Clark, 1985 R.E. Clark; Evidence for confounding in computer-based instruction studies: Analyzing the meta-analyses; Educational Communication and Technology Journal, 33 (1985), pp. 249–262

- Clark, 1994 R.E. Clark; Media will never influence learning; Educational Technology Research and Development, 42 (1994), pp. 21–29

- Cohen et al., 2003 D.K. Cohen, S.W. Raudenbush, D.L. Ball; Resources, instruction, and research; Educational Evaluation and Policy Analysis, 25 (2003), pp. 119–142

- Daniel and Murdoch, 1968 W.J. Daniel, P. Murdoch; Effectiveness of learning from a programmed text covering the same material; Journal of Educational Psychology, 59 (1968), pp. 425–431

- Fernald and Jordan, 1991 P.S. Fernald, E.A. Jordan; Programmed instruction versus standard text in introductory psychology; Teaching of Psychology, 18 (1991), pp. 205–211

- Fredrick and Hummel, 2004 L.D. Fredrick, J.H. Hummel; Reviewing the outcomes and principles of effective instruction; D.J. Moran, R.W. Malott (Eds.), Evidence-based educational methods, Elsevier Academic Press, San Diego (2004), pp. 9–22

- Hartley, 1978 S.S. Hartley; Meta-analysis of the effects of individually-paced instruction in mathematics; Dissertation Abstracts International, 38 (1978), p. 4003

- Hughes and McNamara, 1961 J.L. Hughes, W. McNamara; A comparative study of programmed and conventional instruction in industry; Journal of Applied Psychology, 45 (1961), pp. 225–231

- Ingvarsson and Hanley, 2006 E.T. Ingvarsson, G.P. Hanley; An evaluation of computer-based programmed instruction for promoting teachers’ greetings of parents by name; Journal of Applied Behavior Analysis, 39 (2006), pp. 203–214

- Jamison et al., 1974 D. Jamison, P. Suppes, S. Wells; The effectiveness of alternative instructional media: A survey; Review of Educational Research, 44 (1974), pp. 1–67

- Jaehnig and Miller, 2007 W. Jaehnig, M.L. Miller; Feedback types in programmed instruction: A systematic review; The Psychological Record, 57 (2007), pp. 219–232

- Karlsson and Chase, 1996 T. Karlsson, P.N. Chase; A comparison of three prompting methods for training software use; Journal of Organizational Behavior Management, 16 (1996), pp. 27–44

- Kritch and Bostow, 1998 K.M. Kritch, D.E. Bostow; Degree of constructed-response interaction in computer-based programmed instruction; Journal of Applied Behavior Analysis, 31 (1998), pp. 387–398

- Kulik et al., 1980 J.A. Kulik, P.A. Cohen, B.J. Ebeling; Effectiveness of programmed instruction in higher education: A meta-analysis of findings; Educational Evaluation and Policy Analysis, 2 (1980), pp. 51–64

- Kulik et al., 1980 C.L.C. Kulik, J.A. Kulik, P.A. Cohen; Instructional technology and college teaching; Teaching of Psychology, 7 (1980), pp. 199–205

- Kulik et al., 1982 C.C. Kulik, B.J. Schwalb, J.A. Kulik; Programmed instruction in secondary education: A meta-analysis of findings; Journal of Educational Research, 75 (1982), pp. 133–138

- Kozma, 1994 R.B. Kozma; Will media influence learning? Reframing the debate; Educational Technology Research and Development, 42 (1994), pp. 7–19

- Malott and Garcia, 1987 R.W. Malott, M.E. Garcia; A goal-directed model for the design of human performance systems; Journal of Organizational Behavior Management, 9 (1987), pp. 125–129

- Miller and Malott, 1997 M.L. Miller, R.W. Malott; The importance of overt responding in programmed instruction even with added incentives for learning; Journal of Behavioral Education, 7 (1997), pp. 497–503

- Munson and Crosbie, 1998 K.J. Munson, J. Crosbie; Effects of response cost in computerized programmed instruction; The Psychological Record, 48 (1998), pp. 233–250

- O’Brien, 1984 P.C. O’Brien; Procedures for comparing samples with multiple endpoints; Biometrics, 40 (1984), pp. 1079–1087

- Skinner, 1963 B.F. Skinner; Reflections on a decade of teaching machines; Teachers College Record, 65 (1963), pp. 168–177

- Skinner, 1968 B.F. Skinner; The technology of teaching, Appleton-Century-Crofts, New York (1968)

- Superfine, 2014 B.M. Superfine; The promises and pitfalls of teacher evaluation and accountability reform; Richmond Journal of Law and the Public Interest, 17 (2014), pp. 591–622

- Tudor, 1995 R.M. Tudor; Isolating the effects of active responding in computer-based instruction; Journal of Applied Behavior Analysis, 28 (1995), pp. 343–344

- Tudor and Bostow, 1991 R.M. Tudor, D.E. Bostow; Computer-programmed instruction: The relation of required interaction to practical applications; Journal of Applied Behavior Analysis, 24 (1991), pp. 361–368

Document information

Published on 28/03/17

Licence: Other
