| (31 intermediate revisions by 3 users not shown) | |||

| Line 6: | Line 6: | ||

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | ||

| − | LIN Zehong<sup>1,*</sup> | + | LIN Zehong<sup>1,*</sup> WANG Shuai<sup>2</sup> Zhang Xiaoxian<sup>3</sup> Zhang Guijie<sup>2</sup> ZHOU Xueyan<sup>1</sup></div> |

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | ||

| Line 17: | Line 17: | ||

<span style="text-align: center; font-size: 75%;">3.</span> <span style="text-align: center; font-size: 75%;">School of Computer Technology and Engineering, Changchun Institute of Technology, Changchun, China</span></div> | <span style="text-align: center; font-size: 75%;">3.</span> <span style="text-align: center; font-size: 75%;">School of Computer Technology and Engineering, Changchun Institute of Technology, Changchun, China</span></div> | ||

| − | <span id='OLE_LINK11'></span> | + | <span id='OLE_LINK11'></span>--> |

| − | --> | + | ==Abstract== |

| − | + | The influence of users on an online Forum should not be determined simply by the global network topology but rather by the corresponding local network, taking into account each user’s active range and semantic relations. Current analysis methods mostly focus on urgent topics while ignoring persistent topics, even though persistent topics often have important implications for public opinion analysis. Therefore, this paper explores semantics-based key person analysis in persistent topics on online Forum. First, the interaction data are partitioned into subsets by month, and Latent Dirichlet Allocation (LDA) and a filtering strategy are used to identify the topics in each partition. Then, each topic is associated with topics from the adjacent time slice that meet a high similarity degree criterion. On this basis, persistent topics are defined as those that exist for a sufficient number of periods. Following this, the paper provides the commitment function update criteria for the persistent topic social network (PTSN) based on semantics, and the sentiment weighted node position (SWNP) to identify the key person who has the most influence in the field. Finally, emotional tendency analysis is applied to correct the results. Experiments on real data sets validate the effectiveness of these methods. | |

| − | =1. | + | '''Keywords:''' Online Forum, persistent topic, key person, social network analysis |

| + | |||

| + | ==1. Introduction== | ||

As an electronic information service system on the Internet, an online Forum provides a public electronic forum on which each user can post messages and put forward views [1]. Online Forum gathers many users who are willing to share their experiences, information and ideas, and a user can browse others’ information and publish his/her own to form a thread through a unique registration ID [2]. | As an electronic information service system on the Internet, an online Forum provides a public electronic forum on which each user can post messages and put forward views [1]. Online Forum gathers many users who are willing to share their experiences, information and ideas, and a user can browse others’ information and publish his/her own to form a thread through a unique registration ID [2]. | ||

| − | + | Social network analysis (SNA) [3] can help us obtain the implicit characteristics of the users and information dissemination in a numerical manner. The forum topics are mainly divided into two categories: (1) emergency topics, which are characterized by a short duration with intense discussion; and (2) persistent topics, characterized by long duration and typically closely related to one’s livelihood. Most studies have focused on the former, such as researching the discovery and prediction of online Forum hot topics and false information dissemination after emergencies [4]. There are two core issues that must be solved to identify key users in persistent livelihood topics: (1) extraction of persistent topics and (2) the identification of key users. To solve the first issue, we combine the time dimension and apply the latent Dirichlet allocation (LDA) topic model and the short text similarity assessment model to discover the persistent topics [5]. To solve the second, SNA provides a series of node metrics (e.g., centrality, prestige, trust and connectivity). The node position assessment, proposed by Przemysław Kazienko, is a very effective method for analysis, but it is more suitable for the global network and ignores semantic factors. Therefore, we propose the sentiment weighted node position algorithm (SWNP) and apply it to the persistent topic network to rank the users’ influence. | |

| − | The algorithm must solve several problems. First, it must ensure that the extraction topic is related to the clustering results, so the algorithm uses the LDA model and the short text similarity assessment model for screening and gathering related posts while adopting adjacent time slice cross matching to ensure the topic sustainability on the timeline. After cataloging the posts, corresponding participants and replies relations, the persistent topic social network can be built and expressed as (PTSN= | + | The algorithm must solve several problems. First, it must ensure that the extracted topics are related to the clustering results, so the algorithm uses the LDA model and the short text similarity assessment model for screening and gathering related posts while adopting adjacent time slice cross matching to ensure topic continuity on the timeline. After cataloging the posts, the corresponding participants and the reply relations, the persistent topic social network can be built and expressed as <math display="inline">PTSN=(V, E)</math>, where <math display="inline">V</math> and <math display="inline">E</math> represent the nodes and their relationships in the local network, respectively. It then identifies the critical nodes in the local network, which have the greatest amount of influence on the specific topic and other users. After attempting different methods on real three-year online Forum data, the SWNP is provided and compared to the typical method. |

| − | The rest of the paper is organized as follows. We briefly review related work in | + | The rest of the paper is organized as follows. We briefly review related work in Section 2. We then present an overview of LDA and the short text similarity assessment model in Section 3. In Section 4, we propose persistent topic key person analysis in online Forum software, with detailed explanations. We discuss detailed experimental results on the research corpus in Section 5, and we conclude this paper in Section 6. |

| − | + | ==2. Related work== | |

| − | =2. | + | |

| − | + | ===2.1 SNA in online Forum=== | |

| − | ==2.1 SNA in online Forum== | + | |

Online Forum is an important platform for information dissemination. A user publishes a post to express his/her views on a given event, and others can browse the posts and create his/her own to form a thread through a unique registration ID [2]. A very important element of posting is the ability to add comments, which enables discussions. Accessibility to posts is generally open, so anyone may read or comment. Online Forum is always busy with activity: every day, a large number of new users will register, and thousands of new posts and millions of new comments are written. The lifetime of posts is very short, and the relationships between users are very dynamic and temporal, providing a large amount of semantic information to explore intensely [6]. | Online Forum is an important platform for information dissemination. A user publishes a post to express his/her views on a given event, and others can browse the posts and create his/her own to form a thread through a unique registration ID [2]. A very important element of posting is the ability to add comments, which enables discussions. Accessibility to posts is generally open, so anyone may read or comment. Online Forum is always busy with activity: every day, a large number of new users will register, and thousands of new posts and millions of new comments are written. The lifetime of posts is very short, and the relationships between users are very dynamic and temporal, providing a large amount of semantic information to explore intensely [6]. | ||

| − | + | Research on online Forum is primarily rooted in public opinion guidance, sociology, linguistics and psychology, while data mining with technology is less frequently employed. However, nearly all online Forum websites record some basic statistics, which lend themselves well for data analysis and important findings. This network model, consisting of the board, posts and comments, can be analyzed by SNA to find the most important or influential users. Around such users, groups that share similar interests will form. | |

| − | + | There are many types of online Forum: campus online Forum, commercial online Forum, professional online Forum, emotional online Forum and individual online Forum. We chose the comprehensive Tianya forum as the basis for our research because persistent livelihood topics are more likely to occur in this active social online Forum. There is some research on the Tianya forum datasets, such as the opinion leader algorithm based on users’ interests, but the accuracy depends on the quality of the interest field [7]. | |

| − | + | ===2.2 Topic discovery=== | |

| − | ==2.2 Topic discovery== | + | |

| − | + | Some results have been achieved in the network topology and topic propagation models, but they are still new. Previous studies can be mainly divided into three categories: (1) the first type of research mainly focuses on the distribution of users to reveal their dynamic characteristics. (2) The second type of research mainly focuses on the topics of discovery and prediction. Wang [8] improved the information diffusion model based on topic influence, and proposed the topic diffusion trend prediction method based on the reply matrix. (3) The third type of research studies semantic communities for user characteristic analysis. Bu et al. [9] proposed a sock puppet detection algorithm that combines authorship-identification techniques with link analysis. | |

Compared to research around sudden hot issues, few studies consider persistent livelihood topic discovery, evolution and traceability. With the rapid dissemination of information, people’s livelihood topics will continue to ferment, and without the necessary regulation and guidance they will inevitably have an impact on the management of networked public opinion. | Compared to research around sudden hot issues, few studies consider persistent livelihood topic discovery, evolution and traceability. With the rapid dissemination of information, people’s livelihood topics will continue to ferment, and without the necessary regulation and guidance they will inevitably have an impact on the management of networked public opinion. | ||

| − | + | ===2.3 Key person extraction=== | |

| − | ==2.3 Key | + | |

| − | + | There are two separate approaches to key person extraction in social networks: those based on context roles and those based on social network structure. The most common key person extraction methods rely on various centrality measures for each separate node. However, these algorithms lack a holistic view, and the node position in the social community is determined by its neighborhoods, such as in degree prestige and degree centrality. Other algorithms are more global, such as proximity prestige, rank prestige, node position, eccentricity and closeness centrality. Much of this research has been applied to different domains (e.g., influence spread, public opinion analysis, and terrorist group analysis) [10]. | |

| − | In fact, the user influence is not solely determined by the overall network topology but confirmed by the local network structure and semantic relationships among active users. No existing algorithm can meet this demand, and because the entire network is not the best choice, the influence field must be determined before the key person may be extracted. The PTSN is a semantic-based local network, so we propose a node position algorithm combined with semantic information to identify key persons. | + | In fact, the user influence is not solely determined by the overall network topology but confirmed by the local network structure and semantic relationships among active users. No existing algorithm can meet this demand, and because the entire network is not the best choice, the influence field must be determined before the key person may be extracted. The <math display="inline">PTSN</math> is a semantic-based local network, so we propose a node position algorithm combined with semantic information to identify key persons. |

| − | + | ==3. Persistent topic extraction in social network== | |

| − | =3. | + | |

| − | + | To obtain the persistent livelihood topic in online Forum, two basic methods are introduced here. The first is the LDA model for extracting topics, and the second is the short text similarity assessment model to distinguish persistent topics and emergency ones. | |

| − | + | ===3.1 LDA=== | |

| − | ==3.1 LDA== | + | |

| − | + | In statistical natural language processing, one common way of modeling the contributions of different topics to a document is to treat each topic as a probability distribution over words, viewing a document as a probabilistic mixture of these topics. Given documents <math display="inline"> D </math> containing <math display="inline"> K </math> topics and <math display="inline"> N </math> unique words: <math display="inline">W= \{w_1,w_2,\ldots ,w_N\}</math>, where each <math display="inline">w_i</math> belongs to some document <math display="inline">d_i</math>, and <math display="inline">z_i</math> is a latent variable indicating the topic from which the ''i''-th word was drawn. The complete probability generative model is defined as follows: | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 73: | Line 69: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math>\ | + | | <math>\left\{ \begin{array}{c} |

| − | {\theta }^{\left(d\right)}\sim Dirichlet(\alpha )\\ | + | {\theta }^{\left(d\right)}\sim \hbox{Dirichlet } (\alpha )\\ |

| − | z_i\vert {\theta }^{\left(d_i\right)}\sim Multinomial({\theta }^{\left(d_i\right)})\\ | + | z_i\vert {\theta }^{\left(d_i\right)}\sim \hbox{Multinomial }({\theta }^{\left(d_i\right)})\\ |

| − | {\varphi }^{\left(z\right)}\sim Dirichlet(\beta )\\ | + | {\varphi }^{\left(z\right)}\sim \hbox{Dirichlet }(\beta )\\ |

| − | w_i\vert z_i,{\varphi }^{\left(z_i\right)}\sim Multinomial({\varphi }^{\left(z_i\right)}) | + | w_i\vert z_i,{\varphi }^{\left(z_i\right)}\sim \hbox{Multinomial }({\varphi }^{\left(z_i\right)}) |

| − | \end{array}</math> | + | \end{array}\right.</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (1) | | style="width: 5px;text-align: right;white-space: nowrap;" | (1) | ||

|} | |} | ||

| + | Here, the hyperparameters <math>\alpha</math> and <math>\beta</math> are mainly used to control the sparsity of the distribution. According to this model, every word <math display="inline">w_i \in W</math> will be assigned to a latent topic <math display="inline">z_i</math>. | ||

| − | + | In a corpus, the goal of LDA is to extract the latent topic <math>z</math> through evaluating the posterior distribution. The normalizing sum in the denominator of this posterior involves <math>T^n</math> terms, where <math>n</math> is the total number of word instances in the corpus. Because this sum does not factorize and cannot be computed directly, Gibbs sampling is now widely adopted. Gibbs sampling estimates the probability of a word belonging to a topic, according to the topic distribution of the other words. At the beginning of the sampling, every word is randomly assigned to a topic as the initial state of a Markov chain. Each state of the chain is an assignment of values to the variables being sampled. After enough iterations, the chain approaches the target distribution and the current values are recorded as the expected probability distribution. In the end, it obtains the topics <math>T=\{t_1,t_2,\ldots , t_z\}</math> and <math>t_i=\{ (t_{i1}, p_{i1}),\ldots , (t_{ij},p_{ij}),\ldots , (t_{iN},p_{iN})\}</math>, where the word <math>t_{ij}</math> appears in topic <math>t_{i}</math> with probability <math>p_{ij}</math>. | |
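The sampling procedure described above can be illustrated with a minimal collapsed Gibbs sampler. This is only a sketch under stated assumptions, not the implementation used in the paper: the function name `lda_gibbs`, the default values of `alpha` and `beta`, the iteration count and the toy documents are all hypothetical, and documents are assumed to be pre-tokenized lists of words.

```python
import random


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Toy collapsed Gibbs sampler for LDA (illustrative sketch only).

    docs: list of documents, each a list of word strings.
    Returns one dict per topic mapping word -> estimated probability.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    vocab_size = len(vocab)

    doc_topic = [[0] * num_topics for _ in docs]             # words of doc d in topic k
    topic_word = [[0] * vocab_size for _ in range(num_topics)]  # word v in topic k
    topic_total = [0] * num_topics                           # all words in topic k
    assignments = []

    # Initial state of the Markov chain: every word gets a random topic.
    for d, doc in enumerate(docs):
        zs = []
        for w in doc:
            z = rng.randrange(num_topics)
            zs.append(z)
            doc_topic[d][z] += 1
            topic_word[z][word_id[w]] += 1
            topic_total[z] += 1
        assignments.append(zs)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for n, w in enumerate(doc):
                v = word_id[w]
                z = assignments[d][n]
                # Remove the current word from the counts.
                doc_topic[d][z] -= 1
                topic_word[z][v] -= 1
                topic_total[z] -= 1
                # Resample its topic given the assignments of all other words.
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][v] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                z = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][n] = z
                doc_topic[d][z] += 1
                topic_word[z][v] += 1
                topic_total[z] += 1

    # Smoothed word distribution of each topic, read off the final state.
    topics = []
    for k in range(num_topics):
        denom = topic_total[k] + vocab_size * beta
        topics.append({w: (topic_word[k][word_id[w]] + beta) / denom for w in vocab})
    return topics
```

Each returned dictionary plays the role of a topic vector <math>t_i</math>: its keys are the words <math>t_{ij}</math> and its values the probabilities <math>p_{ij}</math>. A production setting would more likely rely on an established topic modeling library than on a hand-written sampler.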

| − | + | ===3.2 Short text similarity assessment model=== | |

| − | + | Quan proposed a short text similarity computing method based on probabilistic topics [11]. The algorithm applies a topic model to the short text feature vectors and then determines the semantic similarity by computing the cosine between the vectors. We improve the model for the online Forum title text using the minimum threshold, which requires less computing cost. | |

| − | + | ||

| − | + | The model analyzes topics in two adjacent time periods. Let the former topics be <math>T_{former}=\{t_1,\ldots , t_i, \ldots , t_n\}</math> with corresponding topic vectors <math>t_i=\{ (t_{i1}, p_{i1}),\ldots , (t_{ij},p_{ij}),\ldots , (t_{iN},p_{iN})\}</math>, and the later ones be <math>T_{later}=\{t_1,\ldots , t_k,\ldots , t_m\}</math> with <math>t_k=\{(t_{k1},p_{k1}),\ldots , (t_{kl},p_{kl}),\ldots, (t_{kM},p_{kM})\}</math>. The existing similarity formula is not suitable, so Eq. (2) is used for this work. To obtain high similarity degree topics in adjacent time slots, <math>n\times m</math> comparisons are needed, i.e., each topic must be matched against all topics in the other time period: | |

| − | + | ||

| − | + | ||

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 100: | Line 94: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math>s_{i,k}=\sum_{word\in t_i\cap t_k}min(p(word))</math> | + | | <math>s_{i,k}=\sum_{word\in t_i\cap t_k}\min(p(word))</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (2) | | style="width: 5px;text-align: right;white-space: nowrap;" | (2) | ||

|} | |} | ||

| + | where <math>s_{i,k}</math> is the similarity degree of topic <math>t_i</math> and <math>t_k</math>, which equals the sum of the minimum probability of the words appearing in both topics. If <math>s_{i,k}</math> is larger than threshold <math> \sigma_1</math>, it means the two topics are similar. If a topic continues over some periods, it can be considered a persistent topic. | ||
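As a small illustration of Eq. (2), the similarity can be computed directly from two topic vectors. The sketch below assumes each topic is stored as a dictionary from keyword to probability; the names `topic_similarity` and `match_topics` and the example keywords are hypothetical.

```python
def topic_similarity(topic_a, topic_b):
    """Eq. (2): sum, over the shared words, of the smaller of the two probabilities."""
    shared = topic_a.keys() & topic_b.keys()
    return sum(min(topic_a[w], topic_b[w]) for w in shared)


def match_topics(former, later, sigma_1):
    """Compare every former topic with every later topic (n x m comparisons).

    Returns the index pairs (i, k) whose similarity exceeds the threshold sigma_1.
    """
    return [
        (i, k)
        for i, t_i in enumerate(former)
        for k, t_k in enumerate(later)
        if topic_similarity(t_i, t_k) > sigma_1
    ]


# Hypothetical topic vectors from two adjacent periods.
former = [{"housing": 0.30, "price": 0.20, "tax": 0.10}]
later = [
    {"housing": 0.25, "price": 0.30, "school": 0.10},
    {"exam": 0.40, "school": 0.20},
]
pairs = match_topics(former, later, sigma_1=0.09)
```

Here the first pair shares "housing" and "price", so its similarity is min(0.30, 0.25) + min(0.20, 0.30) = 0.45, while the second pair shares no word and scores 0.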

| − | + | Meanwhile, the size of topic <math>t_i</math> in a certain period can be measured by Eq. (3) | |

| − | + | ||

| − | Meanwhile, the size of topic | + | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 121: | Line 114: | ||

| − | + | Here, the post title <math>d</math> is matched against the keywords of topic <math>t_i</math>, and the relevancy <math>r_{i,d}</math> is the sum of the probabilities of all successfully matched keywords. If <math>r_{i,d}</math> is greater than <math> \sigma_2</math>, then the post is related to the topic. The thresholds <math> \sigma_1</math> and <math> \sigma_2</math> are set in the experiments. | |
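The relevancy test just described can be sketched as follows. This is an assumption-laden illustration: keyword matching is done by plain substring search on the title, which is only one possible reading of "matching", and the names `relevancy` and `is_related` are hypothetical.

```python
def relevancy(title, topic):
    """Sum the probabilities of the topic keywords that occur in the post title.

    topic: dict mapping keyword -> probability.
    Matching is a simple substring test (an assumption of this sketch).
    """
    return sum(p for word, p in topic.items() if word in title)


def is_related(title, topic, sigma_2):
    """A post is related to the topic when its relevancy exceeds sigma_2."""
    return relevancy(title, topic) > sigma_2


# Hypothetical topic and post titles.
topic = {"housing": 0.30, "price": 0.20, "tax": 0.10}
r = relevancy("Why the housing price keeps rising", topic)
```

With this toy topic, the title matches "housing" and "price", giving a relevancy of 0.5; a title containing none of the keywords has relevancy 0 and is not related for any positive threshold.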

| − | + | ==4. Analysis of key person in persistent topic with online Forum== | |

| − | =4. | + | |

| − | + | Two important issues in social network analysis are individual role and social position. Analysis of key persons in persistent topics with online Forum is further considered. | |

| − | Due to the time characteristics, the gathered data should be partitioned into subsequent | + | Due to the time characteristics, the gathered data should be partitioned into <math display="inline"> N </math> consecutive periods of equal length, labeled from 0 to <math display="inline">N-1</math>; these periods may be disjoint or partly overlapping. In the experimental studies (see Section 5), each period has a length of 30 days. |
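A minimal sketch of this partitioning step is given below, covering only the disjoint case with fixed-length windows. The function names, the `(timestamp, post)` tuple layout and the example dates are assumptions made for illustration.

```python
from datetime import datetime


def period_index(timestamp, start, period_days=30):
    """Label of the period (0, 1, 2, ...) that a timestamp falls into."""
    return (timestamp - start).days // period_days


def partition(posts, start, period_days=30):
    """Group (timestamp, post) pairs into disjoint periods of equal length."""
    periods = {}
    for timestamp, post in posts:
        label = period_index(timestamp, start, period_days)
        periods.setdefault(label, []).append(post)
    return periods


# Hypothetical posts; the collection is assumed to start on 1 January 2011.
start = datetime(2011, 1, 1)
posts = [
    (datetime(2011, 1, 1), "post A"),
    (datetime(2011, 1, 30), "post B"),   # 29 days after the start: period 0
    (datetime(2011, 1, 31), "post C"),   # 30 days after the start: period 1
]
periods = partition(posts, start)
```

Partly overlapping periods would need a different scheme in which a post may be assigned to more than one label.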

The LDA was used to obtain the topics in each period and extract the persistent topic across multi-periods through the similarity assessment. Then, for each persistent topic, the social network was generated and the fundamental SNA measures were calculated to identify the key person. | The LDA was used to obtain the topics in each period and extract the persistent topic across multi-periods through the similarity assessment. Then, for each persistent topic, the social network was generated and the fundamental SNA measures were calculated to identify the key person. | ||

| − | In the first step, the interaction data are partitioned into the subsets by month, and the LDA and filtering strategy are used to identify the topics from each partition. Then, the algorithm attempts to associate one topic with another from the neighboring period while fulfilling the criterion of having a similarity degree larger than | + | In the first step, the interaction data are partitioned into the subsets by month, and the LDA and filtering strategy are used to identify the topics from each partition. Then, the algorithm attempts to associate one topic with another from the neighboring period while fulfilling the criterion of having a similarity degree larger than <math> \sigma_1</math>. On the basis of this comparison, the persistent topics that exist for the sufficient number of periods are defined. Following this, the algorithm uses sentiment weighted node positions in the interaction data to identify the key person who has the most influence in the field. |

| − | + | The algorithm consists of six subsequent steps: | |

| − | ''Step 1. The gathered text stream should be partitioned into subsequent N periods with the same length.'' | + | ''Step 1. The gathered text stream should be partitioned into subsequent <math display="inline"> N </math> periods with the same length.'' |

| − | ''Step 2. Extract topics, and then record the relevant posts, users, reply rates etc. ''To achieve this, the algorithm LDA described in | + | ''Step 2. Extract topics, and then record the relevant posts, users, reply rates etc. ''To achieve this, the algorithm LDA described in Section 3.1 is used. The <math display="inline"> z </math> topics will be obtained in every time slice. |

| − | ''Step 3. Simplify the topics using the filtering strategy.'' For a given period | + | ''Step 3. Simplify the topics using the filtering strategy.'' For a given period <math display="inline"> ts </math>, after the attribute filter and topics set <math display="inline">N=Topic (ts)</math> are identified, each topic contains its keywords and the corresponding probability. The topic will be retained once it meets one of the following filtering strategies: |

| − | (1) The number of posts related to the topic ( | + | (1) The number of posts related to the topic (<math>r_{i,d}</math> larger than <math> \sigma_2</math>) is greater than or equal to 10. <math> \sigma_2</math> is 0.05 in Section 5, that is, the post is related if a post title contains a keyword of a certain topic. |

(2) The total number of users involved in the topic is greater than or equal to 10% of the active users of the period; | (2) The total number of users involved in the topic is greater than or equal to 10% of the active users of the period; | ||

| Line 150: | Line 142: | ||

(4) The response rate (the total participation of users divided by the total number of clicks) is greater than or equal to 30%; | (4) The response rate (the total participation of users divided by the total number of clicks) is greater than or equal to 30%; | ||

| − | ''Step 4. The topics in adjacent time are crossed matching. ''To achieve this, the short text similarity assessment model described in | + | ''Step 4. Topics in adjacent time slices are cross-matched. ''To achieve this, the short text similarity assessment model described in Section 3.2 is used. The threshold <math> \sigma_1</math> is 0.09 in Section 5. |

| − | ''Step 5. Identify persistent topics that exist for a minimum period of time.'' Urgent topics have small time spans and simple network evolutions, which do not belong to the persistent topics that this article focuses on. Ephemeral topics do not last for more than two periods, but some may occur in the junction of two periods, so the | + | ''Step 5. Identify persistent topics that exist for a minimum period of time.'' Urgent topics have small time spans and simple network evolutions, which do not belong to the persistent topics that this article focuses on. Ephemeral topics do not last for more than two periods, but some may occur in the junction of two periods, so the <math display="inline"> ts_{req}</math> is defined as the minimum period for topic longevity. In these experiments, it is assumed that <math display="inline"> ts_{req}=3</math>. |
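Steps 4 and 5 can be sketched together as a chaining procedure: topics in neighboring periods are linked when their similarity exceeds <math> \sigma_1</math>, and only chains spanning at least <math display="inline"> ts_{req}</math> periods are kept. The data layout (one list of keyword-to-probability dictionaries per period), the function names and the way branching matches are handled are assumptions of this sketch, not details given in the paper.

```python
def similarity(a, b):
    """Sum of the minimum probabilities of the words shared by two topics."""
    return sum(min(a[w], b[w]) for w in a.keys() & b.keys())


def persistent_chains(periods, sigma_1=0.09, ts_req=3):
    """Link similar topics across adjacent periods and keep the long chains.

    periods: list with one entry per time slice, each a list of topic dicts.
    Returns chains as lists of (period_index, topic_index) pairs.
    """
    finished = []
    active = []  # chains whose last topic lies in the previous period
    for p, topics in enumerate(periods):
        next_active = []
        matched = set()
        for chain in active:
            last_p, last_i = chain[-1]
            previous = periods[last_p][last_i]
            extended = False
            for i, topic in enumerate(topics):
                if similarity(previous, topic) > sigma_1:
                    next_active.append(chain + [(p, i)])
                    matched.add(i)
                    extended = True
            if not extended:
                finished.append(chain)  # the topic did not continue
        # Topics without a predecessor start new chains.
        for i in range(len(topics)):
            if i not in matched:
                next_active.append([(p, i)])
        active = next_active
    finished.extend(active)
    return [chain for chain in finished if len(chain) >= ts_req]


# Hypothetical topics over three periods: "housing" persists, the others do not.
periods = [
    [{"housing": 0.3, "price": 0.2}, {"concert": 0.4}],
    [{"housing": 0.2, "rent": 0.3}],
    [{"housing": 0.25, "price": 0.1}, {"flood": 0.5}],
]
chains = persistent_chains(periods)
```

In this toy input only the housing topic forms a chain of three periods, so it is the only one reported as persistent.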

| − | A set of topics, which consists of similar topics during the periods | + | For a set of similar topics spanning the periods <math>j,j+1,\ldots ,j+s</math>, the numbers of topic-related posts and users are defined, respectively, as follows: |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 161: | Line 153: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math>\ | + | | <math>\left\{ \begin{array}{c} |

| − | POST_i={{\sum }_{t=j}^{j+s}POST}_i(ts)\\ | + | POST_i=\displaystyle\sum_{ts=j}^{j+s}POST_i(ts)\\ |

USER_i=\displaystyle\bigcup_{ts=j}^{j+s}USER_i(ts) | USER_i=\displaystyle\bigcup_{ts=j}^{j+s}USER_i(ts) | ||

| − | \end{array}</math> | + | \end{array}\right.</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (4) | | style="width: 5px;text-align: right;white-space: nowrap;" | (4) | ||

| Line 170: | Line 162: | ||

| − | + | ''Step 6. The key persons in the persistent topic are identified using SWNP. ''First, <math>PTSN= (V,E)</math> is built. The traditional node position algorithm has an experimental basis for large-scale data [12] but does not consider interest, topics and sentiment factors, so this paper provides <math>SWNP(x)</math> to estimate the importance of the node <math display="inline"> x </math> in a local network. | |

Every term/phrase is manually assigned a value between 0 and 1 according to its tone. Oppressive terms range between 0.5 and 1, and a higher value corresponds to a greater degree of oppression. Supportive terms range between 0 and 0.5, and a smaller value corresponds to a greater degree of support. If the phrase is neutral, it is assigned a value of 0.5. | Every term/phrase is manually assigned a value between 0 and 1 according to its tone. Oppressive terms range between 0.5 and 1, and a higher value corresponds to a greater degree of oppression. Supportive terms range between 0 and 0.5, and a smaller value corresponds to a greater degree of support. If the phrase is neutral, it is assigned a value of 0.5. | ||

| Line 181: | Line 173: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math>sentiment_{i,j}=\frac{{\sum }_{k=1}^{n^j}O_{k,j}}{n^j}</math> | + | | <math>sentiment_{i,j}=\frac{{\displaystyle\sum }_{k=1}^{n^j}O_{k,j}}{n^j}</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (5) | | style="width: 5px;text-align: right;white-space: nowrap;" | (5) | ||

|} | |} | ||

| − | + | where <math display="inline">O_{k,j}</math> is the weight of the <math display="inline">k</math>-th emotional word in the comments from <math display="inline"> i </math> to <math display="inline"> j </math>, and <math display="inline">n^j</math> is the number of emotional words in all the comments from <math display="inline"> i </math> to <math display="inline"> j </math>. A value of <math display="inline">sentiment_{i,j}>0.5</math> indicates a negative emotional tendency with a negative commitment function, and <math display="inline">sentiment_{i,j}\le 0.5</math> indicates a positive commitment function. The <math>SWNP(x)</math> can be redefined as follows: | |

| − | < | + | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 199: | Line 190: | ||

|} | |} | ||

| + | where <math display="inline">Y_x</math> is the set of <math display="inline">x</math>’s nearest neighbors, i.e., nodes that are in a direct relation to <math display="inline"> x </math>; <math display="inline">C(y\to x)</math> is the commitment function; <math display="inline"> \varepsilon</math> is a constant coefficient in the range [0,1], and its value denotes the openness of the node position measurement to external influences: a smaller value indicates that <math display="inline">x</math>’s node position is more static and independent, while a larger value means that the node position is more influenced by others. | ||

| − | < | + | The value of the commitment function <math display="inline">C(y\to x)</math> in ''PTSN'' must satisfy the following set of criteria: |

| − | + | (1) The value of commitment is in the range <math display="inline">[-1;1]</math>: <math display="inline">\forall (x,y\in V)\; C(y\to x)\in [-1;1]</math>. | |

| − | + | (2) For each node in the network, the sum of the absolute values of all its commitments must equal 1: <math>\forall (y\in V)\; \sum _{x\in V} |C(y\to x)| =1</math>. | |

| − | + | (3) The commitment to oneself is 0: <math display="inline">\forall (x\in V)\; C(x\to x)=0</math>. | |

| − | + | (4) If there is no relationship from <math display="inline">y</math> to <math display="inline">x</math>, then <math display="inline">C(y\to x) =0</math>. | |

| − | + | (5) If a member <math display="inline"> y </math> is not active with respect to anybody and <math display="inline">n </math> other members <math display="inline"> x_i </math>, <math display="inline">i=1,\ldots ,n</math>, are active with respect to <math display="inline"> y </math>, then instead of satisfying the above criterion 4, the commitment value is distributed equally among all of <math display="inline"> y </math>’s ''acquaintances'' <math display="inline"> x_i </math>, i.e., <math display="inline">C(y\to x_i ) =1/n</math> for <math display="inline">i=1,\ldots ,n</math>. | |

| − | + | Some comments in online Forum are always presented without a clear view, so based on this consideration, we believe that comments labeled with strong emotions tend to communicate more information and therefore should attract greater attention. As such, if <math display="inline">x\to y</math> shows the reply relationship from <math display="inline"> x </math> to <math display="inline"> y </math>, we assume that comments with strong emotions should transmit a greater commitment than just a passing glance. There are three specific cases: | |

| − | < | + | (1) if <math display="inline">x\to y</math> meets the strong negative (0.8, 1] or strong positive [0,0.2), <math display="inline">n_2=4n_1</math>; |

| − | < | + | (2) if <math display="inline">x\to y</math> satisfies the general negative (0.6,0.8] or general positive [0.2,0.4), <math display="inline">n_2=2n_1</math>; |

| − | ( | + | (3) if <math display="inline">x\to y</math> belongs to relatively neutral [0.4, 0.6], <math display="inline">n_2=n_1</math>; |

| − | + | where <math display="inline">n_1</math> is the total number of responses from <math display="inline"> x </math> to <math display="inline"> y </math>, and <math display="inline">n_2</math> is the number of comments after emotional weighting. | |
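The three cases above amount to a multiplier applied to the raw reply count. The sketch below assumes the sentiment value is the mean of the manually assigned word scores in [0, 1], as in Eq. (5); the function names and the choice to treat an empty score list as neutral are hypothetical.

```python
def mean_sentiment(word_scores):
    """Average of the emotional word scores; an empty list is treated as neutral (assumption)."""
    if not word_scores:
        return 0.5
    return sum(word_scores) / len(word_scores)


def emotion_multiplier(sentiment):
    """Weight of a reply relation according to how strong its emotion is."""
    if sentiment > 0.8 or sentiment < 0.2:   # strong negative (0.8, 1] or strong positive [0, 0.2)
        return 4
    if sentiment > 0.6 or sentiment < 0.4:   # general negative (0.6, 0.8] or general positive [0.2, 0.4)
        return 2
    return 1                                  # relatively neutral [0.4, 0.6]


def weighted_replies(n_1, sentiment):
    """n_2: the number of replies from x to y after emotional weighting."""
    return emotion_multiplier(sentiment) * n_1
```

For example, 3 replies with a sentiment of 0.9 count as 12 weighted replies, whereas 3 replies with a sentiment of 0.5 still count as 3.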

| − | + | The value of the commitment function <math display="inline">C (x\to y)</math> can be evaluated as the normalized sum of all activities from <math display="inline"> x </math> to <math display="inline"> y </math> in relation to all activities of <math display="inline"> x </math>: | |

| − | + | ||

| − | The value of the commitment function C( | + | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 231: | Line 221: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math>C(x\rightarrow y)=\frac{A(x\rightarrow y)}{{\sum }_{j=1}^mA(x\rightarrow y_j)}</math> | + | | <math>C(x\rightarrow y)=\frac{A(x\rightarrow y)}{{\displaystyle\sum }_{j=1}^mA(x\rightarrow y_j)}</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (7) | | style="width: 5px;text-align: right;white-space: nowrap;" | (7) | ||

|} | |} | ||

| + | where <math display="inline"> m </math> is the number of all nodes within the <math display="inline">PTSN</math>, and <math display="inline">A(x\to y)</math> is the function that denotes the activity of node <math display="inline"> x </math> directed to node <math display="inline"> y </math>, such as the number of comments from <math display="inline"> x </math> to <math display="inline"> y </math>. Using the emotionally weighted <math display="inline">n_2</math> instead of <math display="inline">n_1</math> as this count is more conducive to finding important nodes in the semantic web. | ||
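As a minimal sketch of Eq. (7), the commitment values can be obtained by dividing each activity count by the total activity of its source node. The function name `commitment` and the dictionary layout are illustrative assumptions, and the activity counts are taken to be already emotionally weighted.

```python
from collections import defaultdict


def commitment(activity):
    """Normalize activities per source node, following Eq. (7).

    activity maps a directed pair (x, y) to A(x -> y).
    The result maps the same pair to C(x -> y).
    """
    totals = defaultdict(float)
    for (x, _y), a in activity.items():
        totals[x] += a  # denominator: all activities of x
    return {
        (x, y): a / totals[x]
        for (x, y), a in activity.items()
        if totals[x] > 0
    }
```

With weighted counts of 12 from a to b and 4 from a to c, the sketch yields a commitment of 0.75 for a to b and 0.25 for a to c, so the commitments of each active node sum to 1.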

| − | + | ==5. Experiments== | |

| − | + | ===5.1 Data set=== | |

| − | + | The dataset is from the Tianya forum ([http://focus.tianya.cn http://focus.tianya.cn]), which is a popular bulletin-board service in China. It includes more than 300 boards, and the total number of registered user identifications (IDs) exceeds 32 million. Since its introduction in 1999, it has become the leading social-networking site in China due to its openness and freedom. We selected the Tianya By-talk board and collected data between January 2011 and December 2013 including 325288 users, 102756 posts and 4524756 replies. Among all the users, 12701 of them wrote at least 1 post in the period, 3724 wrote at least 2 posts and 573 at least 5 posts. Taking into consideration the users who wrote at least 1 post, the average number of posts for each user was 8.09. Most of the users’ behavior consisted of replying to posts or even just browsing; the average number of comments for all users equaled 13.91, which was still greater than 8.09. | |

| − | + | The largest hot topic post has 6571731 clicks and 66274 comments, and 71929 posts have more than 5 comments. In 2011 through 2013, 176346 users wrote at least one comment, 110261 wrote more than one comment, and the most active user posted 10276 comments. Considering only posts that have at least 5 comments, the average number of comments per post was 62.91. In 2011, users wrote 10324 posts and 400571 comments (38.8 comments/post); in 2012, they wrote 31146 posts and 1326819 comments (42.6 comments/post); and in 2013, they wrote 61286 posts and 2797366 comments (45.6 comments/post). | |

| − | + | ||

| − | + | ===5.2 Identification of the topics in specific periods=== | |

| − | < | + | We used LDA to identify the topics in each month, setting <math display="inline">\alpha =0.5,\, \beta =0.1</math>, the topic number <math display="inline">Z=50</math> and the number of Gibbs sampling iterations to 1000. Not all of a month's topics are related to the livelihood issues that this article focuses on, so the unrelated topics are removed by the attribute filter described in Section 4. |

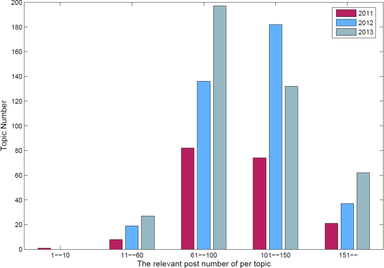

| − | < | + | After applying this attribute filter, a total of 978 topics remained, an average of 27 topics per month. The minimum occurred in the 10<sup>th</sup> month with 9 topics, and the maximum in the 6<sup>th</sup> month with 37 topics. To analyze the size of each topic, [[#img-1|Figure 1]] shows the number of posts related to each topic. Setting <math>\sigma_{2}=0.05</math> retains more valid data for extracting persistent topics; that is, if a title contains a keyword related to a certain topic, the post is retained. Eighty-two percent of the retained topics ranged in size from 61 to 150 related posts. |

| − | + | ||

| − | < | + | <div id='img-1'></div> |

| − | + | {| class="wikitable" style="margin: 0em auto 0.1em auto;border-collapse: collapse;width:auto;" | |

| − | + | |-style="background:white;" | |

| − | + | |style="text-align: center;padding:10px;"| [[Image:Draft_LIN_956018287-image8.png|384px]] | |

| − | + | |- | |

| − | + | | style="background:#efefef;text-align:left;padding:10px;font-size: 85%;"| '''Figure 1'''. The related posts number for each topic | |

| − | + | |} | |

| − | + | ||

| − | + | ||

| − | + | ===5.3 Identification of the persistent topic=== | |

| − | ==5.3 Identification of the persistent topic== | + | |

| − | + | The next analysis concerned the identification of persistent topics, which must exist over a given period. The number of persistent topics is affected by <math>\sigma_1</math>. A topic keyword typically has a frequency of approximately 0.05, so a similarity of 0.1 means that two topics share at least two keywords, in which case they can be regarded as the same topic. The experiments show an important turning point at <math>\sigma_1=0.09</math>, which corresponds to 18 relatively persistent topics. Manual validation indicates that these persistent topics have high accuracy and quality. | |
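The linking of topics across adjacent months can be sketched as follows. The similarity measure is defined earlier in the paper and is not reproduced here, so this sketch substitutes a stand-in: the summed minimum weight of shared keywords. That stand-in, the function names and the data layout are all assumptions made for illustration only.

```python
def similarity(t1, t2):
    """Stand-in similarity (an assumption): summed min weight of shared keywords."""
    return sum(min(w, t2[k]) for k, w in t1.items() if k in t2)


def persistent_chains(months, sigma1=0.09, min_periods=3):
    """Chain each topic to its most similar topic in the following month.

    months is a list with one entry per month; each entry is a list of
    topics, and each topic is a dict mapping keyword -> weight.
    Returns chains of (month_index, topic_index) lasting >= min_periods.
    """
    finished, open_chains = [], []
    for m, topics in enumerate(months):
        next_open, used = [], set()
        for chain in open_chains:
            pm, pi = chain[-1]
            prev = months[pm][pi]
            candidates = [
                (similarity(prev, t), i)
                for i, t in enumerate(topics)
                if i not in used
            ]
            best_sim, best_i = max(candidates, default=(0.0, None))
            if best_i is not None and best_sim >= sigma1:
                used.add(best_i)               # continue the chain
                next_open.append(chain + [(m, best_i)])
            else:
                finished.append(chain)         # the chain ends here
        # topics not claimed by any chain start new chains
        next_open.extend([(m, i)] for i in range(len(topics)) if i not in used)
        open_chains = next_open
    finished.extend(open_chains)
    return [c for c in finished if len(c) >= min_periods]
```

Under this stand-in, two topics that share two keywords of weight 0.05 each reach a similarity of 0.1 and are linked at a threshold of 0.09, while topics sharing a single such keyword are not.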

| − | + | There are 18 persistent topics with 4637 related posts. A total of 91281 users (28% of all users) take part in these topics, which greatly reduces the data size for further analysis. On average, there are 257 related posts per persistent topic; over the minimum period (three months), this is only 86 posts per topic per month. This number is smaller than the size of the general topics retained in Section 5.2, which reflects that persistent topics do not have a high post rate, click rate or response rate but are instead characterized by long duration. | |

| − | + | ===5.4 Analysis of duration time of the persistent topic=== | |

| − | ==5.4 Analysis of duration time of the persistent topic== | + | |

| − | + | Thirteen persistent topics (72%) lasted for 3 months, which is the minimum duration necessary to consider a topic persistent in our analysis. Four persistent topics lasted exactly 4 months, and the longest lasted 5 months. The distribution of persistent topics is relatively uniform; only in May 2013 (the 29th month) and June 2013 (the 30th month) were there four co-existing persistent topics. Data analysis found that this period coincided with the graduation season and the university entrance exam. Additionally, youth films such as “So Young” and singing reality shows such as “X Factor” and “Chinese Idol” caused such topics to remain hot and evolve continuously around this time, although topic evolution is beyond the scope of our research. | |

| − | + | At the same time, the obtained persistent topics are highly diverse, as there is little overlap within the same period. Although two topics separated by a time interval may be similar, they are clearly two different events. Issues concerning graduation, college entrance examinations and employment recur every year in different forms, although this type of topic evolution analysis is not within the scope of this study. Therefore, this algorithm ensures diversity among the persistent topics. | |

| − | + | ===5.5 Persistent topic social network (PTSN)=== | |

| − | ==5.5 Persistent topic social | + | |

| − | + | The goal of the next analysis is to count the posts and the users in each persistent topic. [[#tab-1|Table 1]] shows the basic information of the 18 persistent topics; on average, there are 257 posts and 5071 users per persistent topic. A social network <math>PTSN=(V, E)</math> can be built for each persistent topic, where <math display="inline"> V </math> is a finite set of registered users who take part in the topic (i.e., the ''IDs''), and <math display="inline"> E </math> is a finite set of social relationships (i.e., posts and replies). | |

| − | + | <div class="center" style="font-size: 75%;">'''Table 1'''. The basic information of the persistent topic</div> | |

| − | + | ||

| − | {| style=" | + | <div id='tab-1'></div> |

| + | {| class="wikitable" style="margin: 1em auto 0.1em auto;border-collapse: collapse;font-size:85%;width:auto;" | ||

| + | |-style="text-align:center" | ||

| + | ! No. !! Periods !! Posts !! Users | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: center;"|1 |

| − | | style=" | + | | style="text-align: center;"|3 |

| − | | style=" | + | | style="text-align: center;"|246 |

| − | | style=" | + | | style="text-align: center;"|4835 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: center;"|2 |

| − | | style=" | + | | style="text-align: center;"|3 |

| − | | style=" | + | | style="text-align: center;"|202 |

| − | | style=" | + | | style="text-align: center;"|5124 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|3 |

| − | + | | style="text-align: center;"|3 | |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|316 |

| − | + | | style="text-align: center;"|6147 | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center;"| | + | |

| − | | style="text-align: center;"| | + | |

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|4 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|4 |

| − | + | | style="text-align: center;"|340 | |

| − | + | | style="text-align: center;"|5410 | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center;"| | + | |

| − | | style="text-align: center;"| | + | |

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|5 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|3 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|198 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|4105 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|6 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|3 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|279 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|4716 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|7 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|3 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|248 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|6124 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|8 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|5 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|336 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|4398 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center;"| | + | | style="text-align: center;"|9 |

| − | | style="text-align: center;"| | + | | style="text-align: center;"|3 |

| − | + | | style="text-align: center;"|187 | |

| − | + | | style="text-align: center;"|5627 | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center;"| | + | |

| − | | style="text-align: center;"| | + | |

|- | |- | ||

| − | | style=" | + | | style="text-align: center;"|10 |

| − | | style=" | + | | style="text-align: center;"|3 |

| − | | style=" | + | | style="text-align: center;"|268 |

| − | | style=" | + | | style="text-align: center;"|4981 |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | style="text-align: center;"|11 |

| − | | style=" | + | | style="text-align: center;"|3 |

| − | + | | style="text-align: center;"|227 | |

| − | + | | style="text-align: center;"|5671 | |

| − | | style=" | + | |

| − | | style=" | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: center;"|12 |

| − | + | | style="text-align: center;"|4 | |

| − | + | | style="text-align: center;"|249 | |

| − | + | | style="text-align: center;"|5019 | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | | style=" | + | |

| − | + | ||

| − | + | ||

| − | | style=" | + | |

| − | + | ||

| − | + | ||

| − | | style=" | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center | + | | style="text-align: center;"|13 |

| − | | style="text-align: center | + | | style="text-align: center;"|4 |

| − | + | | style="text-align: center;"|342 | |

| − | + | | style="text-align: center;"|3957 | |

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center | + | | style="text-align: center;"|14 |

| − | | style="text-align: center | + | | style="text-align: center;"|3 |

| − | + | | style="text-align: center;"|179 | |

| − | + | | style="text-align: center;"|6105 | |

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center | + | | style="text-align: center;"|15 |

| − | | style="text-align: center | + | | style="text-align: center;"|4 |

| − | + | | style="text-align: center;"|283 | |

| − | + | | style="text-align: center;"|4281 | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center | + | | style="text-align: center;"|16 |

| − | | style="text-align: center | + | | style="text-align: center;"|3 |

| − | + | | style="text-align: center;"|305 | |

| − | + | | style="text-align: center;"|6289 | |

| − | | style="text-align: center | + | |- |

| − | + | | style="text-align: center;"|17 | |

| − | + | | style="text-align: center;"|3 | |

| − | | style="text-align: center | + | | style="text-align: center;"|269 |

| − | + | | style="text-align: center;"|5042 | |

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

| − | | style="text-align: center | + | |

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style="text-align: center | + | | style="text-align: center;"|18 |

| − | | style="text-align: center | + | | style="text-align: center;"|3 |

| + | | style="text-align: center;"|163 | ||

| + | | style="text-align: center;"|3450 | ||

| + | |} | ||
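The aggregate figures quoted in Sections 5.3 to 5.5 can be recomputed from Table 1. The short sketch below copies the table rows verbatim; the names `TABLE_1` and `summarize` are illustrative only.

```python
# (topic number, periods in months, posts, users), copied from Table 1
TABLE_1 = [
    (1, 3, 246, 4835), (2, 3, 202, 5124), (3, 3, 316, 6147),
    (4, 4, 340, 5410), (5, 3, 198, 4105), (6, 3, 279, 4716),
    (7, 3, 248, 6124), (8, 5, 336, 4398), (9, 3, 187, 5627),
    (10, 3, 268, 4981), (11, 3, 227, 5671), (12, 4, 249, 5019),
    (13, 4, 342, 3957), (14, 3, 179, 6105), (15, 4, 283, 4281),
    (16, 3, 305, 6289), (17, 3, 269, 5042), (18, 3, 163, 3450),
]


def summarize(rows):
    """Return totals, per-topic averages and the duration histogram."""
    n = len(rows)
    total_posts = sum(r[2] for r in rows)
    total_users = sum(r[3] for r in rows)
    durations = {}
    for _no, periods, _posts, _users in rows:
        durations[periods] = durations.get(periods, 0) + 1
    return {
        "topics": n,
        "total_posts": total_posts,
        "total_users": total_users,
        "posts_per_topic": total_posts / n,
        "users_per_topic": total_users / n,
        "durations": durations,
    }
```

Running `summarize(TABLE_1)` gives 4637 posts and 91281 users in total, with 13 topics lasting three months, 4 lasting four months and 1 lasting five months, which agrees with the figures stated in the text.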

| − | |||

| − | |||

| − | + | ===5.6 Node position iterative data processing=== | |

| − | + | ||

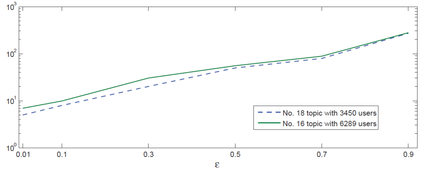

| − | < | + | The experiments revealed that the number of iterations necessary to calculate the node positions for all users in each <math>PTSN</math> depends on the value of the parameter <math>\varepsilon </math> (Eq.(6)): the greater the value of <math>\varepsilon</math>, the greater the number of iterations ([[#img-2|Figure 2]]). Each node in the <math>PTSN</math> was initialized with <math> SWNP=1</math>, and the stop condition was set to <math>\tau =0.00001</math>. For comparative analysis, the iterative processing of <math> SWNP</math> was run with six different values of <math>\varepsilon</math> (0.01, 0.1, 0.3, 0.5, 0.7 and 0.9). Because the 18 <math>PTSN</math>s have similar sizes, they show similar tendencies. |
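The iterative procedure can be sketched as below. Eq. (6) is not reproduced in this part of the text, so the sketch assumes the commonly used node position update, in which the new value of a node is (1 − ε) plus ε times the sum of its in-neighbors' values weighted by their commitments. That update rule, the function name `node_positions` and the stopping test on the largest per-node change are assumptions, not a statement of the authors' exact implementation.

```python
def node_positions(commit, nodes, eps=0.5, tau=1e-5, max_iter=10_000):
    """Iterate node positions until the largest change is at most tau.

    commit maps a directed pair (y, x) to the commitment C(y -> x).
    Returns the final values and the number of iterations performed.
    """
    value = {x: 1.0 for x in nodes}          # initialization: every node at 1
    incoming = {x: [] for x in nodes}
    for (y, x), c in commit.items():
        incoming[x].append((y, c))
    for iteration in range(1, max_iter + 1):
        new = {
            x: (1 - eps) + eps * sum(value[y] * c for y, c in incoming[x])
            for x in nodes
        }
        delta = max(abs(new[x] - value[x]) for x in nodes)
        value = new
        if delta <= tau:                      # stop condition
            return value, iteration
    return value, max_iter
```

In this sketch, a larger ε weakens the damping of each update, so more iterations are needed before the change falls below τ. When the commitments of every node sum to 1, the update also preserves the sum of all values, which keeps their average at 1.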

| − | + | ||

| − | < | + | <div id='img-2'></div> |

| − | | | + | {| class="wikitable" style="margin: 0em auto 0.1em auto;border-collapse: collapse;width:auto;" |

| − | + | |-style="background:white;" | |

| − | + | |style="text-align: center;padding:10px;"| [[Image:Draft_LIN_956018287-image9.png|426px]] | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | style="background:#efefef;text-align:left;padding:10px;font-size: 85%;"| '''Figure 2'''. The number of iterations in relation to <math>\varepsilon </math> |

| − | + | |} | |

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | |||

| − | |||

| − | < | + | The experiments revealed that the <math> SWNP</math> does not noticeably increase the number of iterations or the processing time compared with <math> NP</math>. The sentiment analysis gives every comment only a one-off score to determine its emotional inclination (positive or negative), so linearly enhancing the corresponding comments leaves the iteration processing unchanged and adds only linear time complexity. For a clearer demonstration, the <math> SWNP</math> value refers to the absolute value unless stated otherwise. Next, the distribution characteristics of the <math> SWNP</math> are analyzed to discover the important nodes. |

| − | + | ||

| − | + | ===5.7 Distribution characteristics of SWNP=== | |

| − | + | ||

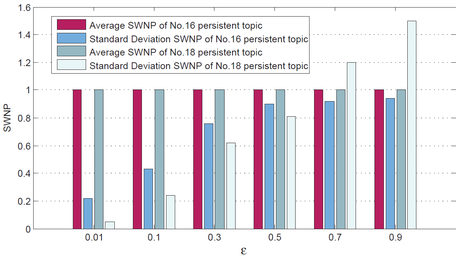

| − | < | + | The experiments analyze the distribution characteristics of <math> SWNP</math> in the <math>18\, PTSN</math>, and [[#img-3|Figure 3]] gives the average <math> SWNP</math> and its standard deviation in the <math>No.16</math> and <math>No.18\,PTSN</math> for different <math>\varepsilon</math>. The average SWNP does not depend on <math>\varepsilon</math>, and it can be formally demonstrated that the average SWNP equals approximately 1 in all cases. On the other hand, the standard deviation differs substantially depending on <math>\varepsilon</math>: the greater the <math>\varepsilon</math>, the greater the standard deviation. In other words, the <math> SWNP</math> values become increasingly unevenly distributed as <math>\varepsilon</math> grows, which is consistent with the experimental data. |

| − | + | ||

| − | < | + | <div id='img-3'></div> |

| + | {| class="wikitable" style="margin: 0em auto 0.1em auto;border-collapse: collapse;width:auto;" | ||

| + | |-style="background:white;" | ||

| + | |style="text-align: center;padding:10px;"| [[Image:Draft_LIN_956018287-image10.png|456px]] | ||

|- | |- | ||

| − | | | + | | style="background:#efefef;text-align:left;padding:10px;font-size: 85%;"| '''Figure 3'''. Average <math> SWNP</math> and their standard deviations in relation to <math>\varepsilon</math> |

| − | + | |} | |

| − | |||

| − | |||

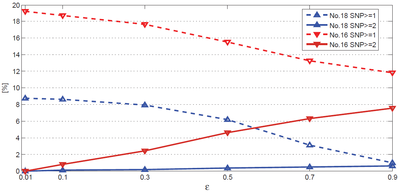

| − | < | + | The distribution characteristics of <math> SWNP</math> are determined by the network topology; for example, the standard deviation of <math>No.18</math> varies more noticeably than that of <math>No.16</math>. This result indicates a greater spread of <math> SWNP</math> in <math>No.18\, PTSN</math>, as there are a few nodes with extreme values. It can also be noted that the <math> SWNP</math> of over 81% of users is less than 1, which means that only a few members exceed the average value of 1. The differences between members’ <math> SWNP</math> also increase for greater <math>\varepsilon</math>, and this holds for all the <math>18\,PTSN</math>. The <math>No.18 \, PTSN</math> shows the most pronounced change in standard deviation: when <math>\varepsilon =0.9</math>, fewer than 1% of users have a <math> SWNP >1</math>, and these users are clearly important. [[#img-4|Figure 4]] shows the percentage of users with <math> SWNP\ge 1</math> and <math> SWNP\ge 2</math> within <math>No.18</math> and <math>No.16 \,PTSN</math> in relation to <math>\varepsilon</math>. |

| − | + | ||

| − | < | + | <div id='img-4'></div> |

| − | | | + | {| class="wikitable" style="margin: 0em auto 0.1em auto;border-collapse: collapse;width:auto;" |

| − | + | |-style="background:white;" | |

| − | + | |style="text-align: center;padding:10px;"| [[Image:Draft_LIN_956018287-image11.png|402px]] | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | style="background:#efefef;text-align:left;padding:10px;font-size: 85%;"| '''Figure 4'''. The percentage of users with <math> SWNP\ge 1</math> and <math> SWNP\ge 2</math> within <math>No.18</math> and <math>No.16\, PTSN</math> in relation to <math>\varepsilon</math> |

| − | + | |} | |

| − | |||

| − | |||

| − | < | + | It can be seen that the different <math>PTSN</math>s have the same <math> SWNP</math> distribution trend, with the <math> SWNP\ge 1</math> nodes decreasing and <math> SWNP\ge 2</math> nodes increasing. The average percentage of nodes with <math> SWNP\ge 2</math> is 4.7% in all the <math>18\,PTSN</math> (<math>No.16</math> with 7.54% and <math>No.18</math> with 0.57%). This conclusion can help us identify the important nodes in persistent topic social networks. The percentages of users with <math> SWNP\ge 1</math> and <math> SWNP\ge 2</math> are 3.12% and 0.49%, respectively, in <math>No.18 \,PTSN</math> when <math>\varepsilon =0.7</math>, so 3.12% of users can be regarded as active users and 0.49% as key persons in this topic. In fact, the greater the <math>\varepsilon</math>, the more distinguishable the results, but the larger number of iterations directly increases the processing time. Generally, the parameter is chosen according to the network scale. However, nodes with high <math> SWNP</math> values do not necessarily represent key persons, as the adjacent nodes may pass on a lot of negative energy (if the commitment function is less than 0). Therefore, sentiment analysis is needed to actually identify the key persons. |

| − | + | ||

| − | + | ===5.8 The Top N key persons in PTSN=== | |

| − | + | ||

| − | < | + | Extracting the Top <math display="inline"> N </math> key persons in <math>PTSN</math> is achieved by ranking the nodes according to their degree of importance. The algorithm sorts the nodes according to the <math> SWNP</math>, and then modifies the list using the emotional attributes. The algorithms used for comparison are <math> IDC </math> (Indegree Prestige Centrality), <math>ODC </math> (Outdegree Prestige Centrality) and <math>PR</math> (PageRank). <math> IDC </math> is based on the indegree number, so it takes into account the number of members that are adjacent to a particular member of the community, as follows: <math display="inline">IDC(x) =i(x)/(m-1)</math>, where <math display="inline"> m </math> is the number of nodes in the network, and <math>i(x)</math> is the number of members from the first level neighborhood that are adjacent to <math display="inline"> x</math>. In other words, more prominent people receive more nominations from members of the community. <math>ODC </math> takes into account the outdegree number of the member <math display="inline"> x</math>, i.e., the edges that are directed from the given node, as follows: <math display="inline">ODC(x) =o(x)/(m-1)</math>, where <math display="inline">o(x)</math> is the number of first level neighbors to which <math display="inline"> x</math> is adjacent. Users who have low outdegree centrality are not very open to the external world and do not communicate with many members. <math>ODC </math> and <math> IDC </math> are the simplest and most intuitive measures that can be used in network analysis. <math>PR</math> is the measure that Google uses to rank the pages in its search engine according to the importance of a particular page relative to the others. [[#tab-2|Table 2]] gives the top 10 important nodes using different methods in the <math>No.18\, PTSN</math> with 3450 nodes. |
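The two degree-based baselines can be sketched directly from their definitions. The function name `degree_centralities` and the edge-list input are illustrative assumptions; the sketch counts distinct first level neighbors, ignores self-loops and assumes a network with at least two nodes.

```python
def degree_centralities(edges):
    """Compute IDC(x) = i(x)/(m-1) and ODC(x) = o(x)/(m-1).

    edges is an iterable of directed pairs (source, target).
    Returns two dicts keyed by node: (idc, odc).
    """
    edges = list(edges)
    nodes = {n for edge in edges for n in edge}
    m = len(nodes)
    if m < 2:
        raise ValueError("at least two nodes are required")
    in_neighbors = {n: set() for n in nodes}
    out_neighbors = {n: set() for n in nodes}
    for source, target in edges:
        if source != target:                 # ignore self-loops
            out_neighbors[source].add(target)
            in_neighbors[target].add(source)
    idc = {n: len(in_neighbors[n]) / (m - 1) for n in nodes}
    odc = {n: len(out_neighbors[n]) / (m - 1) for n in nodes}
    return idc, odc
```

In a four-node network where a, c and d all reply to b and b replies only to a, node b reaches the maximum indegree centrality of 1 while its outdegree centrality is 1/3.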

| − | + | ||

| − | < | + | <div class="center" style="font-size: 75%;">'''Table 2'''. Top 10 users in ''No.18 PTSN''</div> |

| − | + | ||

| − | < | + | <div id='tab-2'></div> |

| − | | | + | {| class="wikitable" style="margin: 1em auto 0.1em auto;border-collapse: collapse;font-size:85%;width:auto;" |

| − | + | |-style="text-align:center" | |

| − | + | ! colspan='2' style="text-align: center;vertical-align: top;"|Pos.!! <math>\varepsilon =0.01</math> !! <math>\varepsilon =0.1</math> !! <math>\varepsilon =0.3</math> !! <math>\varepsilon =0.5</math> !! <math>\varepsilon =0.7</math> !! <math>\varepsilon =0.9</math> !! <math> IDC </math> !! <math>ODC </math> !! <math>PR</math> | |

| − | + | ||

| − | + | ||

| − | < | + | |

| − | + | ||

| − | + | ||

| − | < | + | |

| − | + | ||

| − | + | ||

| − | < | + | |

|- | |- | ||

| − | | style=" | + | | style="text-align: center;vertical-align: top;"|1 |

| − | | style=" | + | | style="text-align: center;vertical-align: top;"|ID <br> Val. |

| + | | style="text-align: center;vertical-align: top;"|122756 <br> 1.834 | ||

| + | | style="text-align: center;vertical-align: top;"|'''22614''' <br> '''5.634''' | ||

| + | | style="text-align: center;vertical-align: top;"|307146 <br> 12.458 | ||

| + | | style="text-align: center;vertical-align: top;"|307146 <br> 15.762 | ||

| + | | style="text-align: center;vertical-align: top;"|8961 <br> 20.546 | ||

| + | | style="text-align: center;vertical-align: top;"|8961 <br> 25.874 | ||

| + | | style="text-align: center;vertical-align: top;"|14864 <br> 0.214 | ||

| + | | style="text-align: center;vertical-align: top;"|7996 <br> 0.130 | ||

| + | | style="text-align: center;vertical-align: top;"|22614 <br> 0.0133 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|2 | ||

| + | | style="text-align: center;vertical-align: top;"|ID <br> Val. | ||

| + | | style="text-align: center;vertical-align: top;"|235523 <br> 1.627 | ||

| + | | style="text-align: center;vertical-align: top;"|307146<br>5.301 | ||

| + | | style="text-align: center;vertical-align: top;"|8961<br>12.041 | ||

| + | | style="text-align: center;vertical-align: top;"|8961<br>15.240 | ||

| + | | style="text-align: center;vertical-align: top;"|307146<br>20.121 | ||

| + | | style="text-align: center;vertical-align: top;"|307146<br>21.371 | ||

| + | | style="text-align: center;vertical-align: top;"|248153<br>0.197 | ||

| + | | style="text-align: center;vertical-align: top;"|200416<br>0.124 | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>0.0105 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|3 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|57681<br>1.526 | ||

| + | | style="text-align: center;vertical-align: top;"|8961<br>4.982 | ||

| + | | style="text-align: center;vertical-align: top;"|20547<br>11.878 | ||

| + | | style="text-align: center;vertical-align: top;"|20547<br>15.046 | ||

| + | | style="text-align: center;vertical-align: top;"|20547<br>16.824 | ||

| + | | style="text-align: center;vertical-align: top;"|196349<br>18.627 | ||

| + | | style="text-align: center;vertical-align: top;"|84134<br>0.182 | ||

| + | | style="text-align: center;vertical-align: top;"|14267<br>0.120 | ||

| + | | style="text-align: center;vertical-align: top;"|89712<br>0.0092 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|4 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|'''22614'''<br>'''1.475''' | ||

| + | | style="text-align: center;vertical-align: top;"|20547<br>4.870 | ||

| + | | style="text-align: center;vertical-align: top;"|'''22614'''<br>'''11.526''' | ||

| + | | style="text-align: center;vertical-align: top;"|'''22614'''<br>'''14.872''' | ||

| + | | style="text-align: center;vertical-align: top;"|'''22614'''<br>'''16.345''' | ||

| + | | style="text-align: center;vertical-align: top;"|276482<br>18.064 | ||

| + | | style="text-align: center;vertical-align: top;"|33224<br>0.176 | ||

| + | | style="text-align: center;vertical-align: top;"|14864<br>0.106 | ||

| + | | style="text-align: center;vertical-align: top;"|6401<br>0.0088 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|5 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|307146<br>1.404 | ||

| + | | style="text-align: center;vertical-align: top;"|57681<br>4.633 | ||

| + | | style="text-align: center;vertical-align: top;"|276482<br>10.954 | ||

| + | | style="text-align: center;vertical-align: top;"|57681<br>14.534 | ||

| + | | style="text-align: center;vertical-align: top;"|57681<br>15.015 | ||

| + | | style="text-align: center;vertical-align: top;"|20547<br>17.349 | ||

| + | | style="text-align: center;vertical-align: top;"|313375<br>0.172 | ||

| + | | style="text-align: center;vertical-align: top;"|9246<br>0.095 | ||

| + | | style="text-align: center;vertical-align: top;"|85216<br>0.0080 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|6 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|8961<br>1.377 | ||

| + | | style="text-align: center;vertical-align: top;"|276482<br>4.315 | ||

| + | | style="text-align: center;vertical-align: top;"|235523<br>10.467 | ||

| + | | style="text-align: center;vertical-align: top;"|122756<br>13.801 | ||

| + | | style="text-align: center;vertical-align: top;"|122756<br>15.246 | ||

| + | | style="text-align: center;vertical-align: top;"|235523<br>16.202 | ||

| + | | style="text-align: center;vertical-align: top;"|51229<br>0.154 | ||

| + | | style="text-align: center;vertical-align: top;"|81820<br>0.087 | ||

| + | | style="text-align: center;vertical-align: top;"|578<br>0.0078 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|7 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|276482<br>1.306 | ||

| + | | style="text-align: center;vertical-align: top;"|122756<br>4.157 | ||

| + | | style="text-align: center;vertical-align: top;"|122756<br>10.348 | ||

| + | | style="text-align: center;vertical-align: top;"|235523<br>13.008 | ||

| + | | style="text-align: center;vertical-align: top;"|235523<br>15.205 | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>15.977 | ||

| + | | style="text-align: center;vertical-align: top;"|52166<br>0.143 | ||

| + | | style="text-align: center;vertical-align: top;"|241357<br>0.084 | ||

| + | | style="text-align: center;vertical-align: top;"|3601<br>0.0076 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|8 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|20547<br>1.288 | ||

| + | | style="text-align: center;vertical-align: top;"|235523<br>3.946 | ||

| + | | style="text-align: center;vertical-align: top;"|57681<br>8.002 | ||

| + | | style="text-align: center;vertical-align: top;"|276482<br>11.328 | ||

| + | | style="text-align: center;vertical-align: top;"|196349<br>14.548 | ||

| + | | style="text-align: center;vertical-align: top;"|57681<br>15.279 | ||

| + | | style="text-align: center;vertical-align: top;"|7996<br>0.132 | ||

| + | | style="text-align: center;vertical-align: top;"|120608<br>0.079 | ||

| + | | style="text-align: center;vertical-align: top;"|14027<br>0.0073 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|9 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|196349<br>1.270 | ||

| + | | style="text-align: center;vertical-align: top;"|196349<br>3.415 | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>7.856 | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>11.340 | ||

| + | | style="text-align: center;vertical-align: top;"|314627<br>13.851 | ||

| + | | style="text-align: center;vertical-align: top;"|122756<br>14.675 | ||

| + | | style="text-align: center;vertical-align: top;"|921712<br>0.121 | ||

| + | | style="text-align: center;vertical-align: top;"|122412<br>0.070 | ||

| + | | style="text-align: center;vertical-align: top;"|39240<br>0.0070 | ||

| + | |- | ||

| + | | style="text-align: center;vertical-align: top;"|10 | ||

| + | | style="text-align: center;vertical-align: top;"|ID<br>Val. | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>1.256 | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>3.097 | ||

| + | | style="text-align: center;vertical-align: top;"|196349<br>6.912 | ||

| + | | style="text-align: center;vertical-align: top;"|196349<br>9.282 | ||

| + | | style="text-align: center;vertical-align: top;"|70064<br>11.067 | ||

| + | | style="text-align: center;vertical-align: top;"|314627<br>12.544 | ||

| + | | style="text-align: center;vertical-align: top;"|810204<br>0.117 | ||

| + | | style="text-align: center;vertical-align: top;"|15246<br>0.059 | ||

| + | | style="text-align: center;vertical-align: top;"|317540<br>0.0067 | ||

| + | |} | ||


| − | < | + | The ranking of important nodes is relatively stable across different values of <math>\varepsilon</math>. As the simplest and most intuitive measures in network analysis, <math>ODC</math> and <math>IDC</math> have low accuracy. <math>PR</math> produces a reasonable node ranking but has two main shortcomings: (1) without a commitment function, all links carry the same weight and importance, because a node's PR value is distributed evenly over its outgoing links with no consideration of interaction strength; (2) it includes no sentiment analysis for identifying effective opinion leaders. After ranking, we analyzed the ratio of negative emotions <math>(C(y\to x) \le 0)</math> for a selected node (e.g., ID 22614). Because 73% of its commitment functions are less than 0, the node is an active user but not a positive advocate. This distinction helps to control the spread of false information and supports public opinion analysis and other follow-up work. |
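The negative-emotion ratio described above can be illustrated with a minimal sketch. It assumes that the ratio is taken over the incoming commitment values <math>C(y\to x)</math> of the selected node <math>x</math>; the function name, the dictionary layout and the toy values are hypothetical and are not taken from the experiment.

```python
def negative_commitment_ratio(commitments, node):
    """Share of incoming commitment values C(y -> node) that are <= 0.

    `commitments` maps (y, x) pairs to C(y -> x); this layout is an
    assumption made for illustration only.
    """
    incoming = [c for (_, dst), c in commitments.items() if dst == node]
    if not incoming:
        return 0.0  # no incoming links: treat the ratio as zero
    return sum(1 for c in incoming if c <= 0) / len(incoming)


# Hypothetical toy data, not the values measured in the paper.
toy_commitments = {
    ("a", "22614"): -0.4,
    ("b", "22614"): -0.1,
    ("c", "22614"): 0.3,
    ("d", "22614"): 0.0,
    ("a", "57681"): 0.5,
}

ratio = negative_commitment_ratio(toy_commitments, "22614")
```

A node with a high rank and a high ratio would, under this reading, be flagged as active but not as a positive advocate.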


| + | <math> SWNP</math> identifies key persons within a specific topic, so it cannot be evaluated with typical methods such as Google’s search engine or user ranking lists based on click rate. To further confirm the stability of the algorithm, we analyzed the community roles and real occupational information of the top 10 users in different <math>PTSN</math>. Manual verification of these results shows that a high level of accuracy is maintained. | ||
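As an illustration of how a top-10 list can be obtained from an iterative node position calculation, the sketch below assumes the basic node position update of Kazienko et al. [12], <math>NP(x) = (1-\varepsilon) + \varepsilon \sum_{y} NP(y)\, C(y\to x)</math>. The sentiment-weighted commitment function of <math>SWNP</math> is defined earlier in the paper and is not reproduced here; the function names, the dictionary layout and the toy network are hypothetical.

```python
def node_position(commitments, epsilon=0.5, tol=1e-9, max_iter=1000):
    """Iterate NP(x) = (1 - eps) + eps * sum_y NP(y) * C(y -> x).

    `commitments` maps (y, x) pairs to C(y -> x). This is a sketch of the
    plain node position update, not the paper's exact SWNP procedure.
    """
    nodes = sorted({n for edge in commitments for n in edge})
    scores = {n: 1.0 for n in nodes}  # common starting value for all nodes
    for _ in range(max_iter):
        updated = {n: 1.0 - epsilon for n in nodes}
        for (y, x), c in commitments.items():
            updated[x] += epsilon * scores[y] * c
        delta = max(abs(updated[n] - scores[n]) for n in nodes)
        scores = updated
        if delta < tol:  # stop once the values no longer change
            break
    return scores


def top_k(scores, k=10):
    """Return the k node IDs with the highest node position."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical toy network; outgoing commitments of each node sum to 1.
toy_commitments = {
    ("a", "b"): 0.5,
    ("a", "c"): 0.5,
    ("b", "c"): 1.0,
    ("c", "a"): 1.0,
}

toy_scores = node_position(toy_commitments, epsilon=0.5)
toy_ranking = top_k(toy_scores, k=3)
```

Rerunning the sketch with several values of <code>epsilon</code> and comparing the resulting rankings is one simple way to check ranking stability on a given network.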

| − | + | ==6. Conclusions== | |

| − | + | This paper provides two main independent approaches for identifying key persons in online forums: (i) discovery of persistent topics and (ii) extraction of key persons using <math> SWNP</math>. Persistent topics are identified mainly by combining the ''LDA'' model with a similarity model along the timeline. <math> SWNP</math> is a new method of node position analysis that takes into account the node positions of neighbors as well as the strength and emotional tendency of connections between network nodes. The data come from the Tianya forum, as indicated in Section 5. The experiment shows that persistent topics are far fewer than urgent topics, and that most of them last approximately 3 months and are uniformly distributed along the timeline. In the established ''PTSN'', high-influence persons are extracted through the iterative <math> SWNP</math> calculation and analyzed through comparison experiments and manual verification. The sentiment weighting in <math> SWNP</math> mainly reflects that emotional intensity can be converted into a number of comments, which changes the value of the commitment function and the iterative results. In addition, negative emotions can be used to refine the notion of key persons to a certain extent, for example by discovering factions with different ideas, online water armies and publishers of false advertisements. |

| − | = | + | ==Funding== |

| − | + | This work was supported by the self-funded project of the Harbin Science and Technology Bureau (2022ZCZJCG023, 2023ZCZJCG006), the Harbin Social Science Federation special topic (2023HSKZ10), the Research Project of the Education Department of Jilin Province (No. JJKH20210674KJ and No. JJKH20220445KJ), and the 2022 Science and Technology Department of Jilin Province (20230101243JC). |

| − | == | + | ==References== |

| − | + | <div class="auto" style="text-align: left;width: auto; margin-left: auto; margin-right: auto;font-size: 85%;"> | |

| − | + | [1] Kulunk A., Kalkan S.C., Bakirci A., et al. Session-based recommender system for social networks' Forum platform. 2020 28th Signal Processing and Communications Applications Conference (SIU), pp. 1-4, 2020. | |

| − | + | [2] Lu D., Lixin D. Sentiment analysis in Chinese BBS. Intelligence Computation and Evolutionary Computation, pp. 869-873, 2013. | |

| − | + | [3] Wasserman S., Faust K. Social network analysis: Methods and applications. Cambridge University Press, New York, 1994. | |

| − | + | [4] Liu H., Li B.W. Hot topic detection research of internet public opinion based on affinity propagation clustering. In: He X., Hua E., Lin Y., Liu X. (eds) Computer Informatics Cybernetics and Applications, Vol. 107, pp. 261-269, 2012. | |

| − | + | [5] Zhang F., Si G.Y., Pi L. Study on rumor spreading model based on evolution game. Journal of System Simulation, pp. 1772-1775, 2011. | |

| − | + | [6] Gregory A.L., Piff P.K. Finding uncommon ground: Extremist online forum engagement predicts integrative complexity. PLOS ONE, 16(1):e0245651, 2021. | |

| − | + | [7] Liu J., Cao Z., Cui K., Xie F. Identifying important users in sina microblog. Multimedia 2012 Fourth International Conference on IEEE, Nanjing, China, pp. 839-842, 2012. | |

| − | + | [8] Wang W. The study of topic diffusion state presentation and trend prediction within BBS. Procedia Engineering, 29:2995-3001, 2012. |

| − | + | [9] Bu Z., Xia Z., Wang J. A sock puppet detection algorithm on virtual spaces. Knowledge-Based Systems, 37:366-377, 2013. |

| − | + | [10] Carrington P., Scott J., Wasserman S. Models and methods in social network analysis. Cambridge University Press, Cambridge, 2005. |

| − | + | [11] Quan X.J., Liu G., Lu Z., Ni X.L., Liu W.Y. Short text similarity based on probabilistic topics. Knowledge and Information Systems, 25:473-491, 2010. |

| − | ''' | + | [12] Kazienko P., Musiał K., Zgrzywa A. Evaluation of node position based on email communication. Control and Cybernetics, 38(1):67-86, 2009. |

Latest revision as of 10:54, 21 December 2023

Abstract

The influence of a user on an online forum should not be determined simply by the global network topology, but rather within the corresponding local network defined by the user’s active range and semantic relations. Current analysis methods mostly focus on urgent topics while ignoring persistent topics, although persistent topics often have important implications for public opinion analysis. Therefore, this paper explores semantics-based key person analysis in persistent topics on online forums. First, the interaction data are partitioned into subsets by month, and Latent Dirichlet Allocation (LDA) and a filtering strategy are used to identify the topics in each partition. Then, each topic is associated with topics in the adjacent time slice that meet a high-similarity criterion. On this basis, persistent topics are defined as topics that exist for a sufficient number of periods. Following this, the paper provides the commitment function update criteria for the persistent topic social network (PTSN), based on semantics and the sentiment weighted node position (SWNP), to identify the key person who has the most influence in the field. Finally, emotional tendency analysis is applied to correct the results. Experiments on real data sets validate the effectiveness of these methods.

Keywords: Online Forum, persistent topic, key person, social network analysis

1. Introduction

As an electronic information service system on the Internet, an online forum provides a public electronic space in which each user can post messages and put forward views [1]. Online forums gather many users who are willing to share their experiences, information and ideas; through a unique registration ID, a user can browse others’ posts and publish his/her own to form a thread [2].